Evaluate a RAG app

Use Humanloop to evaluate more complex workflows like Retrieval Augmented Generation (RAG)

This tutorial demonstrates how to take an existing RAG (Retrieval Augmented Generation) pipeline and use Humanloop to evaluate it. At the end of the tutorial you’ll understand how to:

- Set up logging for your AI application using a Flow.

- Create a Dataset and run Evaluations to benchmark the performance of your RAG pipeline.

- Extend your logging to include individual steps like Prompt and Tool steps within your Flow.

- Use evaluation reports to iteratively improve your application.

The full code for this tutorial is available in the Humanloop Cookbook.

Example RAG Pipeline

In this tutorial we’ll first implement a simple RAG pipeline to do Q&A over medical documents without Humanloop. Then we’ll add Humanloop and use it for evals. Our RAG system will have three parts:

- Dataset: A version of the MedQA dataset from Hugging Face.

- Retriever: Chroma as a simple local vector DB.

- Prompt: Managed in code, populated with the user’s question and retrieved context.

We’ll walk through what the code does step-by-step and you can follow along in the cookbook. The first thing we’ll do is create our simple RAG app.

Set-up

Clone the Cookbook repository from GitHub and install the required packages:

Set up environment variables:
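As a minimal sketch of this step, the snippet below reads API keys from the environment and fails early if one is missing. The variable names `OPENAI_API_KEY` and `HUMANLOOP_API_KEY` and the helper `load_api_keys` are assumptions for illustration; use whichever names the cookbook and your model provider expect.

```python
import os

# Assumed variable names; adjust to match your own setup.
REQUIRED_KEYS = ("OPENAI_API_KEY", "HUMANLOOP_API_KEY")


def load_api_keys(env=None, required=REQUIRED_KEYS):
    """Return the required keys from `env`, raising if any are missing or empty."""
    env = os.environ if env is None else env
    missing = [key for key in required if not env.get(key)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {key: env[key] for key in required}
```

Calling `load_api_keys()` with no arguments reads from `os.environ`; passing a dictionary makes the function easy to exercise in tests.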

Set up the Vector DB:
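To make the retrieval step concrete without any dependencies, here is a toy in-memory stand-in for a vector DB. It is not Chroma and does not use learned embeddings: it ranks documents by cosine similarity over simple word counts. The class `ToyVectorStore` and its methods are hypothetical names for illustration only.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """Hypothetical stand-in for a vector DB, using word counts as 'vectors'."""

    def __init__(self):
        self._docs = []  # list of (doc_id, text, word-count vector)

    def add(self, doc_id, text):
        self._docs.append((doc_id, text, Counter(tokenize(text))))

    def query(self, question, n_results=1):
        """Return the texts of the n_results most similar documents."""
        q = Counter(tokenize(question))
        ranked = sorted(self._docs, key=lambda d: cosine(q, d[2]), reverse=True)
        return [text for _, text, _ in ranked[:n_results]]


store = ToyVectorStore()
store.add("d1", "Aspirin inhibits platelet aggregation.")
store.add("d2", "Insulin lowers blood glucose levels.")
top = store.query("What lowers blood glucose?")
```

A real vector DB replaces the word-count vectors with embeddings and an index, but the add-then-query flow has the same shape.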

Define the Prompt:
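A prompt managed in code can be as simple as a string template with placeholders for the question and the retrieved context. The template wording and the helper `populate_template` below are illustrative assumptions, not the cookbook's actual prompt.

```python
# Hypothetical template; the cookbook's wording will differ.
PROMPT_TEMPLATE = (
    "Answer the question using only the context provided.\n\n"
    "Context:\n{context}\n\n"
    "Question: {question}\n"
    "Answer:"
)


def populate_template(question, retrieved_docs):
    """Fill the template with the user's question and the retrieved documents."""
    context = "\n---\n".join(retrieved_docs)
    return PROMPT_TEMPLATE.format(context=context, question=question)
```

Keeping the template as a module-level constant means any change to it is a visible code change, which makes it easier to tell prompt versions apart.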

Define the RAG Pipeline:
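The pipeline itself is a short composition: retrieve context, build the prompt, call the model. The sketch below takes the retriever and the model call as plain functions so that it runs without any external service; `ask_question`, `fake_retrieve`, and `fake_generate` are hypothetical names, and the fake functions stand in for the real vector DB query and LLM call.

```python
from typing import Callable, List


def ask_question(
    question: str,
    retrieve: Callable[[str], List[str]],
    generate: Callable[[str], str],
) -> str:
    """Retrieve context for the question, build a prompt, and generate an answer."""
    docs = retrieve(question)
    context = "\n".join(docs)
    prompt = f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    return generate(prompt)


def fake_retrieve(question: str) -> List[str]:
    # Stand-in for a vector DB query.
    return ["Insulin lowers blood glucose levels."]


def fake_generate(prompt: str) -> str:
    # Stand-in for an LLM call.
    return "Insulin" if "Insulin" in prompt else "Unknown"


answer = ask_question("Which hormone lowers blood glucose?", fake_retrieve, fake_generate)
```

Passing the two steps in as functions also makes it straightforward to swap in the real retriever and model later, or to wrap each step with logging.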

Run the pipeline:

Humanloop Integration

Now that we have a simple RAG app, we’ll instrument it with Humanloop and evaluate how well it works.

Initialize the Humanloop SDK:

The first thing we’re going to do is create a Humanloop Flow to log the questions and answers generated by our pipeline to Humanloop. We’ll then run our RAG system over a dataset and score each of the flow-logs to understand how well our system works.

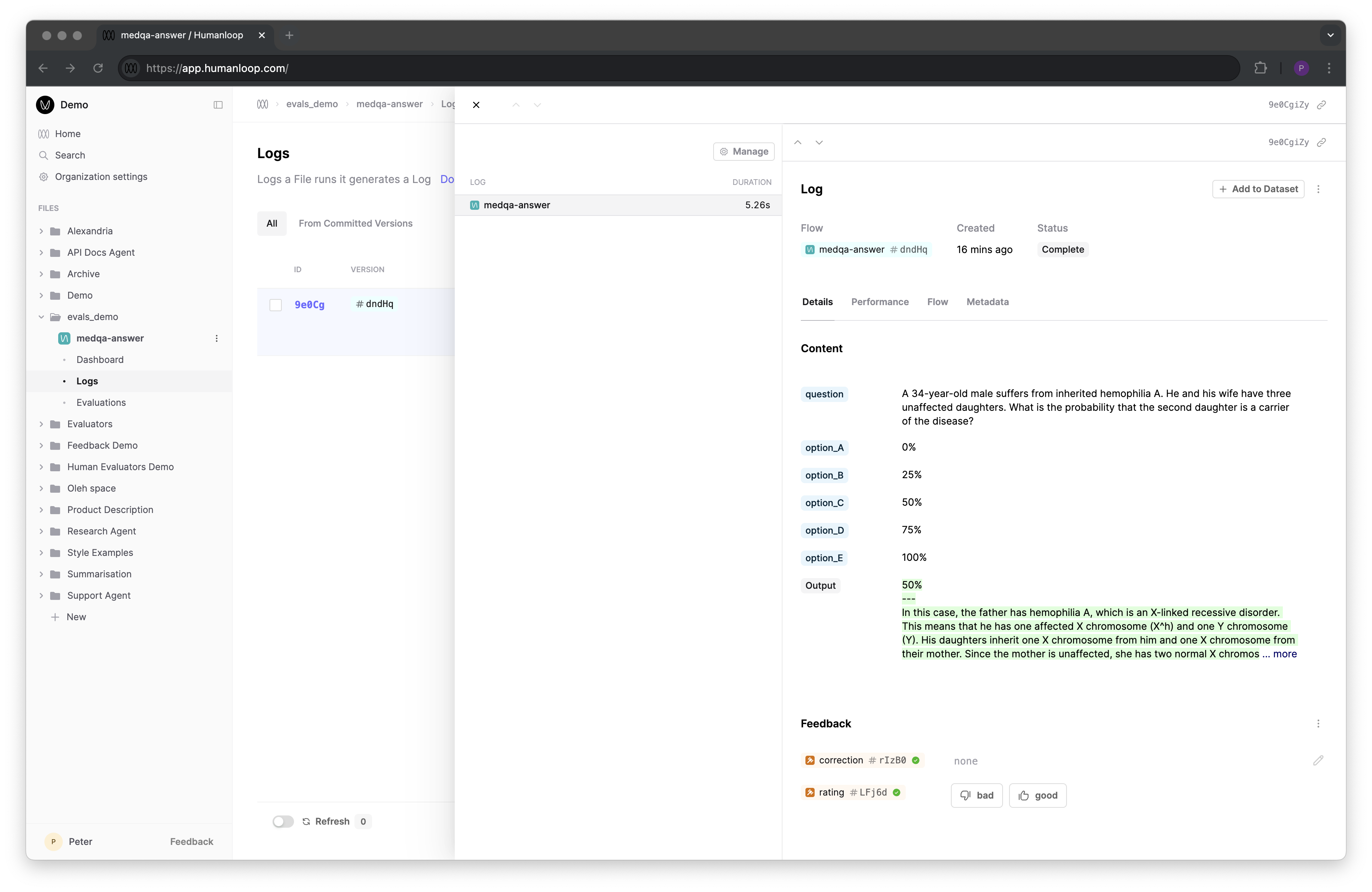

After running this pipeline, you will now see your Flow logs in your Humanloop workspace. If you make changes to your attributes in code and re-run the pipeline, you will see a new version of the Flow created in Humanloop.

Create a Dataset

We upload a test dataset to Humanloop:
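Before uploading, each row of the test set has to be shaped into a datapoint. The sketch below assumes a datapoint is a dictionary with `inputs` and `target` entries and that rows have `question` and `answer` columns; both the field names and the helper `to_datapoints` are assumptions, so check them against the cookbook and the SDK reference. The upload call itself is left out.

```python
def to_datapoints(rows):
    """Convert raw rows into datapoint dictionaries (assumed shape)."""
    return [
        {
            "inputs": {"question": row["question"]},
            "target": {"output": row["answer"]},
        }
        for row in rows
    ]


# Hypothetical example row, not taken from MedQA.
rows = [{"question": "Which hormone lowers blood glucose?", "answer": "Insulin"}]
datapoints = to_datapoints(rows)
```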

Set up Evaluators

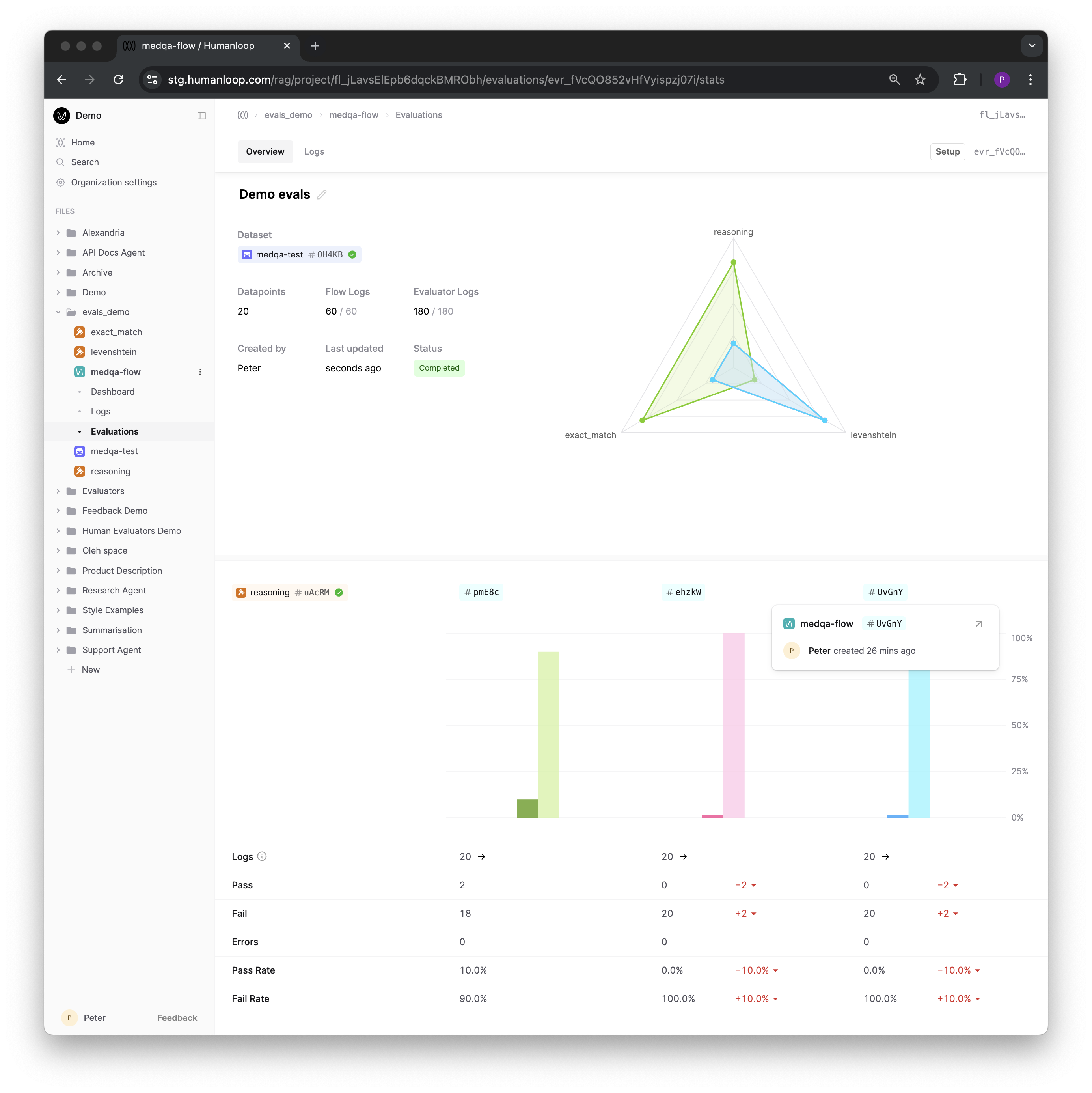

Our dataset has human ground-truth answers we can compare against. The AI answers are very unlikely to match the human answers exactly, but we can measure how close they are using the “levenshtein-distance” evaluator. The code for this evaluator is in the cookbook. We can run the evaluator locally, but if we upload it to Humanloop, Humanloop can run the evaluation for us, and this can be integrated into CI/CD.
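For reference, Levenshtein distance counts the minimum number of single-character insertions, deletions, and substitutions needed to turn one string into another. The following is a minimal sketch of the standard dynamic-programming algorithm, not the cookbook's evaluator; the function names, and the normalized variant in particular, are illustrative assumptions.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character edits needed to turn a into b."""
    if len(a) < len(b):
        a, b = b, a  # keep the shorter string in the inner loop
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            current.append(
                min(
                    previous[j] + 1,         # deletion
                    current[j - 1] + 1,      # insertion
                    previous[j - 1] + cost,  # substitution or match
                )
            )
        previous = current
    return previous[-1]


def normalized_similarity(a: str, b: str) -> float:
    """Scale the distance to a 0..1 score, where 1.0 means identical strings."""
    longest = max(len(a), len(b))
    return 1.0 if longest == 0 else 1.0 - levenshtein(a, b) / longest
```

A raw distance grows with answer length, so a normalized score can be easier to compare across datapoints with answers of different sizes.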

Create and Run Your Evaluation

Now that we have our Flow, our Dataset, and our Evaluators, we can create and run an evaluation.

Get results and URL:

Look at the results of the evaluation on Humanloop by following the URL. You should be able to see how accurate this version of our system is, and as you make changes you’ll be able to compare the different versions.

Logging the full trace

One limitation of our evaluation so far is that we’ve measured the app end-to-end, but we don’t know how the different components contribute to performance. If we really want to improve our app, we’ll need to understand how each step performs. To log the full trace of events, including separate Tool and Prompt steps:
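As a rough, library-agnostic illustration of what a full trace captures, the sketch below records the retrieval step and the generation step separately, each with its own inputs and output. This is not the Humanloop SDK: `Step`, `Trace`, and `traced_ask` are hypothetical names, and the retriever and model are passed in as plain functions.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class Step:
    kind: str  # "tool" or "prompt"
    name: str
    inputs: Dict[str, Any]
    output: Any


@dataclass
class Trace:
    question: str
    steps: List[Step] = field(default_factory=list)
    output: Any = None

    def record(self, kind: str, name: str, inputs: Dict[str, Any], output: Any) -> Any:
        """Append a step to the trace and pass its output through."""
        self.steps.append(Step(kind, name, inputs, output))
        return output


def traced_ask(
    question: str,
    retrieve: Callable[[str], List[str]],
    generate: Callable[[str], str],
) -> Trace:
    """Run retrieval and generation, recording each as a separate step."""
    trace = Trace(question=question)
    docs = trace.record("tool", "retrieval", {"query": question}, retrieve(question))
    prompt = "Context:\n" + "\n".join(docs) + f"\n\nQuestion: {question}"
    trace.output = trace.record("prompt", "answer", {"prompt": prompt}, generate(prompt))
    return trace
```

With per-step records like these, a poor final answer can be traced back to either irrelevant retrieved context or a weak generation given good context.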

This concludes the Humanloop RAG Evaluation walkthrough. You’ve learned how to integrate Humanloop into your RAG pipeline, set up logging, create datasets, configure evaluators, run evaluations, and log the full trace of events including Tool and Prompt steps.