May

Cohere

_May 23rd, 2023_

We’ve just added support for Cohere to Humanloop!

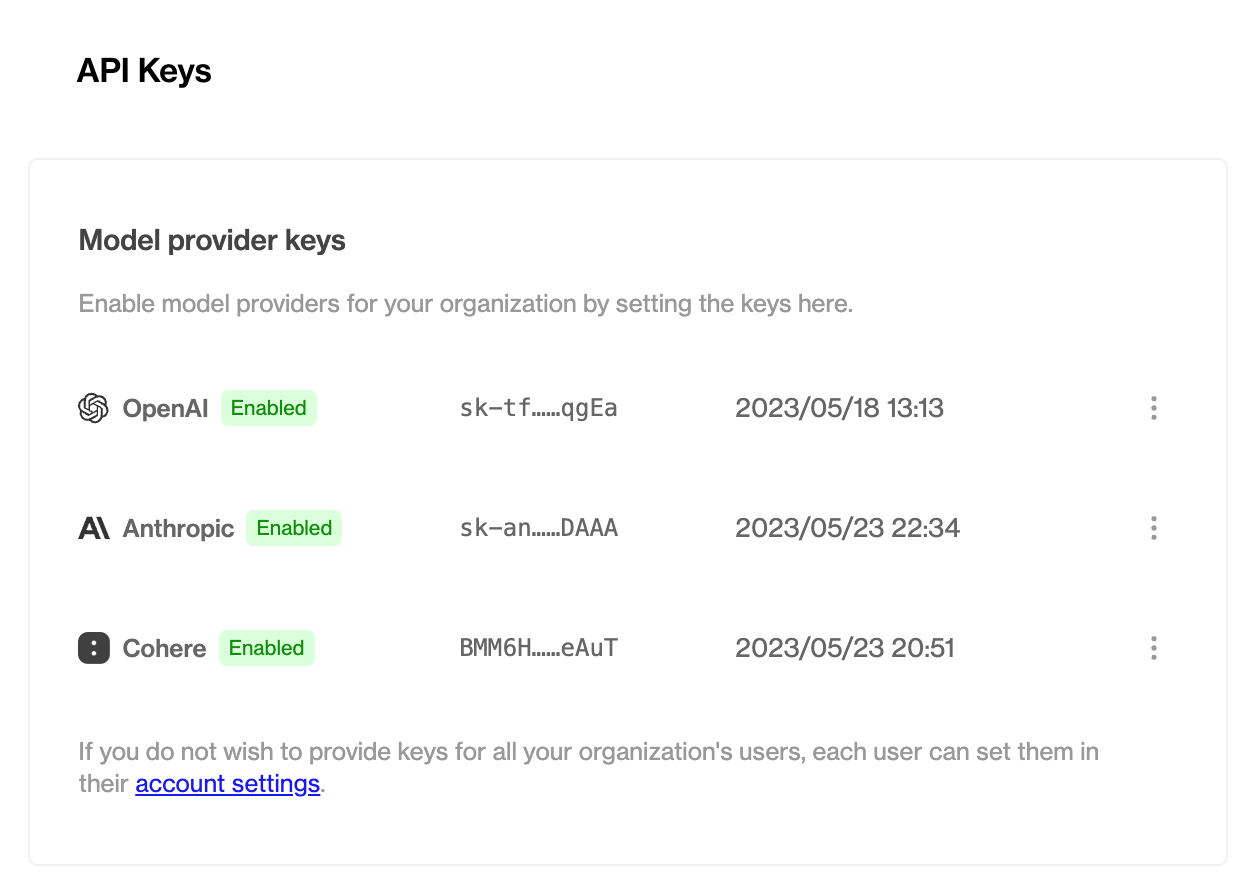

This update adds Cohere models to the playground and your projects: just add your Cohere API key in your organization’s settings. As with other providers, each user in your organization can also set a personal override API key, stored locally in the browser, for use in Cohere requests from the playground.

Enabling Cohere for your organization

Working with Cohere models

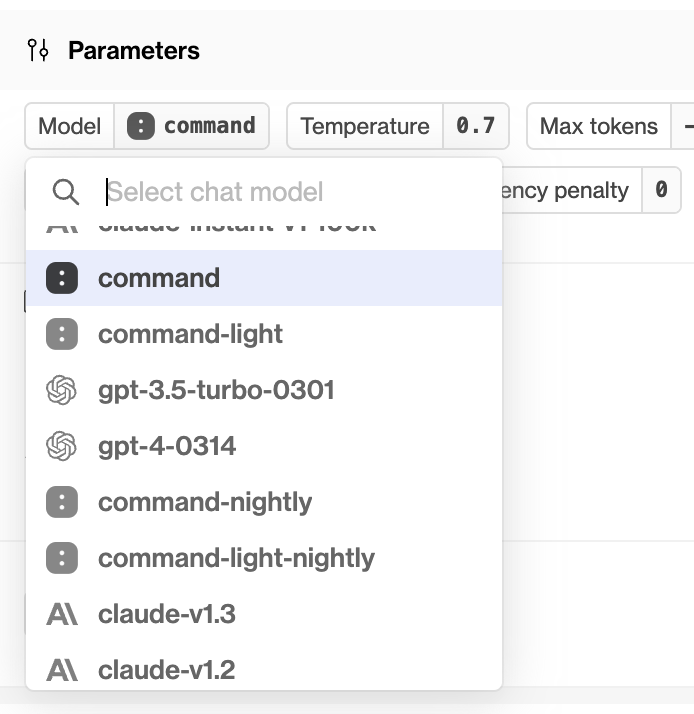

Once you’ve successfully enabled Cohere for your organization, you’ll be able to access it through the playground and in your projects, in exactly the same way as your existing OpenAI and/or Anthropic models.

REST API and Python / TypeScript support

As with other model providers, once you’ve set up a Cohere-backed model config, you can call it with the Humanloop REST API or our SDKs.

If you don’t provide a Cohere API key in the provider_api_keys field of a request, the request falls back on the organization-level key you stored in your settings.
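The fallback rule for API requests can be modelled as a simple precedence check. The sketch below is a hypothetical illustration of that rule, not Humanloop code: the function name resolve_provider_key and the assumption that provider_api_keys is keyed by a provider name such as "cohere" are ours.

```python
from typing import Mapping, Optional


def resolve_provider_key(
    provider: str,
    provider_api_keys: Optional[Mapping[str, str]],
    org_keys: Mapping[str, str],
) -> str:
    """Return the API key a request would use for `provider`.

    Hypothetical model of the documented behaviour: a key supplied in the
    request's provider_api_keys wins; otherwise the organization-level key
    stored in settings is used. The "cohere" key name is an assumption.
    """
    # 1. A non-empty request-level key takes precedence.
    if provider_api_keys and provider_api_keys.get(provider):
        return provider_api_keys[provider]
    # 2. Otherwise fall back on the stored organization-level key.
    try:
        return org_keys[provider]
    except KeyError:
        raise LookupError(
            f"No {provider} API key in the request or organization settings"
        ) from None


if __name__ == "__main__":
    org_keys = {"cohere": "org-cohere-key"}
    print(resolve_provider_key("cohere", {"cohere": "request-key"}, org_keys))
    print(resolve_provider_key("cohere", None, org_keys))
```

Raising an error when neither key exists mirrors what you would expect from a real request with no usable credentials, but the exact error the API returns in that case is not described here.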

Improved Python SDK

_May 17th, 2023_

We’ve just released a new version of our Python SDK supporting our v4 API!

This brings support for:

- 💬 Chat mode: humanloop.chat(...)
- 📥 Streaming support: humanloop.chat_stream(...)
- 🕟 Async methods: humanloop.acomplete(...)
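Streaming and async methods change how you consume responses rather than what you ask for. The self-contained sketch below shows both patterns without calling Humanloop: fake_chat_stream and fake_acomplete are hypothetical stand-ins that only imitate the general shape of humanloop.chat_stream(...) and humanloop.acomplete(...), so check the SDK reference for their real parameters and return types.

```python
import asyncio
from typing import Iterator, List


# Hypothetical stand-ins: they mimic only the *shape* of streaming and async
# calls. They are NOT the Humanloop SDK's real signatures or return types.
def fake_chat_stream(prompt: str) -> Iterator[str]:
    """Yield a response piece by piece, as a streaming endpoint would."""
    for token in f"Echo: {prompt}".split():
        yield token


async def fake_acomplete(prompt: str, delay: float = 0.1) -> str:
    """Simulate a network-bound completion that can be awaited."""
    await asyncio.sleep(delay)
    return f"Echo: {prompt}"


def consume_stream(prompt: str) -> str:
    # Streaming: handle each chunk as soon as it arrives instead of
    # waiting for the whole reply (a UI would render each chunk here).
    chunks = []
    for chunk in fake_chat_stream(prompt):
        chunks.append(chunk)
    return " ".join(chunks)


async def run_concurrently(prompts: List[str]) -> List[str]:
    # Async: start all requests at once; the total wait is roughly one
    # delay rather than len(prompts) delays back to back.
    return list(await asyncio.gather(*(fake_acomplete(p) for p in prompts)))


if __name__ == "__main__":
    print(consume_stream("hello world"))
    print(asyncio.run(run_concurrently(["a", "b", "c"])))
```

The async pattern pays off when you have many independent requests (for example, batch evaluations); for a single interactive reply, streaming is usually the more noticeable improvement.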

https://pypi.org/project/humanloop/

Installation

pip install --upgrade humanloop

Example usage

Migration from 0.3.x

If you’re upgrading from an older SDK version, note that this release introduces some breaking changes. In brief:

- The client initialization step hl.init(...) is now humanloop = Humanloop(...).
- Previously, provider_api_keys could be provided in hl.init(...). They should now be provided when constructing the Humanloop(...) client.
- Previously, hl.generate(...) supported several call signatures. These have now been split into individual methods for clarity. The main ones are:
  - humanloop.complete(project, model_config={...}, ...) for a completion with the specified model config parameters.
  - humanloop.complete_deployed(project, ...) for a completion with the project’s active deployment.
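When porting many legacy calls, it can help to classify each one by the two cases described above. The helper below is a hypothetical migration aid, not part of the SDK, and it assumes that a legacy hl.generate(...) call without a model_config relied on the project’s active deployment.

```python
from typing import Any, Dict


def v4_method_for(generate_kwargs: Dict[str, Any]) -> str:
    """Suggest which v4 method replaces a legacy hl.generate(...) call.

    Hypothetical helper covering only the two cases listed above:
    - an explicit model_config -> humanloop.complete(...)
    - no model_config (assumed to mean "use the active deployment")
      -> humanloop.complete_deployed(...)
    """
    if generate_kwargs.get("model_config") is not None:
        return "complete"
    return "complete_deployed"


if __name__ == "__main__":
    legacy_calls = [
        {"project": "my-project", "model_config": {"model": "some-model"}},
        {"project": "my-project"},
    ]
    for kwargs in legacy_calls:
        print(kwargs, "->", "humanloop." + v4_method_for(kwargs) + "(...)")
```

Other legacy signatures (for example chat-style calls) are not covered by this sketch and would need their own mapping.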