April

Agents (beta)

April 29th, 2025

We’ve added beta support for Agents, enabling you to build, version-control, and run Agents directly in Humanloop. A Humanloop Agent is a multi-step AI system that leverages an LLM, external information sources, and tool calling to accomplish complex tasks automatically. At its core is an orchestrator LLM that calls tools to complete its task.

- Agent files are versioned the same way as our other Files (e.g. Prompts, Tools, etc.).

- Agents can use function-calling by linking Tools or Prompts.

- Humanloop can run Agents, automatically executing any called functions.

- The full trace of events when an Agent is called is captured and logged in Humanloop.

- Agents can be evaluated.
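As a rough illustration of the orchestrator pattern described above, here is a minimal local sketch of an agent loop: an LLM is called, any tool calls it requests are executed, the results are fed back, and every step is appended to a trace. This is not Humanloop's implementation. `fake_llm` is a hypothetical stand-in for a real model call, and the `TOOLS` registry, message shapes, and `run_agent` helper are assumptions made for the example.

```python
import json
from typing import Any, Callable

# Hypothetical tool registry: maps a tool name to a plain Python function.
TOOLS: dict[str, Callable[..., Any]] = {
    "add": lambda a, b: a + b,
}


def fake_llm(messages: list[dict]) -> dict:
    """Stand-in for the orchestrator LLM (an assumption, not a real model call).

    On the user's turn it requests one tool call; once a tool result is
    present it answers using that result.
    """
    last = messages[-1]
    if last["role"] == "user":
        return {"role": "assistant", "content": None,
                "tool_calls": [{"id": "call_1", "name": "add",
                                "arguments": json.dumps({"a": 2, "b": 3})}]}
    return {"role": "assistant", "content": f"The answer is {last['content']}.",
            "tool_calls": []}


def run_agent(user_input: str, max_iterations: int = 5) -> tuple[str, list[dict]]:
    """Loop until the LLM stops requesting tools, recording each event in a trace."""
    messages = [{"role": "user", "content": user_input}]
    trace: list[dict] = []
    for _ in range(max_iterations):
        reply = fake_llm(messages)
        messages.append(reply)
        trace.append({"event": "llm_call", "output": reply})
        if not reply["tool_calls"]:
            return reply["content"], trace  # no more tools requested: done
        for call in reply["tool_calls"]:
            args = json.loads(call["arguments"])
            result = TOOLS[call["name"]](**args)  # execute the requested tool
            trace.append({"event": "tool_call", "name": call["name"],
                          "args": args, "result": result})
            messages.append({"role": "tool", "tool_call_id": call["id"],
                             "content": str(result)})
    raise RuntimeError("Agent did not finish within max_iterations")


answer, trace = run_agent("What is 2 + 3?")
print(answer)
print([event["event"] for event in trace])
```

The `max_iterations` cap is a design choice worth keeping in any such loop, since an LLM that keeps requesting tools would otherwise never terminate.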

For an in-depth introduction to Agents, see our Key Concepts documentation. To get started with Agents, follow our Agents quickstart guide.

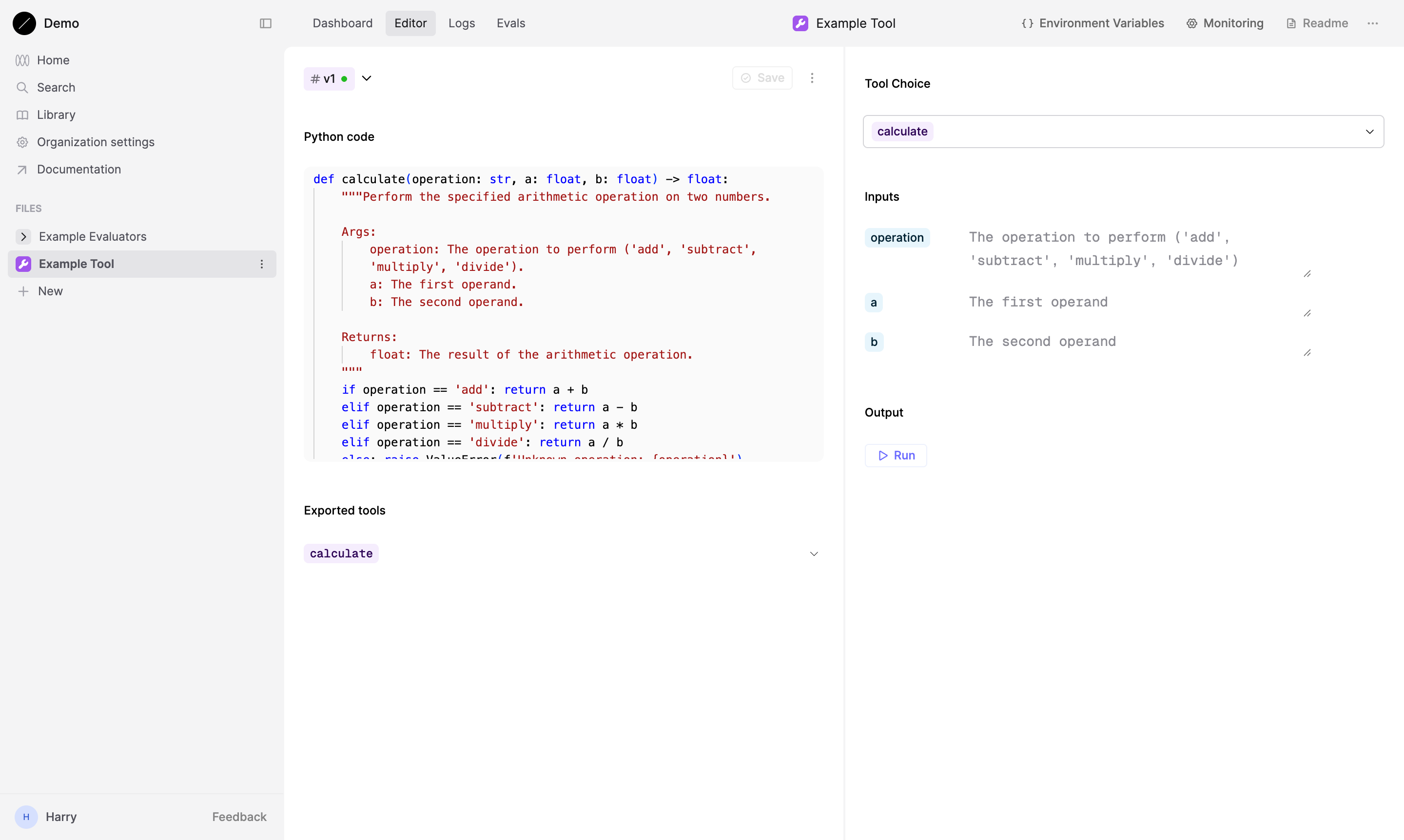

Tool Runtime

April 25th, 2025

We’ve added a new Python Tool to Humanloop, enabling you to define Tools with Python code that Humanloop can execute. This allows you to build powerful Tools that can access external data sources or perform complex computations. These Tools, which are run in Humanloop’s secure runtime environment, are especially useful in extending the capabilities of Agents.

To create a Python tool, click New File in the sidebar or on the homepage. Select Tool and then Python. You will be brought to the Tool Editor, which contains a pre-filled example Python function.

You can run the Tool in the Editor by entering the required parameters and clicking Run.
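The Tool Editor's pre-filled example is not reproduced here. As a rough illustration only, a Python Tool pairs a function with a description of its parameters that an LLM can read. The sketch below assumes a JSON Schema-style parameter definition; the names `word_count`, `TOOL_SCHEMA`, and `run_tool` are hypothetical, and the code runs locally rather than in Humanloop's runtime.

```python
import json

# Hypothetical JSON Schema-style definition describing the Tool's parameters.
TOOL_SCHEMA = {
    "name": "word_count",
    "description": "Count the words in a piece of text.",
    "parameters": {
        "type": "object",
        "properties": {"text": {"type": "string"}},
        "required": ["text"],
    },
}


def word_count(text: str) -> int:
    """Return the number of whitespace-separated words in `text`."""
    return len(text.split())


def run_tool(arguments_json: str) -> int:
    """Mimic clicking Run: parse the parameters, check required ones, call the function."""
    args = json.loads(arguments_json)
    missing = [p for p in TOOL_SCHEMA["parameters"]["required"] if p not in args]
    if missing:
        raise ValueError(f"Missing required parameters: {missing}")
    return word_count(**args)


print(run_tool('{"text": "Agents can call Python Tools"}'))
```

Keeping the parameter description next to the function means the same definition can both validate inputs when the Tool is run by hand and tell an Agent's LLM how to call it.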

For more information on how to use Python Tools with Agents, follow our Agent evaluation tutorial, which uses a Python Tool to fetch search results.

We’ve also taken the chance to add some example Python Tools for you to try out; check them out in the Library.

Support for Anthropic Thinking

April 20th, 2025

Humanloop now supports Anthropic’s extended thinking feature in the UI and the API.

ChatMessage objects used in /prompts/call and /prompts/log now have a thinking attribute that contains the reasoning blocks returned by Anthropic.
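The exact shape of the `thinking` attribute is not spelled out here. The sketch below assumes it holds a list of blocks modelled on Anthropic's thinking content blocks (`type`, `thinking`, `signature`, plus `redacted_thinking` blocks that carry no readable text). The message dict and the `collect_reasoning` helper are hypothetical, shown only to illustrate how reasoning could be read off a returned message.

```python
from typing import Any


def collect_reasoning(message: dict[str, Any]) -> str:
    """Join the text of all readable reasoning blocks on a chat message.

    Assumes `message["thinking"]` is a list of dicts shaped like Anthropic's
    thinking blocks; redacted blocks (no readable text) are skipped.
    """
    blocks = message.get("thinking") or []
    texts = [b["thinking"] for b in blocks if b.get("type") == "thinking"]
    return "\n\n".join(texts)


# Hypothetical assistant message, as it might appear in a /prompts/call response.
example_message = {
    "role": "assistant",
    "content": "The answer is 12.",
    "thinking": [
        {"type": "thinking", "thinking": "3 boxes of 4 items each.",
         "signature": "example-signature"},
        {"type": "redacted_thinking", "data": "example-data"},
        {"type": "thinking", "thinking": "3 * 4 = 12.",
         "signature": "example-signature"},
    ],
}

print(collect_reasoning(example_message))
```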

To try it out, add your Anthropic API key, head to the Playground, choose a Claude 3.7 Sonnet model, and enable the Reasoning effort parameter.

For more details, check out the Anthropic docs on extended thinking.

Gemini 2.5 Flash

April 19th, 2025

Google’s Gemini 2.5 Flash (gemini-2.5-flash-preview-04-17) is now available on Humanloop, offering enhanced reasoning capabilities with optimized performance. Learn more on the Google DeepMind website.

o3 and o4-mini Models

OpenAI’s new reasoning models, o3 and o4-mini, are now available on Humanloop. These models excel at complex reasoning tasks including coding, mathematics, and scientific analysis:

- o3: OpenAI’s most powerful reasoning model, delivering exceptional performance across coding, math, science, and vision tasks

- o4-mini: A faster, cost-efficient alternative that maintains strong capabilities while being more accessible

- Enhanced Vision: Both models feature advanced image understanding capabilities

- Cost-Effective: Competitive pricing with cached input support for optimized costs

Read more here.

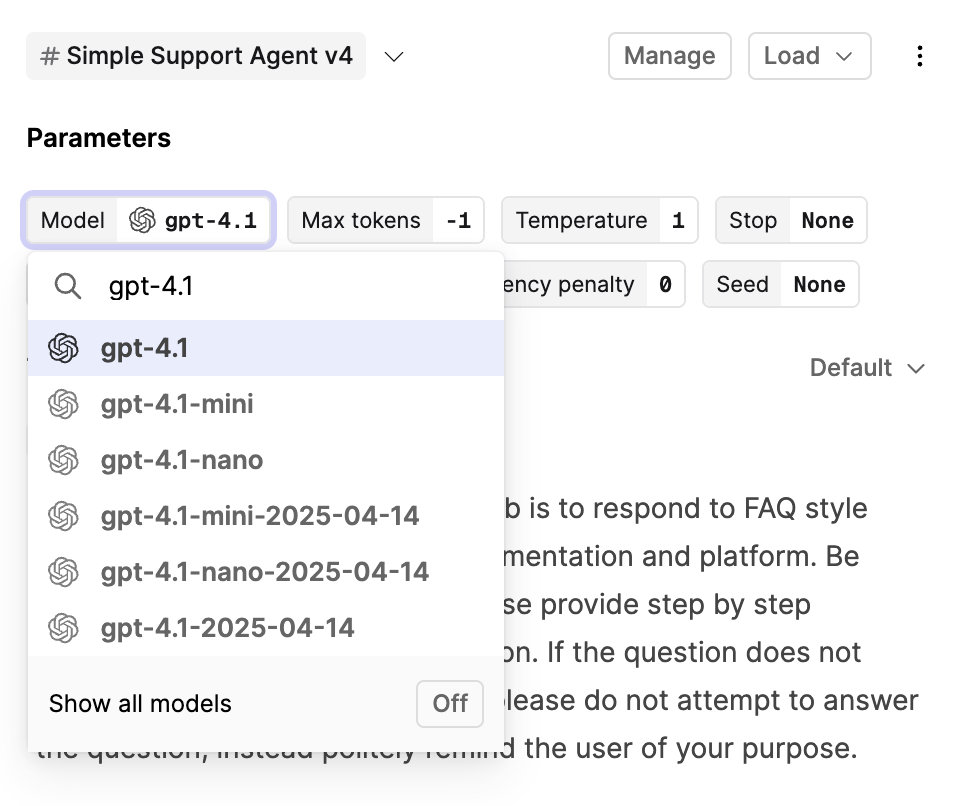

GPT-4.1 Model Family

April 14th, 2025

OpenAI’s GPT-4.1 model family is now available on Humanloop, including GPT-4.1, GPT-4.1 Mini, and GPT-4.1 Nano variants.

The new GPT-4.1 family brings significant improvements across all variants:

- Massive Context Window: All models support up to 1 million tokens

- Enhanced Reliability: Better at following instructions and reduced hallucinations

- Improved Efficiency: GPT-4.1 is 26% less expensive than GPT-4o for median queries

- Specialized Variants: From the full GPT-4.1 to the lightweight Nano version for different use cases

Learn more about the capabilities of these new models on OpenAI’s website.

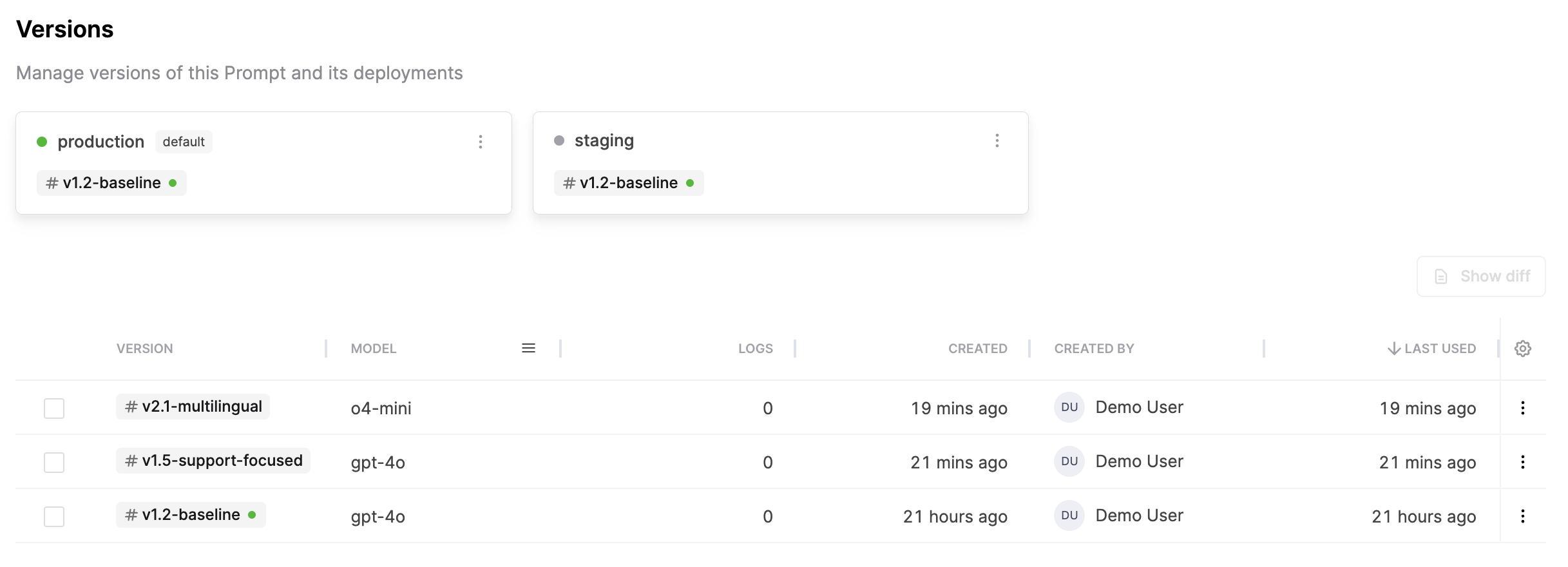

Named Versions and Simplified Saving

April 11th, 2025

We’ve tweaked our versioning system to make it more intuitive and easier to use.

- Named versions: Versions can now be named, making them easier to identify.

- Save new versions: Versions no longer need to be “committed”—they are now simply saved and can be used immediately.

Note: All existing commit messages have been preserved as version descriptions in the new system. Previously committed versions have been automatically named v1, v2, etc., to help you identify them in the new unified list.
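To make the migration rule in the note concrete, here is a small illustrative sketch, not Humanloop's migration code. It assumes versions are ordered oldest first and that versions which were never committed stay unnamed; the `Version` class and `migrate` function are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Version:
    id: str
    committed: bool
    commit_message: Optional[str] = None
    name: Optional[str] = None
    description: Optional[str] = None


def migrate(versions: list[Version]) -> list[Version]:
    """Name committed versions v1, v2, ... and keep commit messages as descriptions.

    `versions` is assumed to be sorted oldest first; uncommitted versions
    are left unnamed in this sketch.
    """
    counter = 0
    for v in versions:
        if v.committed:
            counter += 1
            v.name = f"v{counter}"
            v.description = v.commit_message
    return versions


history = migrate([
    Version("a", True, "Initial prompt"),
    Version("b", False),
    Version("c", True, "Tighten instructions"),
])
print([(v.name, v.description) for v in history])
```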

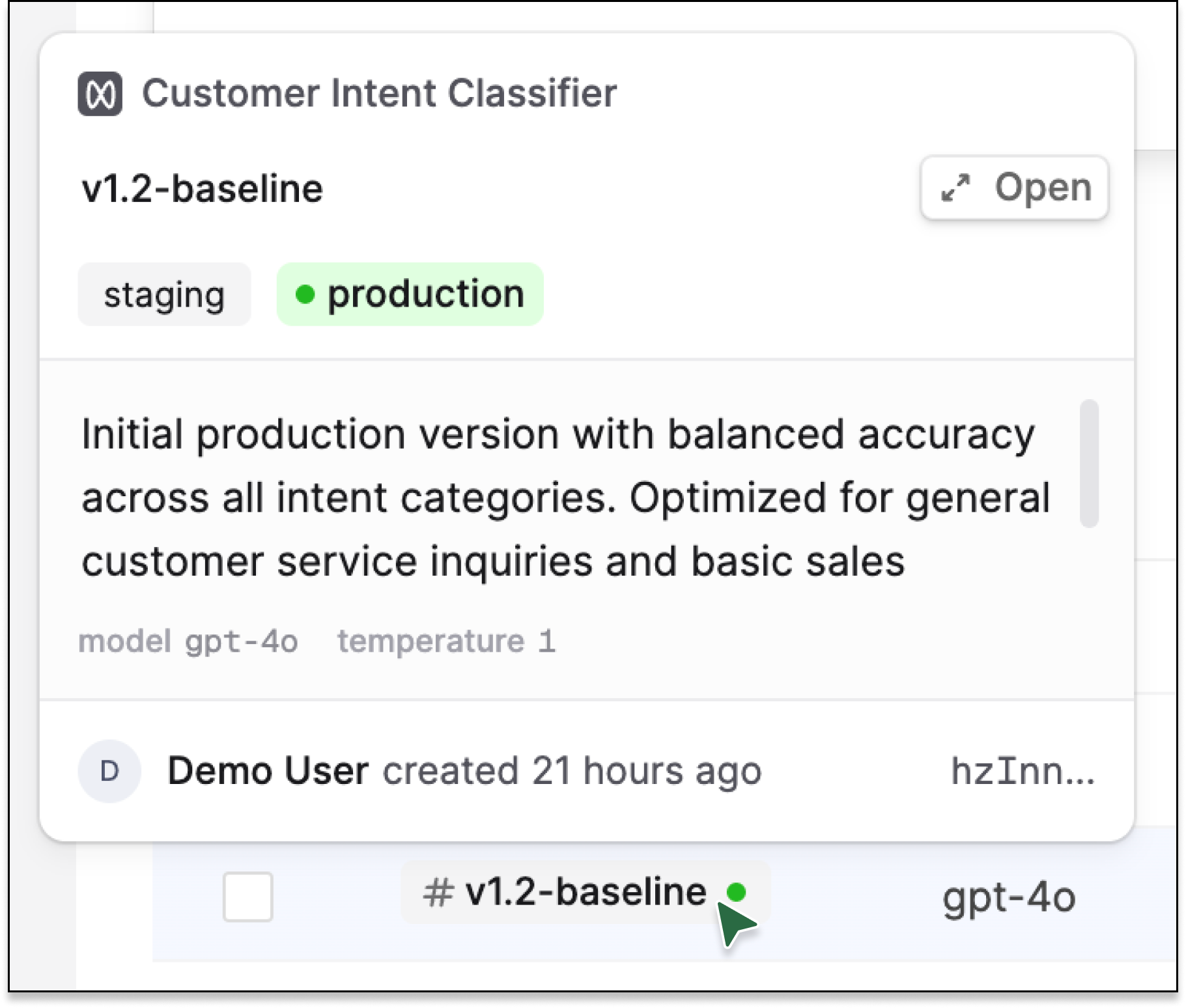

Version Details Popover

Hover over version labels to view metadata and edit names or descriptions directly from anywhere in the platform.

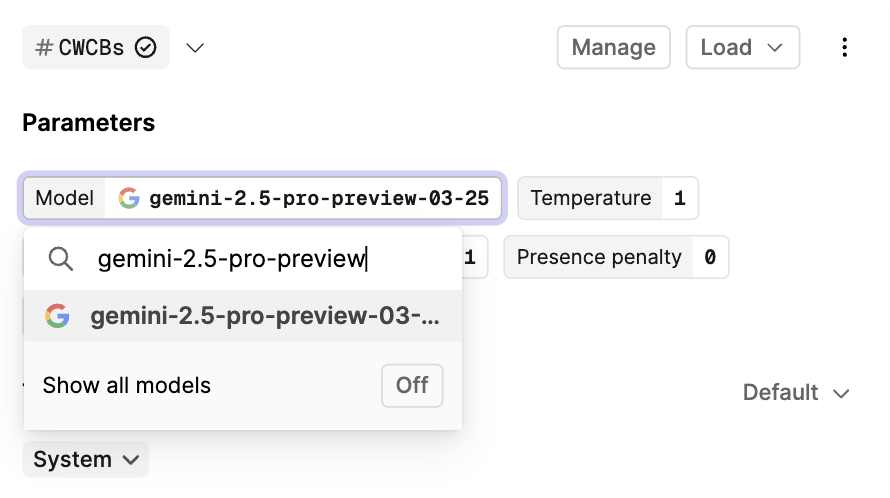

Gemini 2.5 Pro preview

April 4th, 2025

Google’s Gemini 2.5 Pro preview is now available on Humanloop.

Gemini 2.5 Pro Preview delivers state-of-the-art performance in mathematics, science, and code generation tasks, and supports a 1 million token context window. Learn more on the Google DeepMind website.