Create your first GPT-4 App

In this tutorial, you’ll use Humanloop to quickly build a GPT-4 chat app that explains topics in the style of different experts.

At the end of this tutorial, you’ll have created your first GPT-4 app. You’ll also have learned how to:

- Create a Prompt

- Use the Humanloop SDK to call OpenAI GPT-4 and log your results

- Capture feedback from your end users to evaluate and improve your model

This tutorial picks up where the Quick Start left off. If you’ve already followed the Quick Start, you can skip to step 4 below.

Create the Prompt

Account setup

Create a Humanloop Account

If you haven’t already, create an account or log in to Humanloop.

Add an OpenAI API Key

If you’re the first person in your organization, you’ll need to add an API key to a model provider.

- Go to OpenAI and grab an API key

- In Humanloop Organization Settings set up OpenAI as a model provider.

Running Prompts in the Editor uses your OpenAI credits, just as the OpenAI Playground does. Keep your API keys for Humanloop and the model providers private.

Get Started

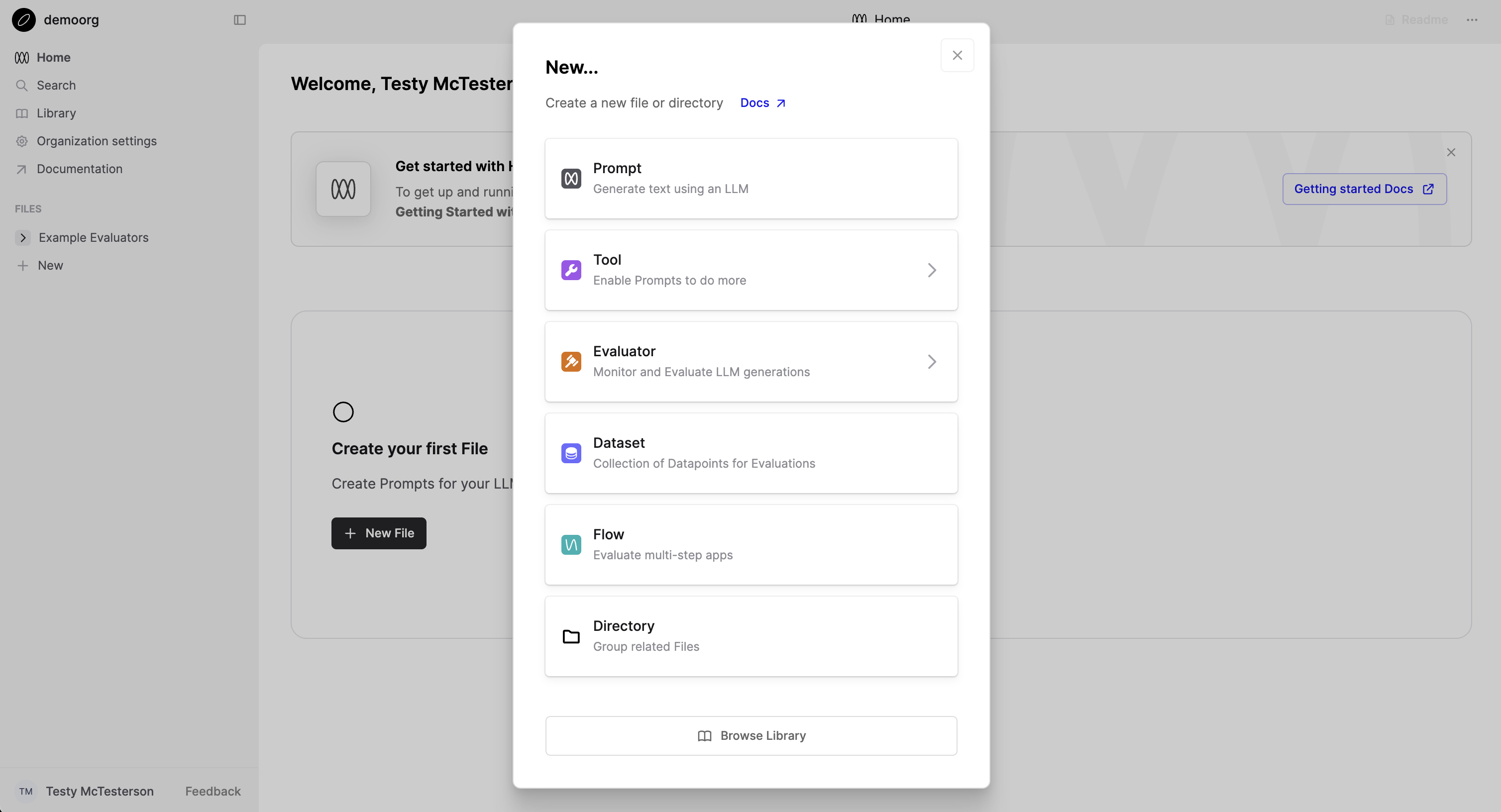

Create a Prompt File

When you first open Humanloop, you’ll see your File navigation on the left. Click ‘+ New’ and create a Prompt.

In the sidebar, rename this file to “Comedian Bot”. You can also do this later.

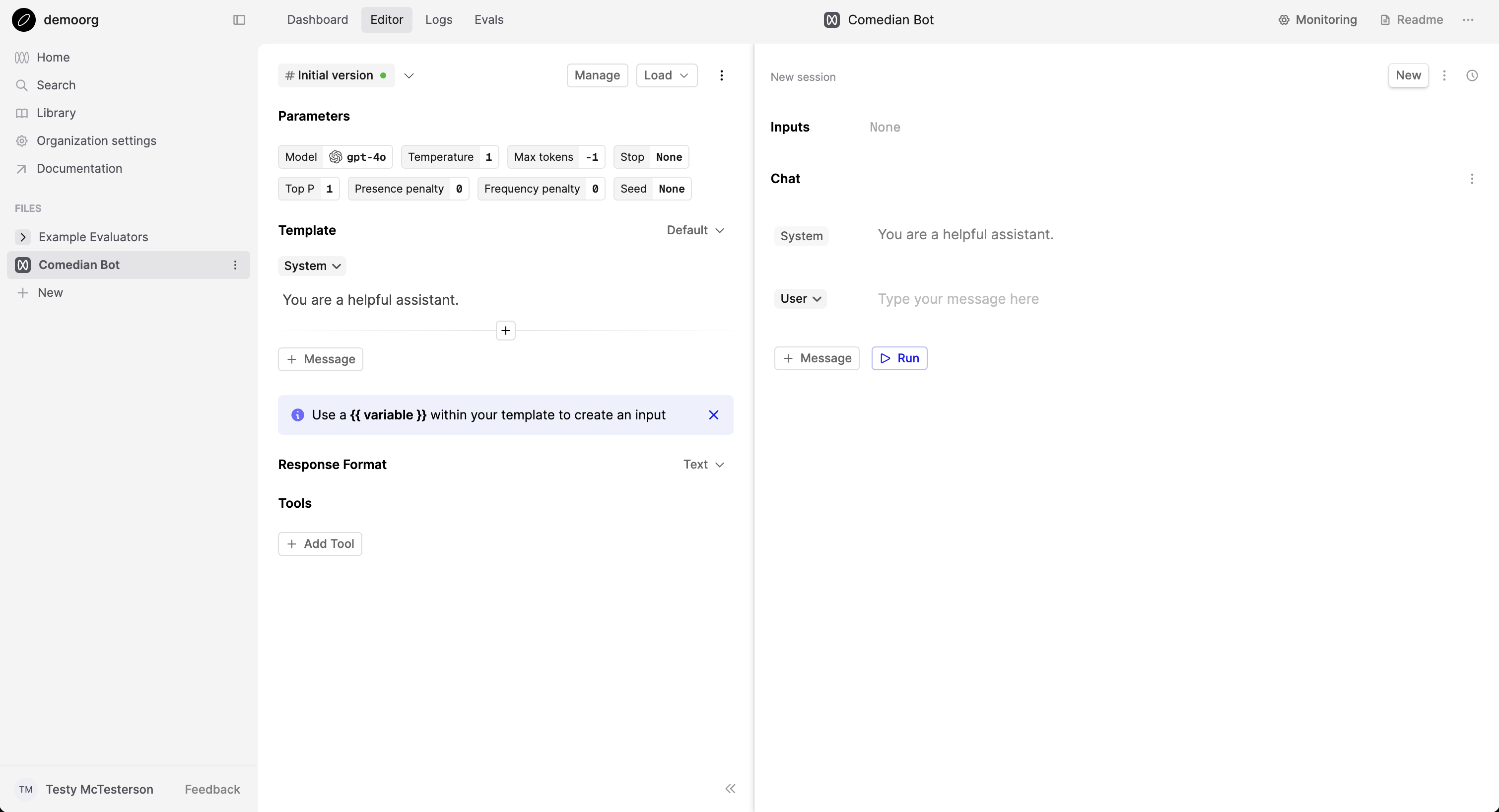

Create the Prompt template in the Editor

The left-hand side of the screen defines your Prompt: parameters such as the model, temperature and template. The right-hand side is a single chat session with this Prompt.

In the editor, update the system message to:

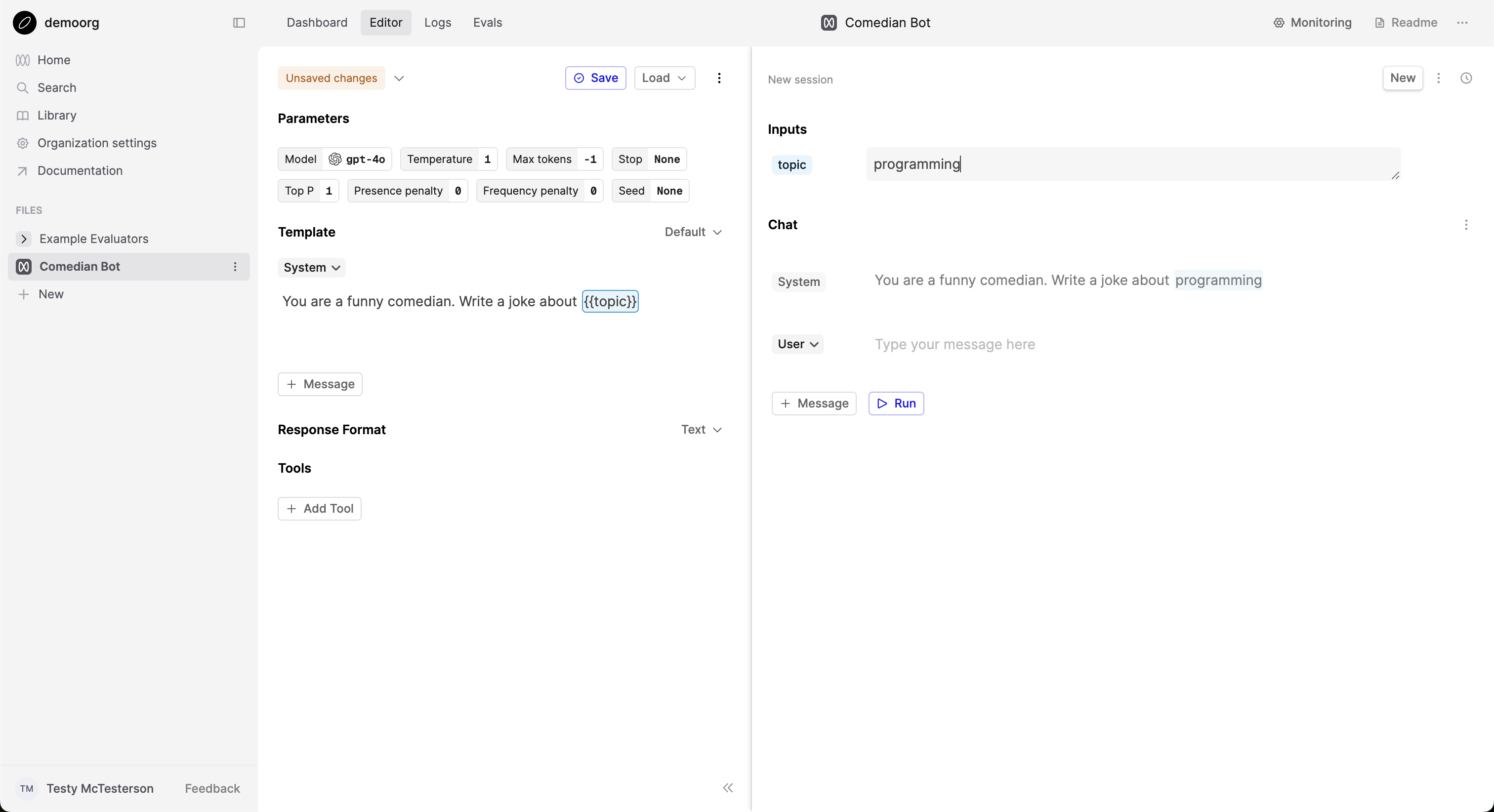

This message forms the chat template. It has an input slot called topic (surrounded by double curly braces) for a value that you provide each time you call this Prompt.
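To make the slot mechanics concrete, here is a minimal Python sketch of how a template with a {{topic}} slot might be filled in before it is sent to the model. The render_template helper and the example template string are hypothetical illustrations, not Humanloop’s own implementation. The sketch assumes slot names are plain identifiers, optionally padded with spaces inside the braces.

```python
import re

# Matches a slot such as {{topic}} or {{ topic }} and captures the slot name.
SLOT_PATTERN = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render_template(template: str, inputs: dict[str, str]) -> str:
    """Replace every {{name}} slot with inputs[name].

    Hypothetical helper for illustration only; raises KeyError if a slot
    in the template has no corresponding input value.
    """

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in inputs:
            raise KeyError(f"missing input for slot '{name}'")
        return inputs[name]

    return SLOT_PATTERN.sub(substitute, template)


if __name__ == "__main__":
    # Hypothetical template; the real system message is in the editor screenshot.
    template = "Tell a joke about {{topic}}."
    print(render_template(template, {"topic": "jogging"}))  # Tell a joke about jogging.
```

Each run with a different topic changes only the slot value. The rest of the template stays fixed, which is what makes the Prompt reusable across inputs.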

On the right-hand side of the page, you’ll now see a box for topic in the Inputs section.

- Add a value for topic, e.g. music, jogging, whatever

- Click Run in the bottom right of the page

This calls OpenAI’s model and returns the assistant response. Feel free to try other values; the model is very funny.

You now have a first version of your prompt that you can use.

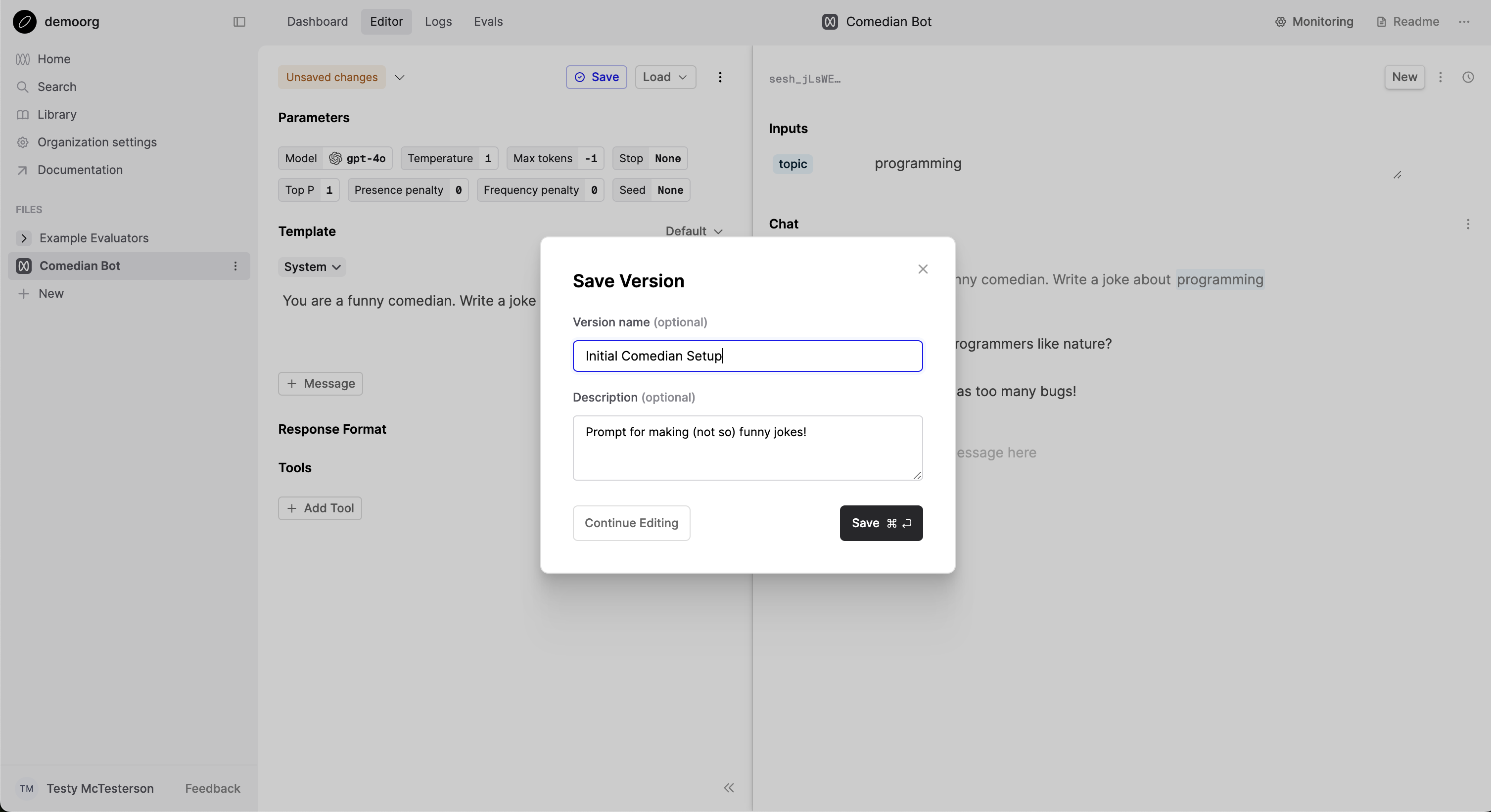

Save your first version of this Prompt

- Click the Save button at the top of the editor

- Enter “Initial Comedian Setup” in the version name field

- Enter “Prompt for making (not so) funny jokes!” in the description field

- Click Save

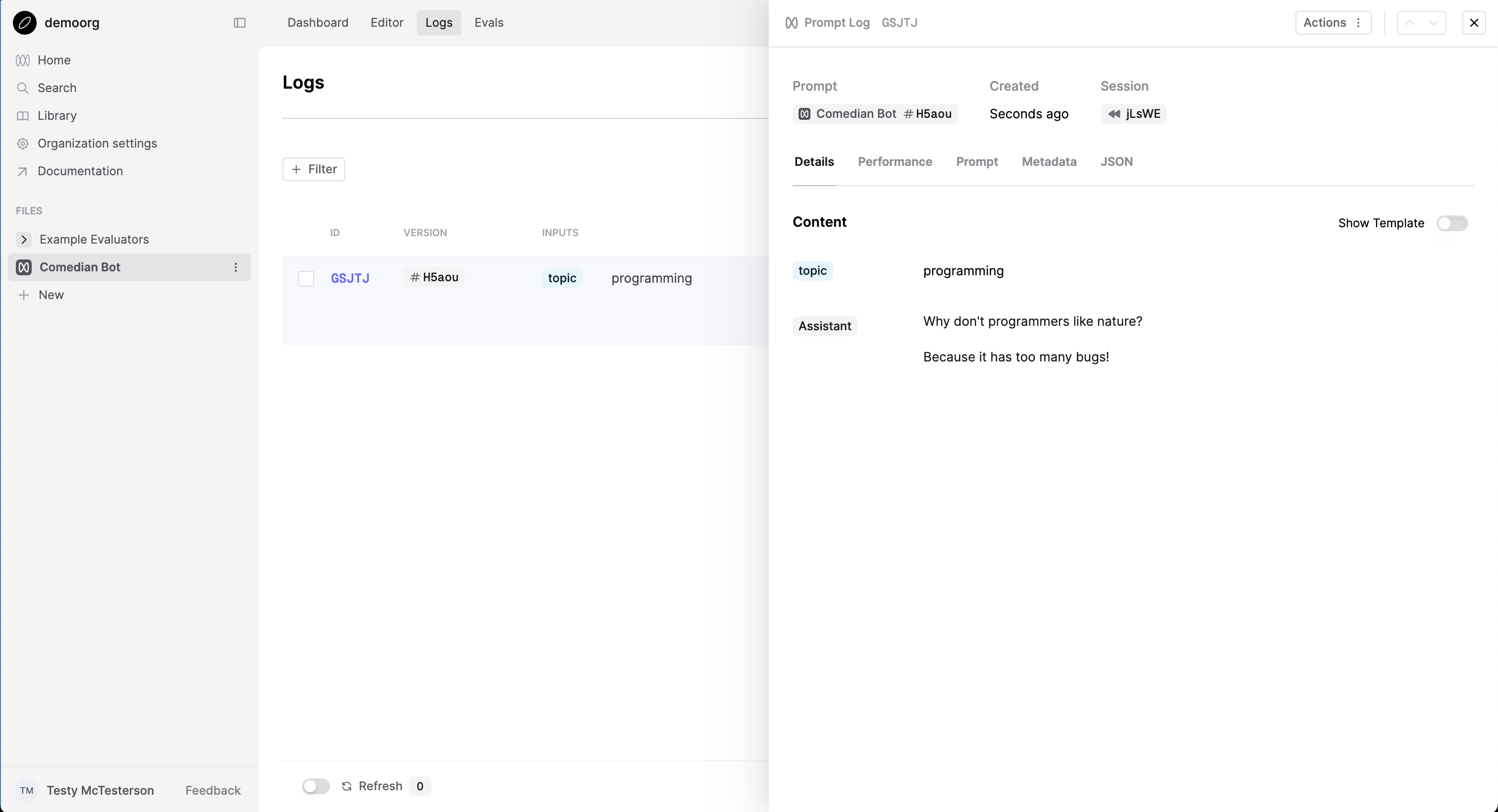

View the logs

Under the Prompt File, click ‘Logs’ to view all the generations from this Prompt.

Click on a row to see which version of the Prompt generated it. From here you can give feedback on that generation, see performance metrics, open the example in the Editor, or add the log to a Dataset.

Call the Prompt in an app

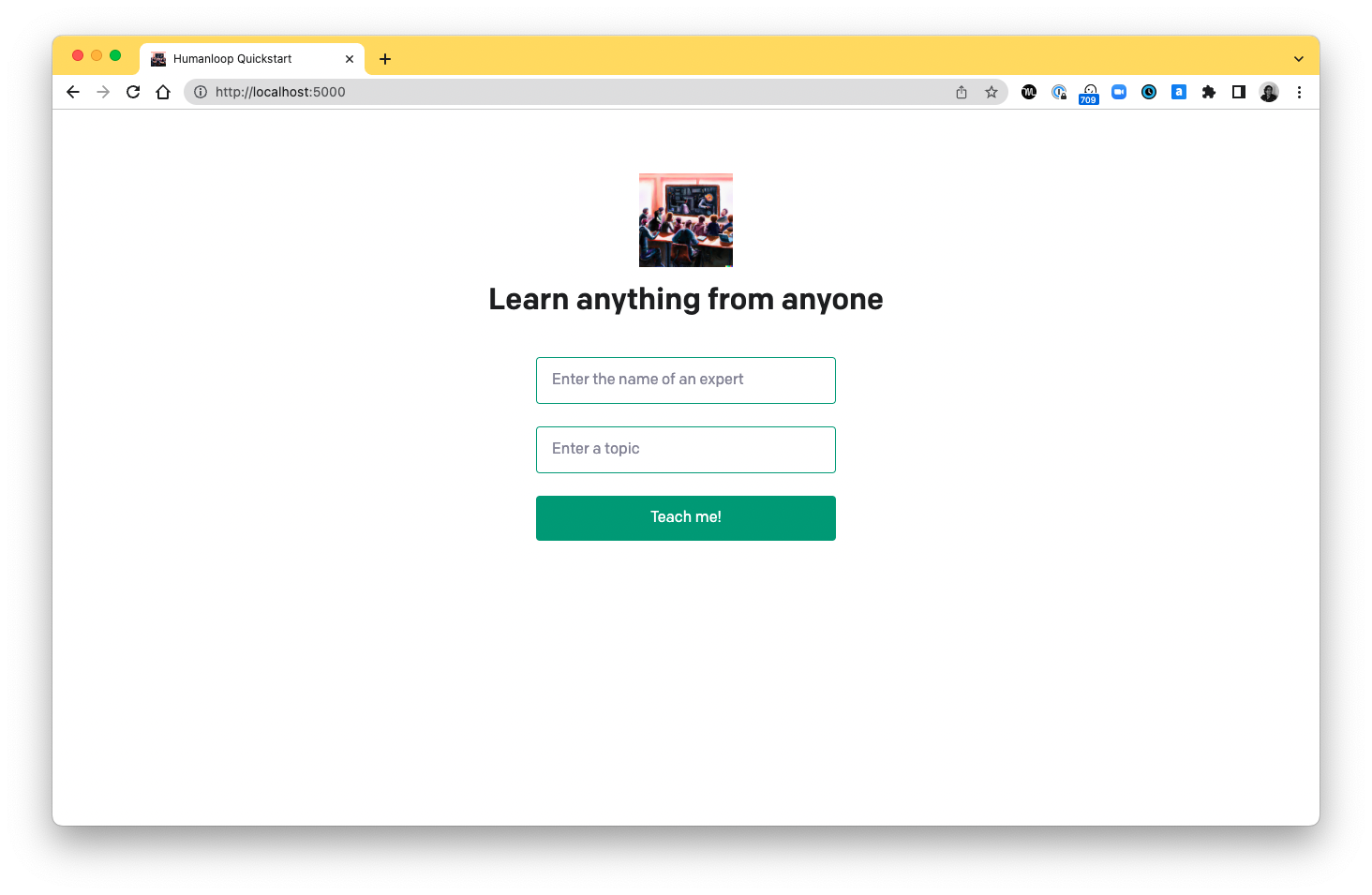

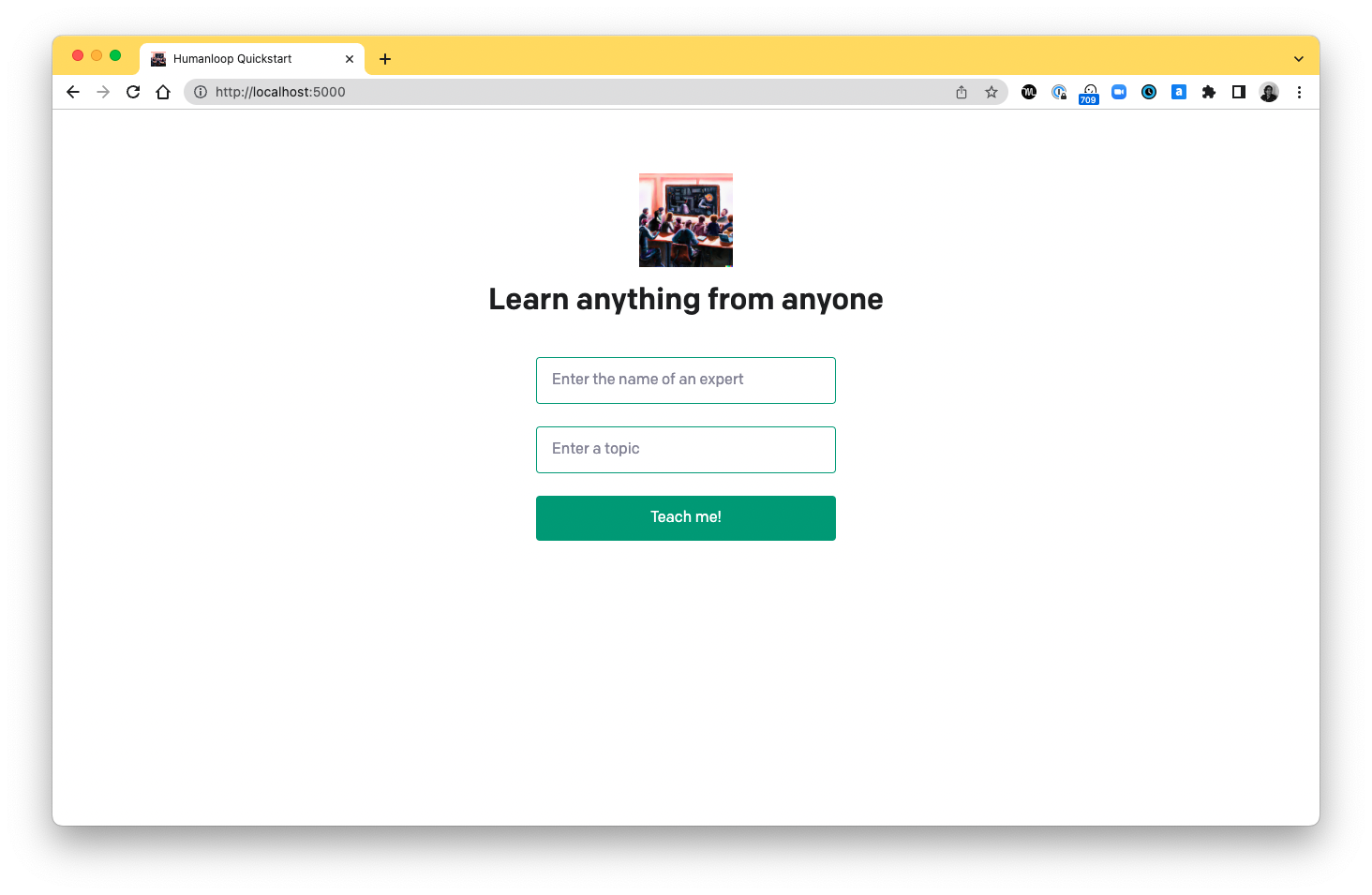

Now that you’ve found a good prompt and settings, you’re ready to build the “Learn anything from anyone” app! We’ve written some code to get you started. Follow the instructions below to download the code and run the app.

Setup

If you don’t have Python 3 installed, install it from here. Then download the code by cloning this repository in your terminal:

If you prefer not to use git, you can alternatively download the code using this zip file.

In your terminal, navigate into the project directory and make a copy of the example environment variables file.

Copy your Humanloop API key and set it as HUMANLOOP_API_KEY in your newly created .env file. Then copy your OpenAI API key and set it as OPENAI_API_KEY.
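You may want to check that both keys are picked up before starting the app. The following stdlib-only Python sketch reads a .env file and confirms that HUMANLOOP_API_KEY and OPENAI_API_KEY are set. It assumes simple KEY=value lines. The app itself may load the file differently (for example with a library such as python-dotenv), so treat load_env and missing_keys as hypothetical helpers.

```python
from pathlib import Path

REQUIRED_KEYS = ("HUMANLOOP_API_KEY", "OPENAI_API_KEY")


def load_env(path: str = ".env") -> dict[str, str]:
    """Parse simple KEY=value lines, skipping blank lines and # comments."""
    values: dict[str, str] = {}
    for raw_line in Path(path).read_text(encoding="utf-8").splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and one pair of matching quotes.
        value = value.strip()
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        values[key.strip()] = value
    return values


def missing_keys(values: dict[str, str]) -> list[str]:
    """Return the required keys that are absent or empty."""
    return [key for key in REQUIRED_KEYS if not values.get(key)]


if __name__ == "__main__":
    env_path = Path(".env")
    if not env_path.exists():
        print("No .env file found in the current directory.")
    else:
        missing = missing_keys(load_env(str(env_path)))
        if missing:
            print("Missing from .env:", ", ".join(missing))
        else:
            print("Both API keys are set.")
```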

Run the app

Run the following commands in your terminal in the project directory to install the dependencies and run the app.

Open http://localhost:5000 in your browser and you should see the app. If you type in the name of an expert, e.g. “Aristotle”, and a topic that they’re famous for, e.g. “ethics”, the app will try to generate an explanation in their style.

Press the thumbs-up or thumbs-down buttons to register your feedback on whether the generation is any good.

Try a few more questions. Perhaps change the name of the expert and keep the topic fixed.

View the data on Humanloop

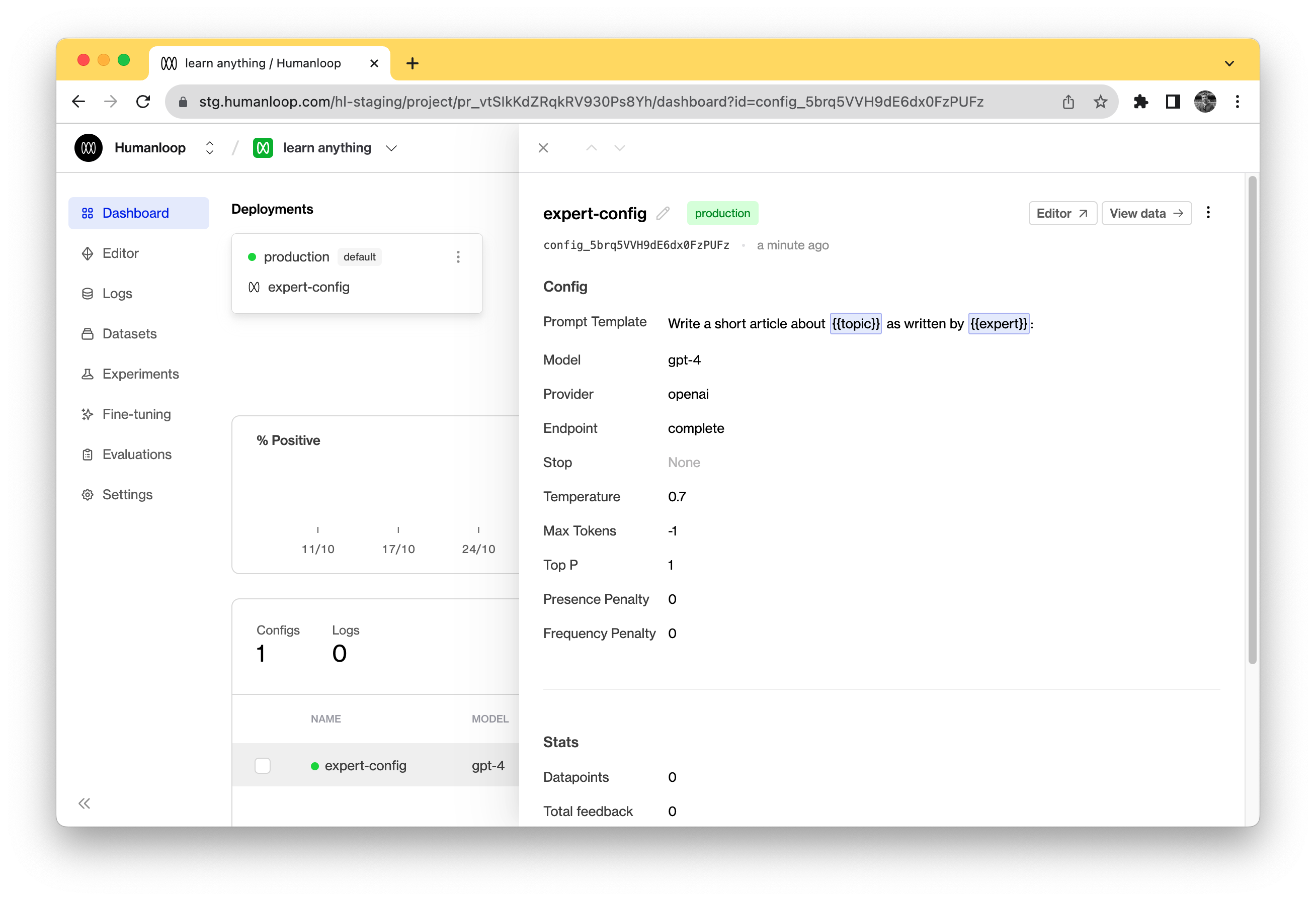

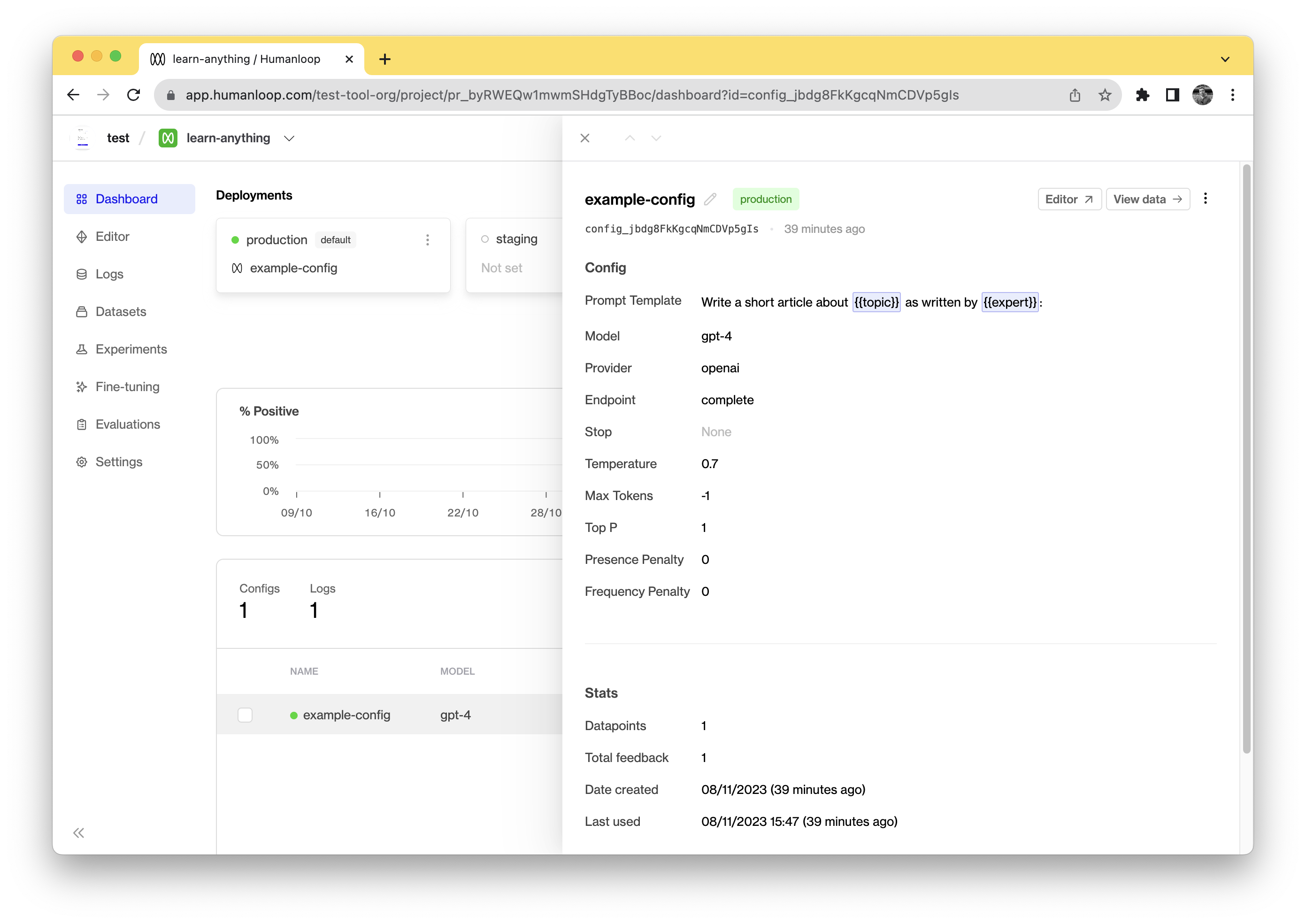

Now that you have a working app, you can use Humanloop to measure and improve its performance. Go back to the Humanloop app and open your project named “learn-anything”.

On the Models dashboard you’ll be able to see how many data points have flowed through the app as well as how much feedback you’ve received. Click on your model in the table at the bottom of the page.

Click View data in the top right. Here you should be able to see each of your generations as well as the feedback that’s been logged against them. You can also add your own internal feedback by clicking on a datapoint in the table and using the feedback buttons.
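The dashboard figures described above are, conceptually, simple aggregates over logged datapoints. The Python sketch below counts datapoints and feedback items per model config from a list of records. The Datapoint fields (model_config, feedback) are hypothetical and only illustrate the idea; they are not Humanloop’s data model.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Datapoint:
    # Hypothetical record: the model config that produced it and its feedback values.
    model_config: str
    feedback: list[str] = field(default_factory=list)


def dashboard_counts(datapoints: list[Datapoint]) -> dict[str, tuple[int, int]]:
    """Map each model config to (number of datapoints, number of feedback items)."""
    generations: Counter = Counter()
    feedback: Counter = Counter()
    for point in datapoints:
        generations[point.model_config] += 1
        feedback[point.model_config] += len(point.feedback)
    return {config: (generations[config], feedback[config]) for config in generations}


if __name__ == "__main__":
    points = [Datapoint("v1", ["good"]), Datapoint("v1"), Datapoint("v2", ["bad", "copy"])]
    print(dashboard_counts(points))  # {'v1': (2, 1), 'v2': (1, 2)}
```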

Understand the code

Open the file app.py in the “openai-quickstart-python” folder. A few key code snippets will help you understand how the app works.

Between lines 30 and 41 you’ll see the following code.

On line 34 you can see the call to humanloop.complete_deployed, which takes the project name and the project inputs as arguments. humanloop.complete_deployed calls GPT-4 and also automatically logs your data to the Humanloop app.

In addition to returning the result of your model on line 39, you also get back a data_id which can be used for recording feedback about your generations.
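This flow of generating, logging and returning a data_id can be sketched in Python without the real SDK. In the sketch below, StubHumanloop is a hypothetical stand-in whose complete_deployed method only mirrors the name used in app.py. Its arguments, the input names expert and topic, and the returned dictionary with output and data_id keys are all assumptions for illustration. Check the SDK reference for the actual signature and response format.

```python
import uuid


class StubHumanloop:
    """Hypothetical stand-in for the Humanloop client (not the real SDK)."""

    def __init__(self) -> None:
        # data_id -> logged project, inputs and output, mimicking automatic logging.
        self.logs: dict[str, dict] = {}

    def complete_deployed(self, project: str, inputs: dict[str, str]) -> dict[str, str]:
        # A real call would run the deployed model config; here the text is faked.
        output = f"{inputs['expert']} explains {inputs['topic']}..."
        data_id = str(uuid.uuid4())
        self.logs[data_id] = {"project": project, "inputs": inputs, "output": output}
        return {"output": output, "data_id": data_id}


def generate_explanation(client: StubHumanloop, expert: str, topic: str) -> tuple[str, str]:
    """Return the generated text and the data_id needed later for feedback."""
    response = client.complete_deployed(
        project="learn-anything",
        inputs={"expert": expert, "topic": topic},
    )
    return response["output"], response["data_id"]


if __name__ == "__main__":
    text, data_id = generate_explanation(StubHumanloop(), "Aristotle", "ethics")
    print(text)  # Aristotle explains ethics...
```

The point of the design is that the data_id travels with the output. Whatever shows the generation to the user also keeps a handle that a later thumbs-up or thumbs-down can be attached to.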

On line 51 of app.py, you can see an example of logging feedback to Humanloop.

The call to humanloop.feedback uses the data_id returned above to associate a piece of positive feedback with that generation.

In this app there are two feedback groups: rating (which can be good or bad) and actions (here, the copy button, which also indicates positive feedback from the user).
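The two feedback groups can be modelled as a small validation step before anything is sent. This Python sketch is hypothetical: the FEEDBACK_GROUPS table, the value names good, bad and copy, and the record_feedback helper are illustrative assumptions, not the humanloop.feedback API. It only shows how a rating and an action can both be tied to a data_id and read as positive or negative signals.

```python
# Hypothetical allowed values per feedback group, following the app's description.
FEEDBACK_GROUPS = {
    "rating": {"good", "bad"},
    "actions": {"copy"},
}

# Values treated as positive signals; copying the output also counts as positive.
POSITIVE_VALUES = {("rating", "good"), ("actions", "copy")}


def record_feedback(store: list[dict], data_id: str, group: str, value: str) -> dict:
    """Validate a feedback event and append it to an in-memory store."""
    if value not in FEEDBACK_GROUPS.get(group, set()):
        raise ValueError(f"unknown feedback {group}={value}")
    event = {
        "data_id": data_id,
        "group": group,
        "value": value,
        "positive": (group, value) in POSITIVE_VALUES,
    }
    store.append(event)
    return event
```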

Add a new model config

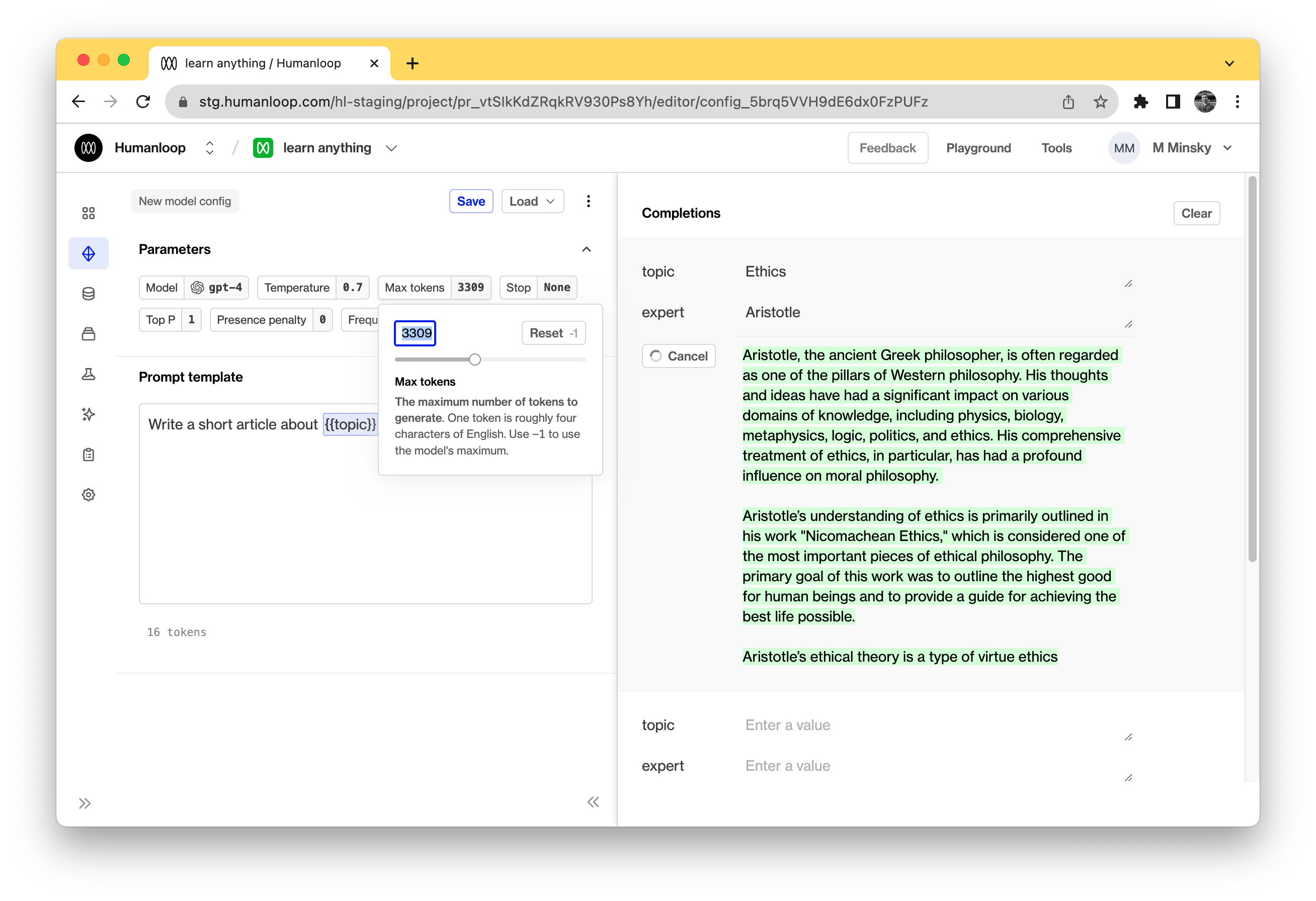

If you experiment a bit, you might find that the model isn’t initially that good. The answers are often too short or not in the style of the expert being asked. We can try to improve this by experimenting with other prompts.

- Click on your model on the model dashboard and then, in the top right, click Editor

- Edit the prompt template to try and improve the prompt. Try changing the maximum number of tokens using the Max tokens slider, or the wording of the prompt.

Here are some prompt ideas to try out. Which ones work better?

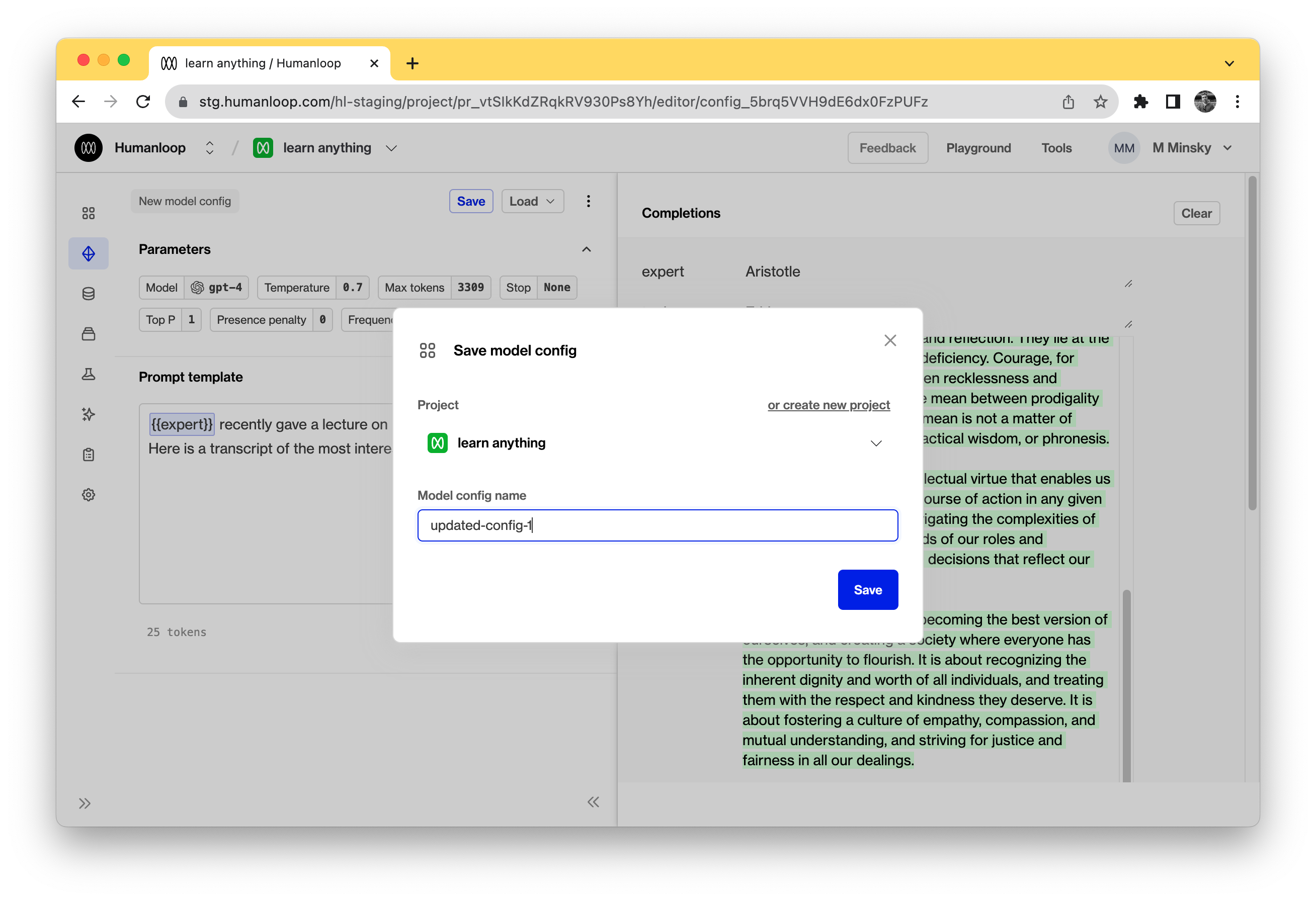

- Click Save and add the new model config to the “learn-anything” project.

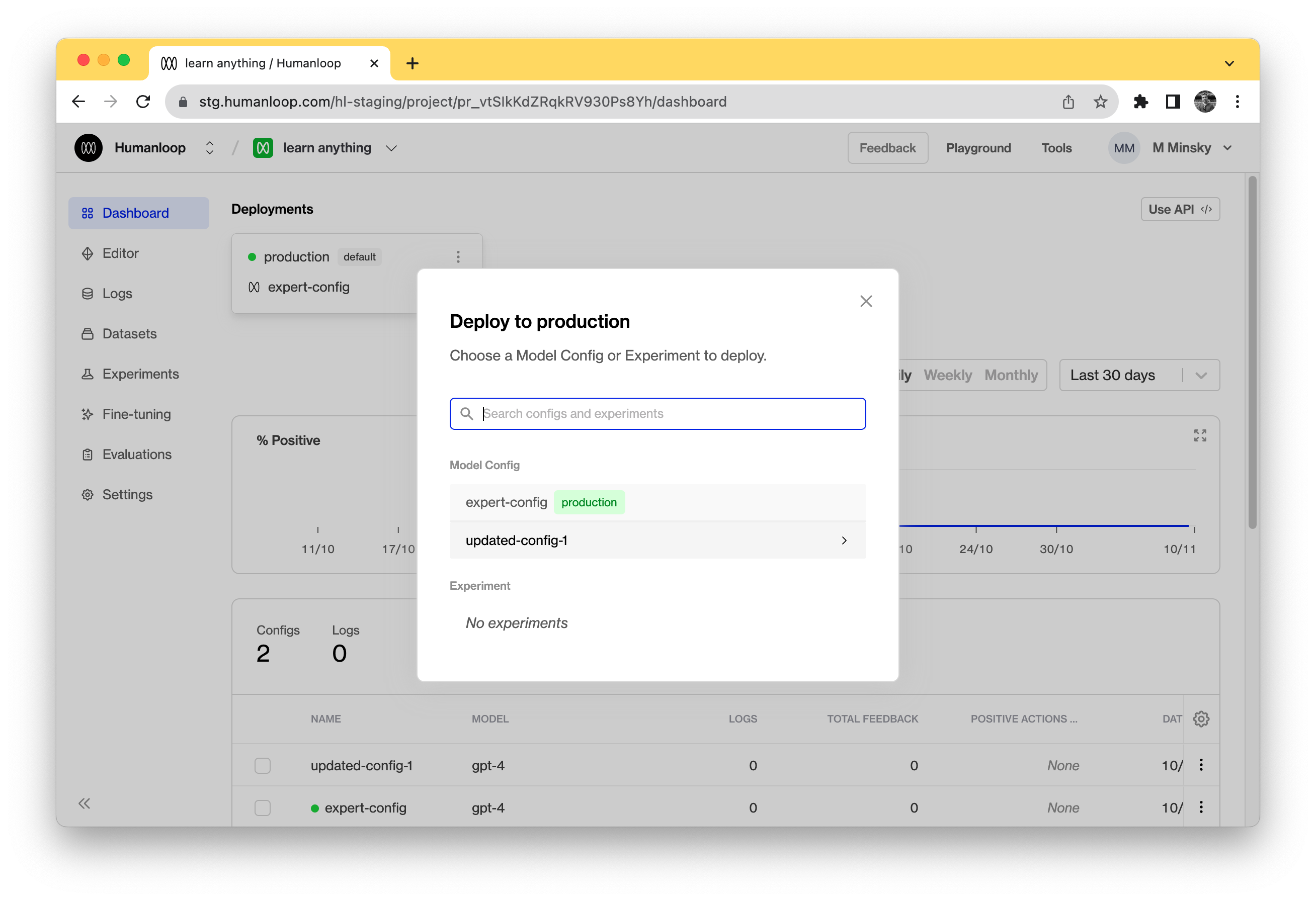

- Go to your project dashboard. At the top left of the page, open the menu on the “production” environment card, click Change deployment and set the new model config as active. Calls to humanloop.complete_deployed will now use this new model. Now go back to the app and see the effect!
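One way to picture Change deployment is as a pointer from an environment name to a model config. Code that asks for the environment’s deployment picks up whichever config is currently active, with no change to the app itself. The Python sketch below illustrates that idea with a hypothetical Deployments class and made-up config names. It is not how Humanloop stores deployments internally.

```python
class Deployments:
    """Hypothetical registry mapping environment names to active model configs."""

    def __init__(self) -> None:
        self._active: dict[str, str] = {}

    def deploy(self, environment: str, config_id: str) -> None:
        # Point the environment at a new config; callers need no code change.
        self._active[environment] = config_id

    def active(self, environment: str) -> str:
        if environment not in self._active:
            raise LookupError(f"nothing deployed to '{environment}'")
        return self._active[environment]


if __name__ == "__main__":
    deployments = Deployments()
    deployments.deploy("production", "initial-config")
    print(deployments.active("production"))  # initial-config
    deployments.deploy("production", "longer-answers-config")
    print(deployments.active("production"))  # longer-answers-config
```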

Congratulations!

And that’s it! You should now understand how to go from creating a Prompt in Humanloop to a deployed, functioning app. You’ve learned how to create prompt templates, capture user feedback and deploy a new model.

If you want to learn how to improve your model by running experiments or fine-tuning, check out our guides below.