Fine-tune a model

In this guide we will demonstrate how to use Humanloop’s fine-tuning workflow to produce improved models leveraging your user feedback data.

Paid Feature

This feature is not available for the Free tier. Please contact us if you wish to learn more about our Enterprise plan.

Prerequisites

- You already have a Prompt — if not, please follow our Prompt creation guide first.

- You have integrated the humanloop.complete_deployed() or humanloop.chat_deployed() endpoints, along with humanloop.feedback(), using the API or Python SDK.

A common question is how much data you need to fine-tune effectively. OpenAI’s guidelines offer a reference point:

The more training examples you have, the better. We recommend having at least a couple hundred examples. In general, we’ve found that each doubling of the dataset size leads to a linear increase in model quality.
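One way to read the guideline above: if each doubling adds a roughly constant increment of quality, then quality grows with the logarithm of the dataset size, so later gains cost far more data than earlier ones. The sketch below only counts doublings relative to a baseline; the baseline of 200 examples is an assumption standing in for "a couple hundred", and the function name is hypothetical.

```python
import math


def doublings(n_examples: int, baseline: int = 200) -> float:
    """Return how many times the dataset has doubled relative to a baseline.

    The baseline of 200 is an illustrative assumption, not a documented value.
    """
    if n_examples <= 0 or baseline <= 0:
        raise ValueError("dataset sizes must be positive")
    return math.log2(n_examples / baseline)


# Each row needs twice the data of the previous one for one more "step".
for n in (200, 400, 800, 1600):
    print(f"{n:>5} examples -> {doublings(n):.1f} doublings over the baseline")
```

Going from 200 to 400 examples and going from 800 to 1600 examples each count as a single doubling, even though the second step requires four times as many new examples.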

Fine-tuning

The first part of fine-tuning is to select the data you wish to fine-tune on.

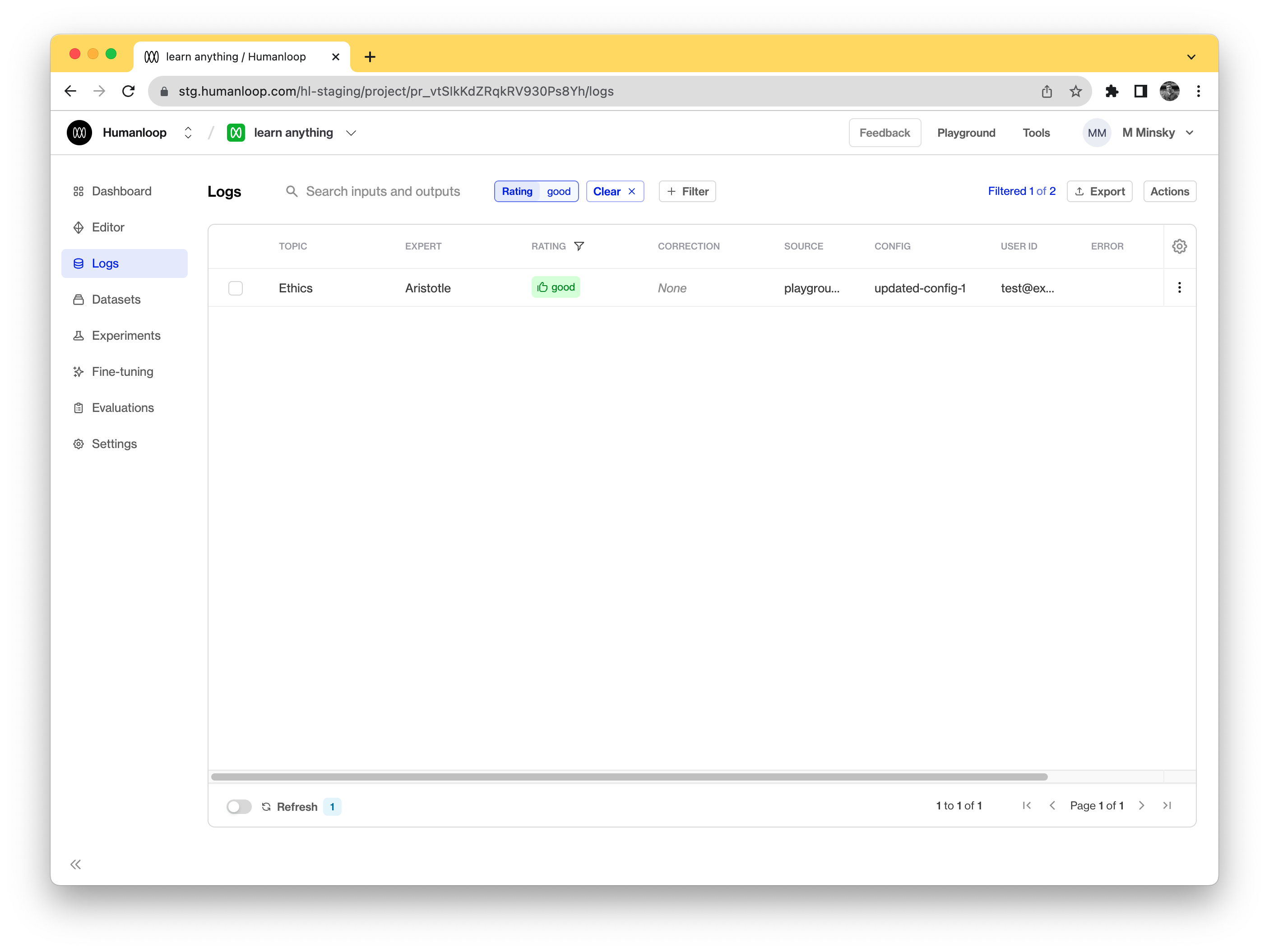

Create a filter

Use the + Filter button above the table to select the logs you would like to fine-tune on.

For example, you could select all the logs that received an upvote in the feedback captured from your end users.
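Conceptually, the filter keeps only the logs whose feedback matches a condition. The sketch below mimics that selection on plain Python dictionaries. The record shape (an "id", an "output", and a list of "feedback" labels) and the "good" label are assumptions made for illustration, not the format Humanloop uses internally.

```python
from typing import Any

# Hypothetical log records; the real log format may differ.
logs: list[dict[str, Any]] = [
    {"id": "log_1", "output": "Answer A", "feedback": ["good"]},
    {"id": "log_2", "output": "Answer B", "feedback": ["bad"]},
    {"id": "log_3", "output": "Answer C", "feedback": []},
    {"id": "log_4", "output": "Answer D", "feedback": ["bad", "good"]},
]


def has_positive_feedback(
    log: dict[str, Any],
    positive_labels: frozenset[str] = frozenset({"good"}),
) -> bool:
    """Return True if any feedback label on the log counts as positive."""
    return any(label in positive_labels for label in log.get("feedback", []))


# Keep only the logs that received at least one positive label.
selected = [log for log in logs if has_positive_feedback(log)]
print([log["id"] for log in selected])
```

Note that this sketch keeps a log with mixed feedback (log_4). Whether such logs belong in a training set is a judgment call; a stricter filter could also require that no negative label is present.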

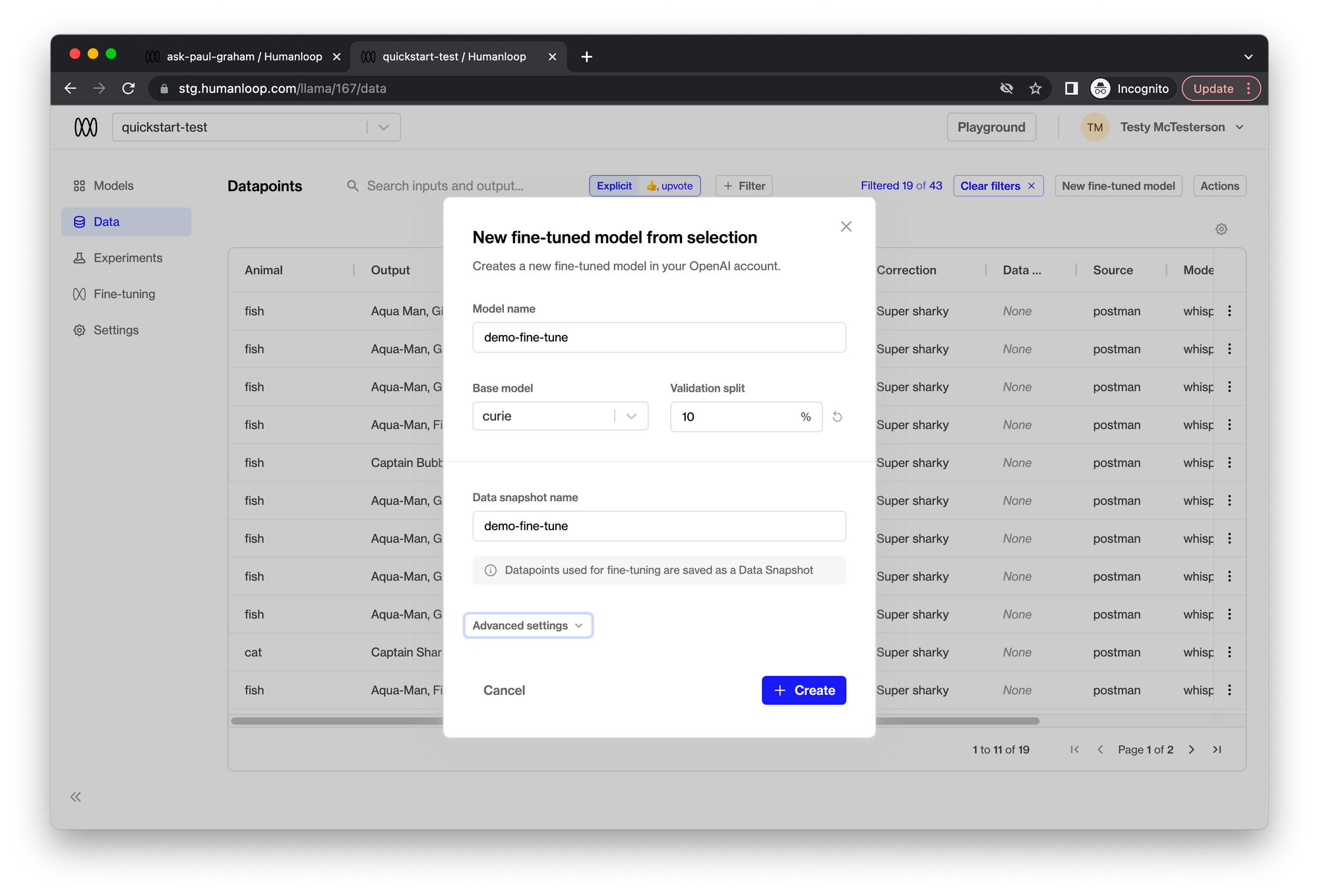

Click the Actions button, then click the New fine-tuned model button to set up the fine-tuning process.

Enter the appropriate parameters for the fine-tuned model.

- Enter a Model name. This will be used as the suffix parameter in OpenAI’s fine-tune interface. For example, a suffix of “custom-model-name” would produce a model name like ada:ft-your-org:custom-model-name-2022-02-15-04-21-04.

- Choose the Base model to fine-tune. This can be ada, babbage, curie, or davinci.

- Select a Validation split percentage. This is the proportion of data that will be used for validation. Metrics will be periodically calculated against the validation data during training.

- Enter a Data snapshot name. Humanloop associates a data snapshot with every fine-tuned model instance so it is easy to keep track of what data was used (you can see your existing data snapshots on the Settings/Data snapshots page).
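To make two of these parameters concrete, the sketch below reproduces the model name pattern from the example above and shows what a validation split percentage means for a set of examples. Both helper functions are hypothetical. The final name is assigned by OpenAI rather than built by you, and the random shuffle is an assumption, since this guide does not describe how the split is actually performed.

```python
import random
from datetime import datetime
from typing import Sequence


def fine_tuned_model_name(
    base_model: str, org: str, suffix: str, created_at: datetime
) -> str:
    """Format a name following the pattern shown in the example above."""
    timestamp = created_at.strftime("%Y-%m-%d-%H-%M-%S")
    return f"{base_model}:ft-{org}:{suffix}-{timestamp}"


def split_for_validation(
    examples: Sequence, validation_pct: float, seed: int = 0
) -> tuple[list, list]:
    """Split examples into (train, validation) by percentage.

    Uses a seeded shuffle so the split is reproducible (an assumption).
    """
    if not 0 <= validation_pct < 100:
        raise ValueError("validation_pct must be in the range [0, 100)")
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    n_validation = round(len(shuffled) * validation_pct / 100)
    return shuffled[n_validation:], shuffled[:n_validation]


name = fine_tuned_model_name(
    "ada", "your-org", "custom-model-name", datetime(2022, 2, 15, 4, 21, 4)
)
print(name)

train, validation = split_for_validation(range(200), validation_pct=10)
print(len(train), len(validation))
```

With 200 examples and a 10% split, 20 examples are held out for validation metrics and 180 remain for training. A larger split gives more stable validation metrics at the cost of training data.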

Click Create.

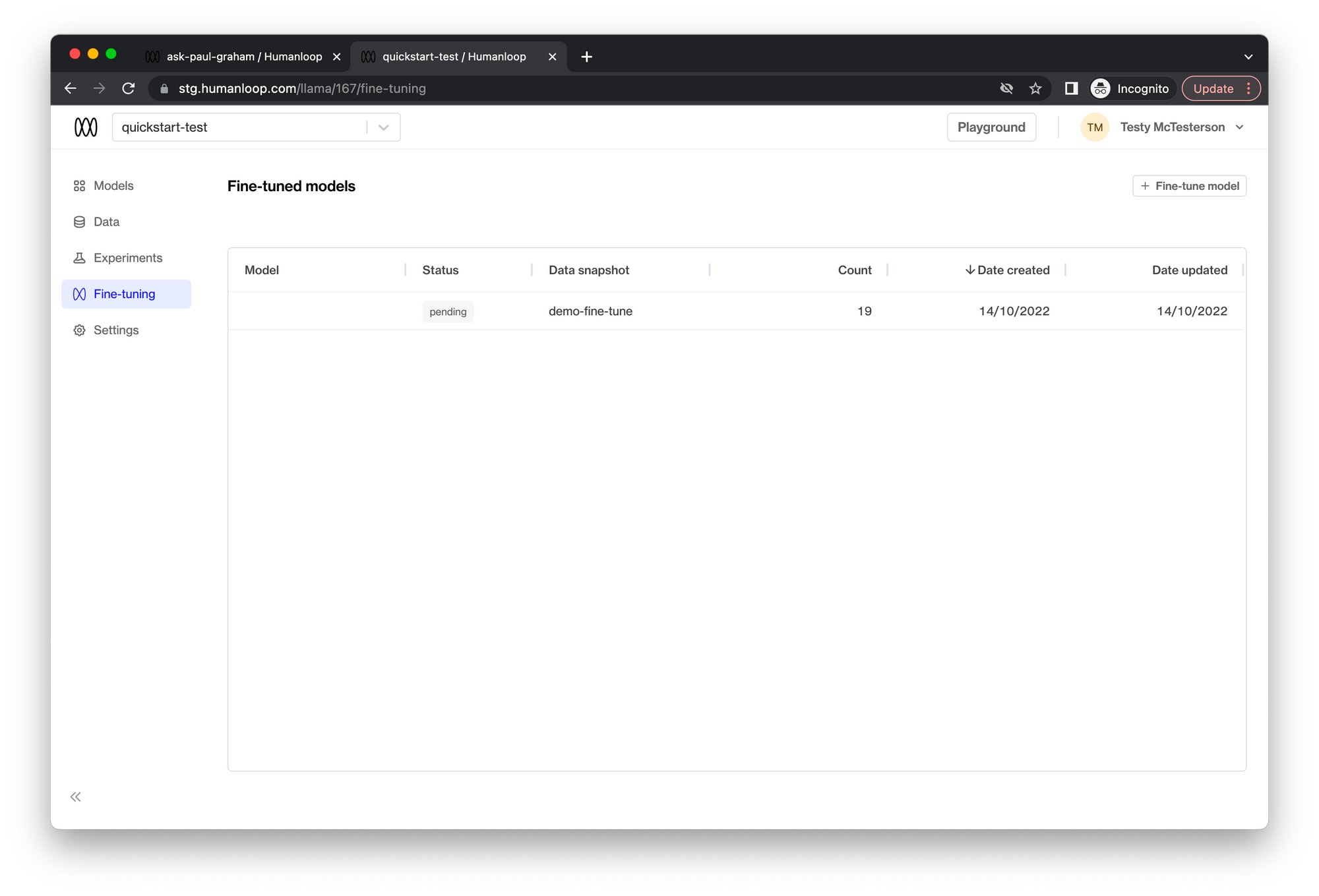

The fine-tuning process runs asynchronously and may take up to a couple of hours to complete depending on your data snapshot size.

🎉 You can now use this fine-tuned model in a Prompt and evaluate its performance.