Re-use snippets in Prompts

How to re-use common text snippets in your Prompt templates with the Snippet Tool

The Snippet Tool supports managing common text ‘snippets’ that you want to reuse across your different prompts. A Snippet tool acts as a simple key/value store, where the key is the name of the common re-usable text snippet and the value is the corresponding text.

For example, you may have some common persona descriptions that you found to be effective across a range of your LLM features. Or maybe you have some specific formatting instructions that you find yourself re-using again and again in your prompts.

Rather than copying and pasting between editor sessions and keeping track of which projects you edited, you can inject the text into your prompt using the Snippet Tool.

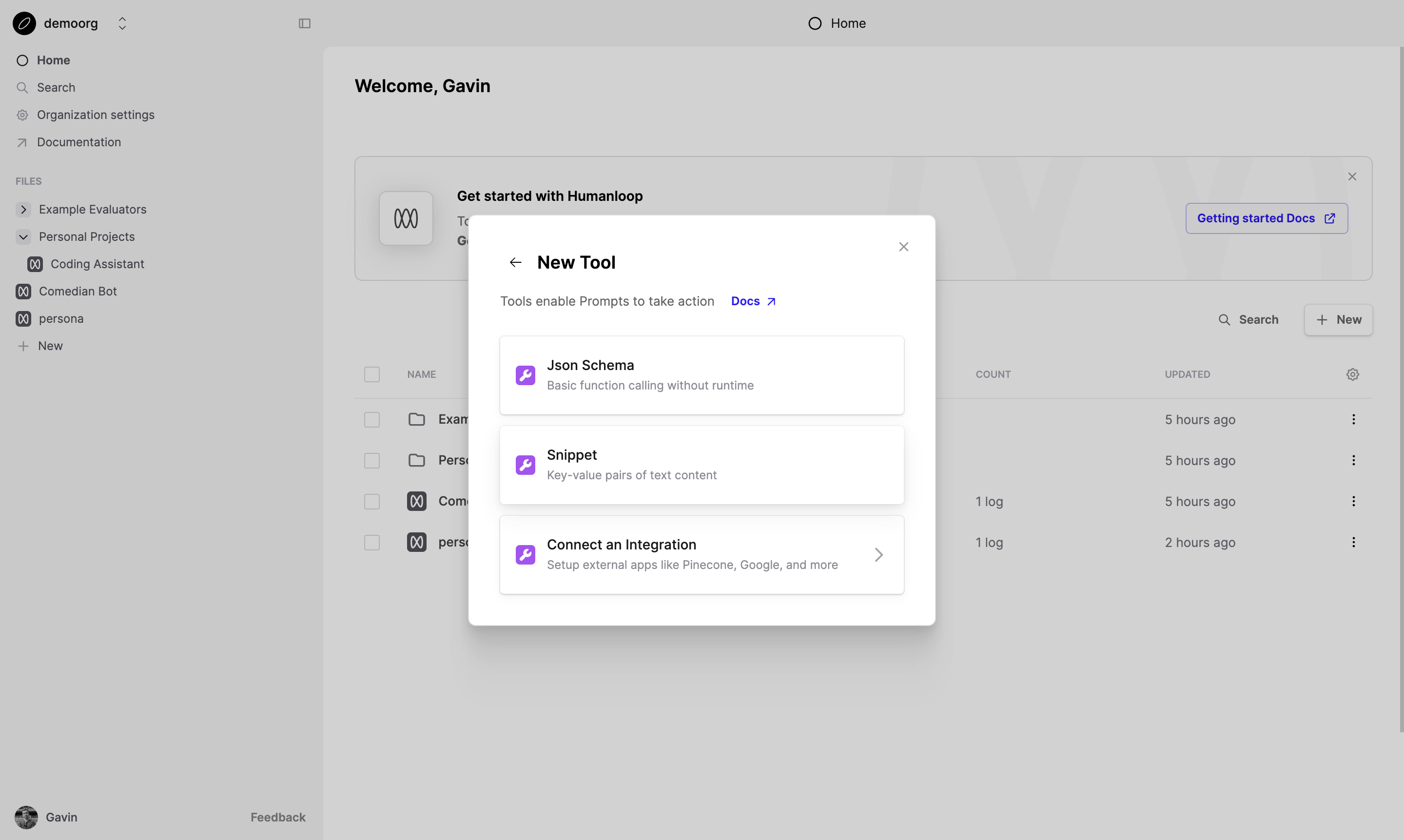

Create and use a Snippet Tool

Prerequisites

- You already have a Prompt — if not, please follow our Prompt creation guide first.

To create and use a Snippet Tool, follow these steps:

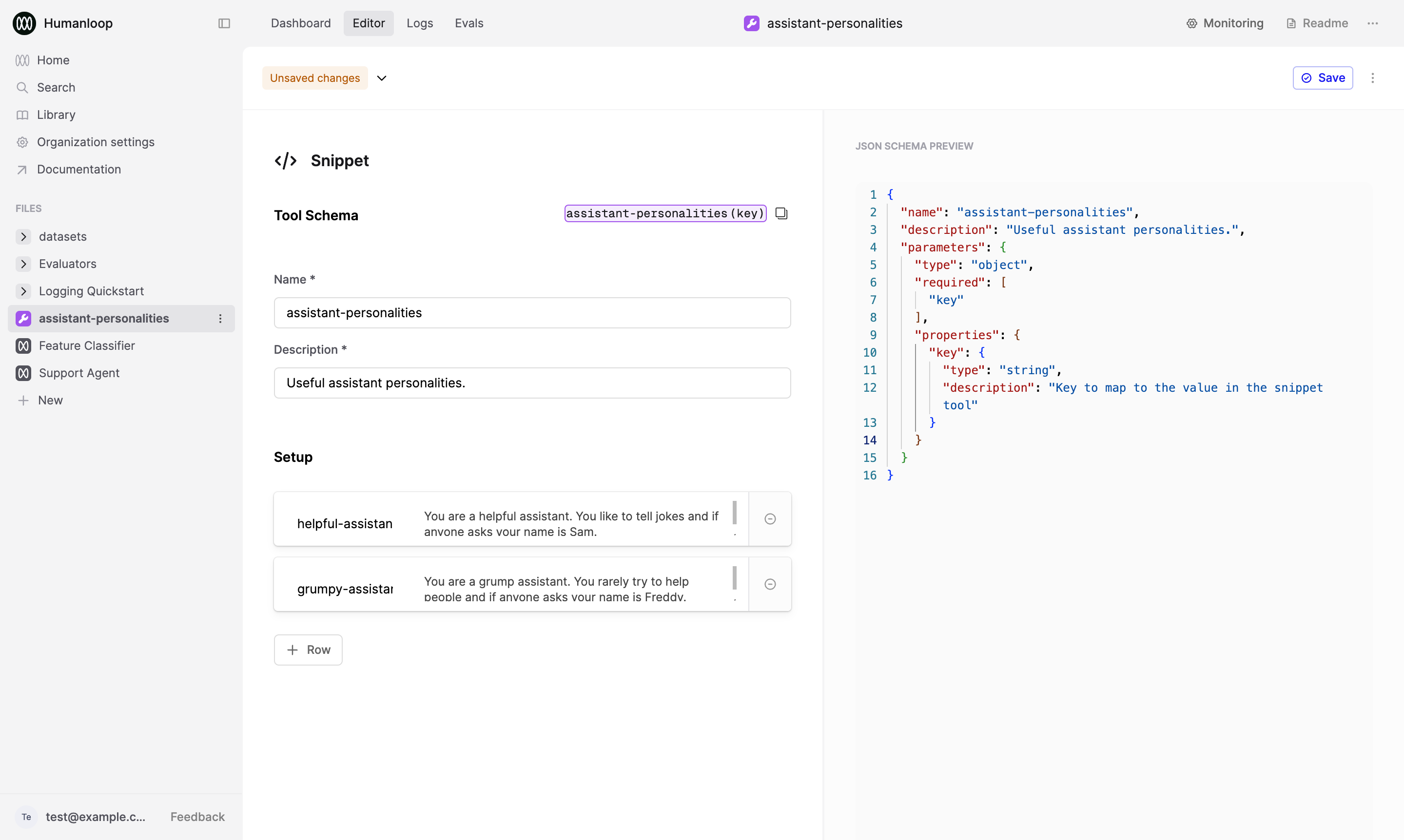

Name the Tool

Name it assistant-personalities and give it the description “Useful assistant personalities”.

Add a key called “helpful-assistant”

In the initial box, add the key helpful-assistant and give it the value “You are a helpful assistant. You like to tell jokes and if anyone asks your name is Sam.”

Add another key called “grumpy-assistant”

Let’s add another key-value pair. Press the Add a key/value pair button, add a new key of grumpy-assistant, and give it the value “You are a grumpy assistant. You rarely try to help people and if anyone asks your name is Freddy.”

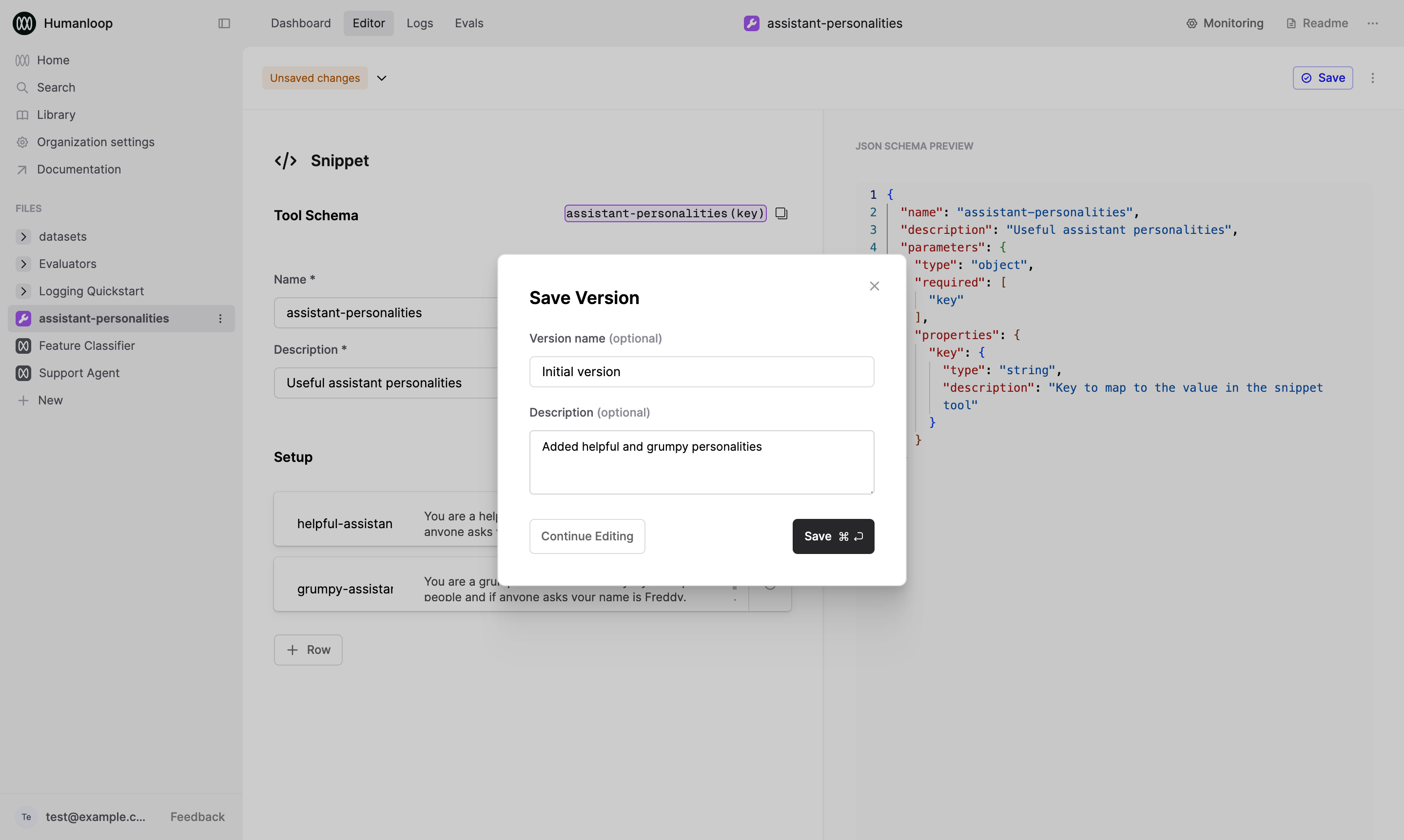
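As a mental model only, the tool configured above behaves like a small dictionary. The sketch below uses a plain Python dict and a hypothetical `get_snippet` helper; neither is part of the product's API, they only illustrate the key/value lookup.

```python
# Illustrative only: a plain dict standing in for the Snippet Tool's key/value store.
ASSISTANT_PERSONALITIES = {
    "helpful-assistant": (
        "You are a helpful assistant. You like to tell jokes "
        "and if anyone asks your name is Sam."
    ),
    "grumpy-assistant": (
        "You are a grumpy assistant. You rarely try to help people "
        "and if anyone asks your name is Freddy."
    ),
}


def get_snippet(store: dict, key: str) -> str:
    """Return the snippet text for `key`, failing loudly on unknown keys."""
    if key not in store:
        raise KeyError(f"No snippet named {key!r}; known keys: {sorted(store)}")
    return store[key]


print(get_snippet(ASSISTANT_PERSONALITIES, "grumpy-assistant"))
```

Failing on an unknown key, rather than returning an empty string, is a design choice in this sketch: a silently empty persona would be harder to notice in a prompt.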

Save and Deploy your Tool.

Press the Save button, and optionally enter a name and description for this new version. When presented with the deployment dialog, click the Deploy button and deploy to your production environment.

Now that your Snippets are set up, you can use them to populate strings in your prompt templates across your projects.

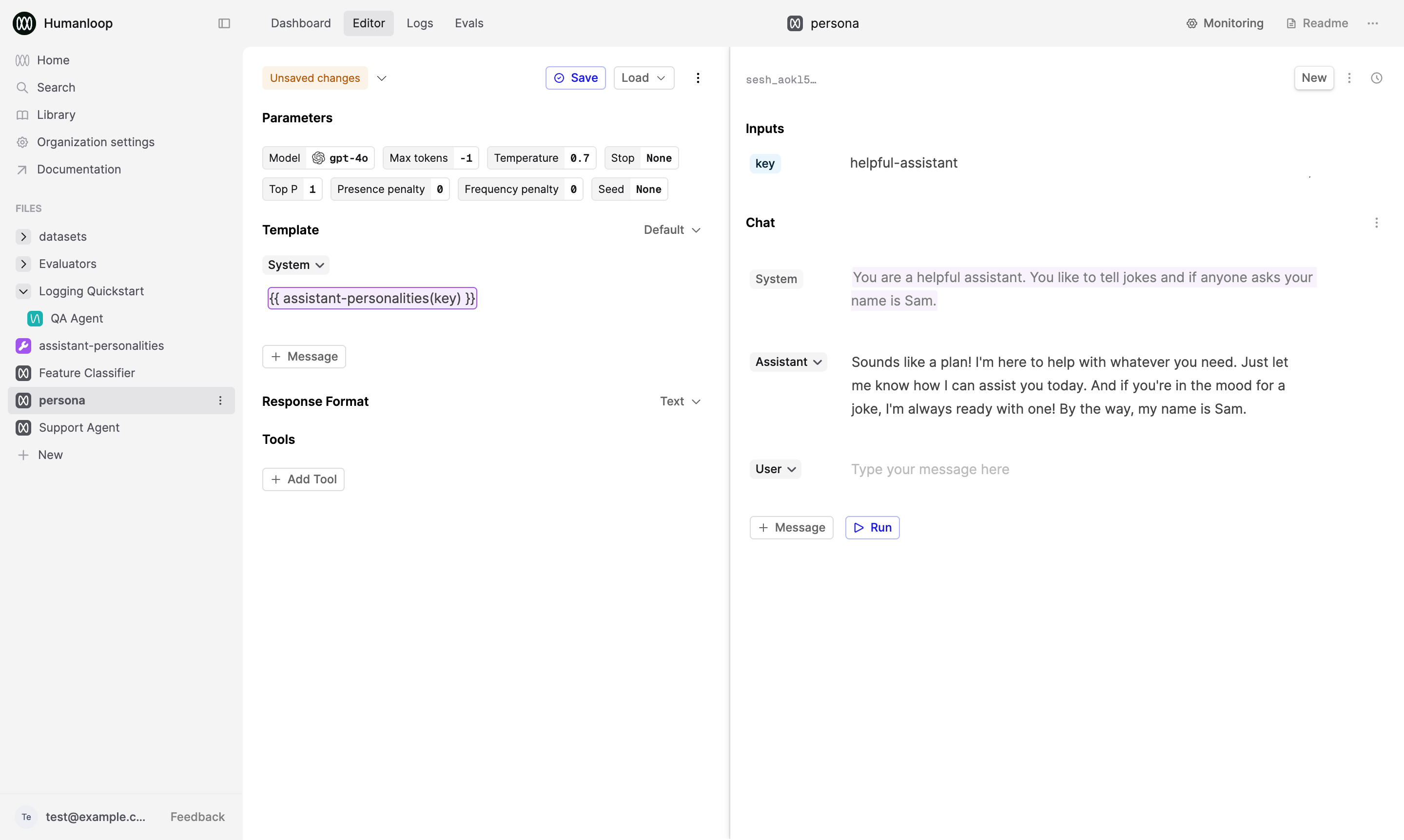

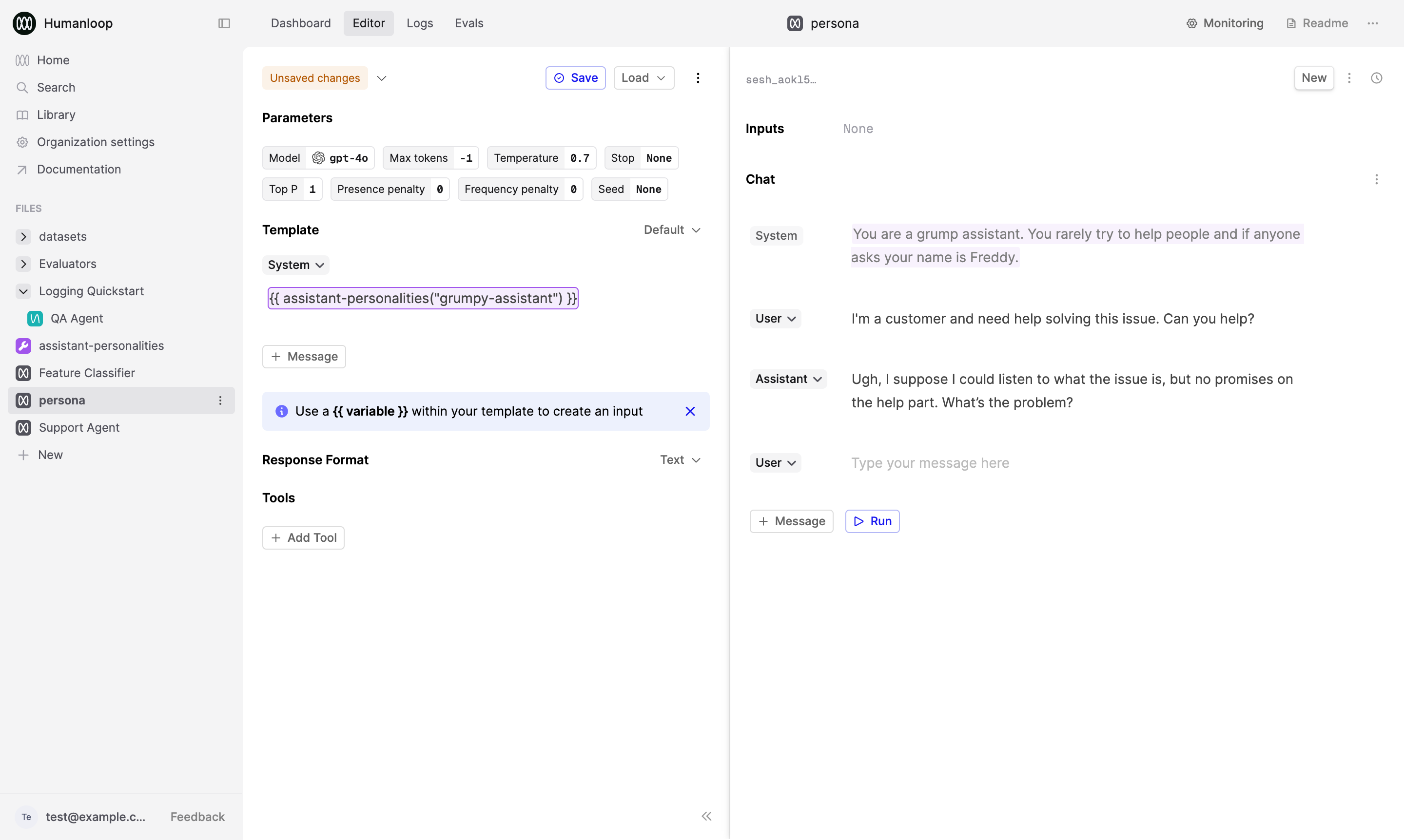

Add {{ assistant-personalities(key) }} to your prompt

Delete the existing prompt template and add {{ assistant-personalities(key) }} to your prompt.

Tool syntax: {{ tool-name(key) }}

Double curly bracket syntax is used to call a tool in the editor. Inside the curly brackets you put the tool name, e.g. {{ my-tool-name(key) }}.
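To make the substitution concrete, here is a minimal sketch of how a `{{ tool-name(key) }}` call could be resolved. It assumes a hypothetical in-memory `TOOLS` mapping and a hypothetical `render` function with a simple regular expression; it is not the editor's actual parser, and the snippet texts are shortened.

```python
import re

# Hypothetical stand-in for deployed Snippet Tools: tool name -> {key: text}.
# Snippet texts are shortened for the example.
TOOLS = {
    "assistant-personalities": {
        "helpful-assistant": "You are a helpful assistant.",
        "grumpy-assistant": "You are a grumpy assistant.",
    }
}

# Matches {{ tool-name(input_name) }} with optional spaces inside the brackets.
TOOL_CALL = re.compile(r"\{\{\s*([\w-]+)\(\s*([\w-]+)\s*\)\s*\}\}")


def render(template: str, inputs: dict) -> str:
    """Replace each tool call with the snippet selected by the named input."""

    def resolve(match: re.Match) -> str:
        tool_name, input_name = match.group(1), match.group(2)
        key = inputs[input_name]      # e.g. inputs["key"] == "helpful-assistant"
        return TOOLS[tool_name][key]  # look the key up in that tool's store

    return TOOL_CALL.sub(resolve, template)


print(render("{{ assistant-personalities(key) }}", {"key": "helpful-assistant"}))
```

In this sketch the name inside the parentheses refers to an input, and the input's value selects which snippet is injected.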

Enter the key as an input

In the input area, set the value to helpful-assistant. The tool requires an input value for the key. When you add the tool, an inputs field appears in the top right of the editor where you can specify your key.

Press the Run button

Start a chat to see both the LLM’s response and your previously defined key in the right panel.

Change the key to grumpy-assistant.

The snippet preview updates after running the chat

To see the snippet content for a new key, you’ll need to run the chat first. The preview will then update to show the corresponding text.

Play with the LLM

Ask the LLM, “I'm a customer and need help solving this issue. Can you help?” You should see a grumpy response from “Freddy” now.

To hardcode a specific key in the prompt, pass it as a quoted literal: {{ <your-tool-name>("key") }}. In this case that is {{ assistant-personalities("grumpy-assistant") }}. Delete grumpy-assistant from the inputs field and add the literal call to your chat template.
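The difference between a quoted literal key and an input-supplied key can be sketched as follows. The `TOOLS` mapping, the `render` function, and the regular expression are all hypothetical and only illustrate the two call forms; the snippet text is shortened.

```python
import re
from typing import Optional

# Hypothetical stand-in for a deployed Snippet Tool (text shortened).
TOOLS = {
    "assistant-personalities": {
        "grumpy-assistant": "You are a grumpy assistant.",
    }
}

# Group 2 captures a quoted literal key, group 3 an unquoted input name.
TOOL_CALL = re.compile(
    r'\{\{\s*([\w-]+)\(\s*(?:"([^"]+)"|([\w-]+))\s*\)\s*\}\}'
)


def render(template: str, inputs: Optional[dict] = None) -> str:
    """Resolve tool calls, using the literal key when one is quoted."""
    inputs = inputs or {}

    def resolve(match: re.Match) -> str:
        tool_name, literal, input_name = match.groups()
        # A quoted key is used as-is; an unquoted name is read from the inputs.
        key = literal if literal is not None else inputs[input_name]
        return TOOLS[tool_name][key]

    return TOOL_CALL.sub(resolve, template)


# Hardcoded key: no inputs are needed.
print(render('{{ assistant-personalities("grumpy-assistant") }}'))
# Input-supplied key: the caller chooses the snippet at run time.
print(render("{{ assistant-personalities(key) }}", {"key": "grumpy-assistant"}))
```

Both calls resolve to the same snippet here; the hardcoded form simply removes the need to supply an input.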

The Snippet Tool is particularly useful because you can define passages of text once and reuse them across multiple prompts, without copying and pasting them or manually keeping them in sync. When you update the values in your tool, the changes propagate automatically to the Prompts that use them, as long as the key stays the same.

Changing a snippet value can change a Prompt's behaviour

Since the values for a Snippet are saved on the Tool, not the Prompt, changing the values (or keys) defined in your Snippet Tools can affect the Prompt’s behaviour in a way that won’t be captured by the Prompt’s version.

This could be exactly what you intend, but take care to make sure the changes are expected.
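One way to notice such changes in your own tooling is to fingerprint the template together with the snippet values it depends on. The sketch below is an assumption about how you might do this outside the product; `fingerprint` is a hypothetical helper, not a built-in feature, and the snippet texts are shortened.

```python
import hashlib


def fingerprint(prompt_template: str, snippets: dict) -> str:
    """Hash the template together with every snippet key and value."""
    digest = hashlib.sha256()
    digest.update(prompt_template.encode("utf-8"))
    for key in sorted(snippets):  # sorted so dict ordering does not matter
        digest.update(b"\x00" + key.encode("utf-8"))
        digest.update(b"\x00" + snippets[key].encode("utf-8"))
    return digest.hexdigest()


template = "{{ assistant-personalities(key) }}"
snippets = {"grumpy-assistant": "You are a grumpy assistant."}
before = fingerprint(template, snippets)

# The template is untouched, but the snippet value is edited on the tool.
snippets["grumpy-assistant"] = "You are a mildly grumpy assistant."
after = fingerprint(template, snippets)

print(before != after)  # True: the effective prompt changed
```

Storing such a fingerprint alongside evaluation results would let you tell whether two runs of the same Prompt version actually used the same snippet text.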