Create a Dataset from existing Logs

A Dataset is a collection of input-output pairs that you can use to evaluate your Prompts, Tools, or even Evaluators.

This guide will show you how to create Datasets in Humanloop from your Logs.

Prerequisites

You should have an existing Prompt on Humanloop and have already generated some Logs. If you don't have a Prompt yet, follow our guide on creating a Prompt.

Steps

To create a Dataset from existing Logs:

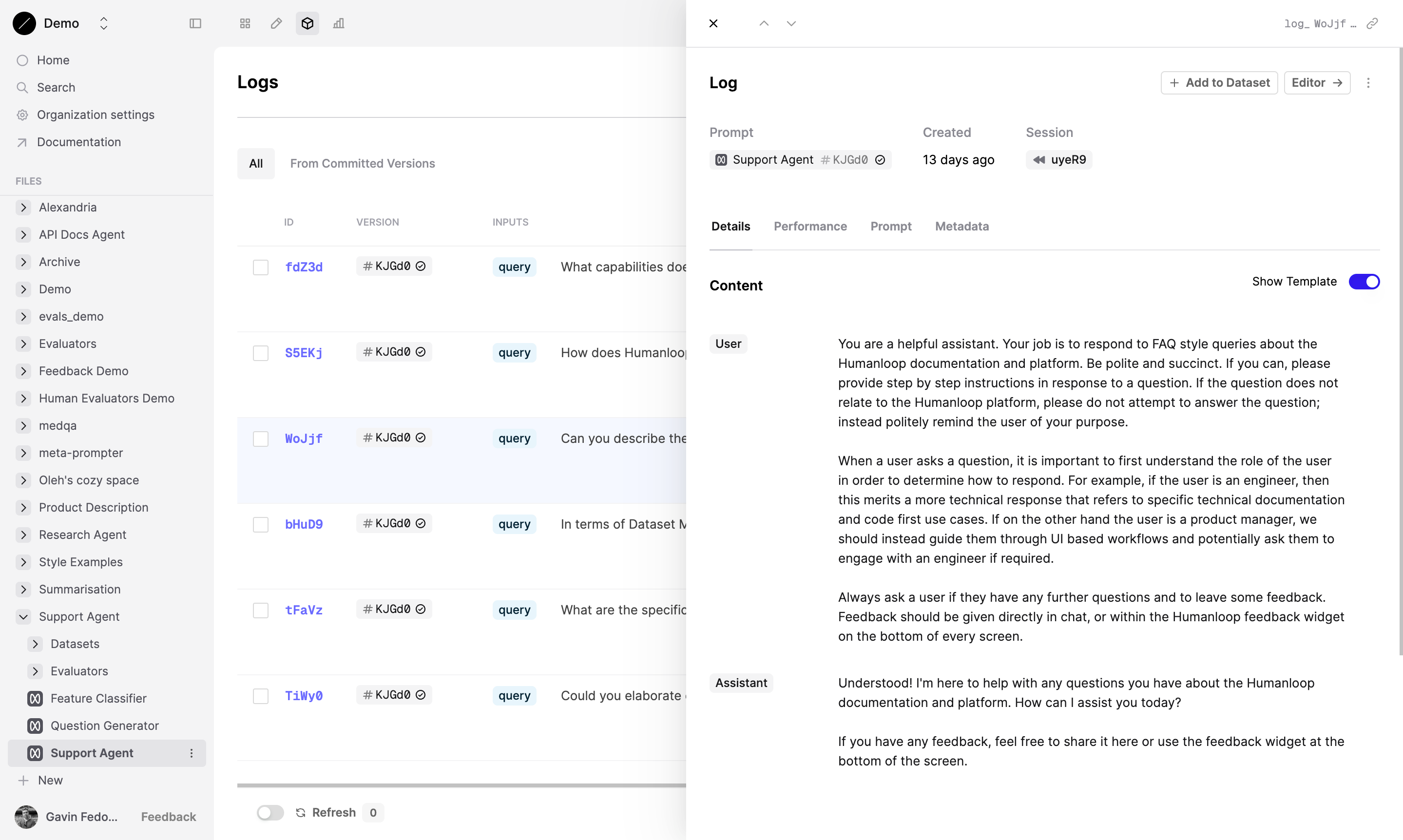

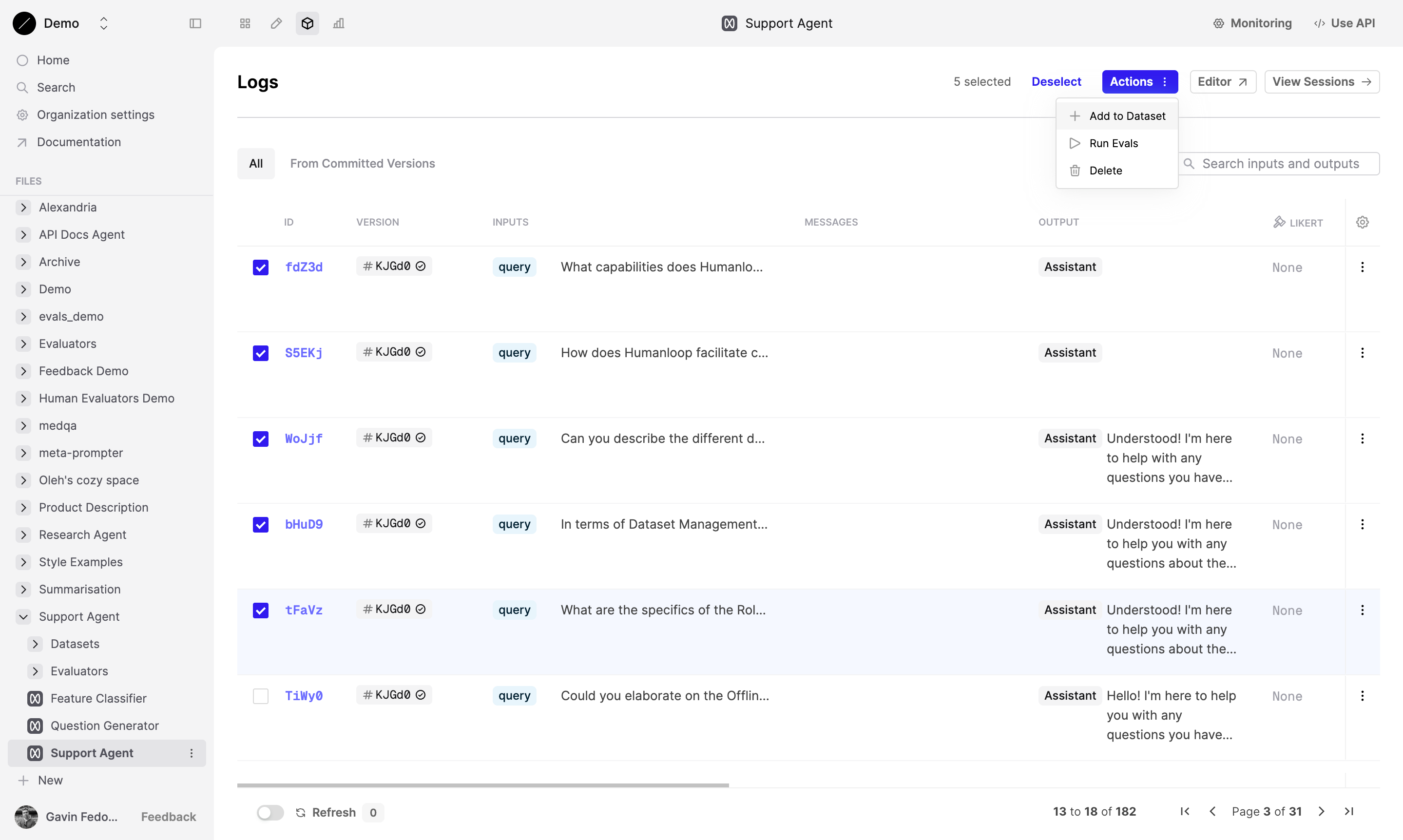

Select a subset of the Logs to add

Filter the Logs on a criterion of interest, such as the version of the Prompt used, then multi-select the Logs you want to add.

In the menu in the top right of the page, select Add to Dataset.

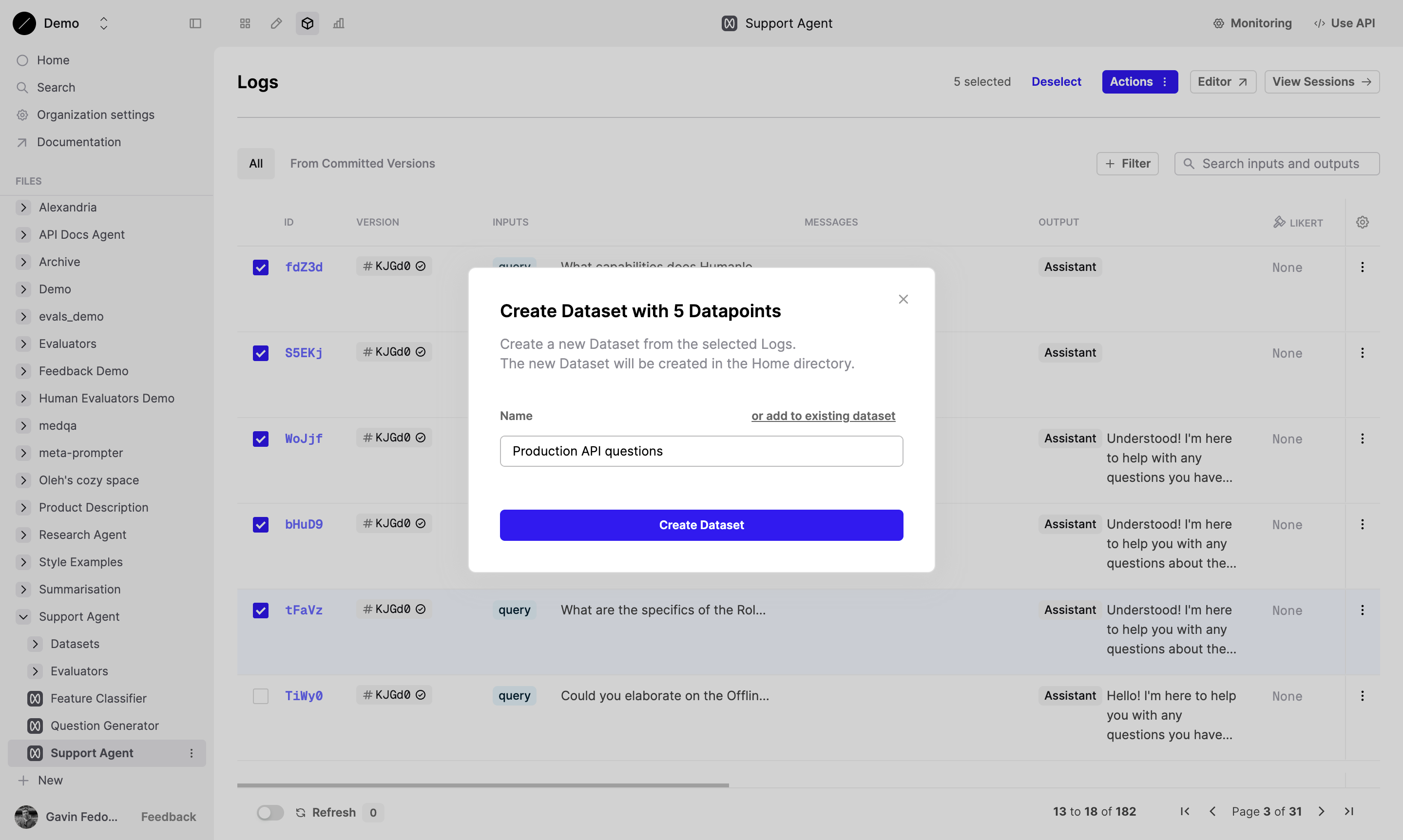
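If it helps to think about this step in code, the filter-and-select flow can be sketched roughly as below. This is a conceptual illustration only: the `Log` class, its field names (`prompt_version`, `inputs`, `output`), and the `target` key are hypothetical and are not the Humanloop schema or SDK.

```python
from dataclasses import dataclass


# Hypothetical, simplified Log record for illustration; not the Humanloop schema.
@dataclass
class Log:
    id: str
    prompt_version: str
    inputs: dict
    output: str


def select_logs(logs, prompt_version):
    """Keep only the Logs generated by the given Prompt version."""
    return [log for log in logs if log.prompt_version == prompt_version]


def to_datapoints(logs):
    """Turn each selected Log into an input-output pair."""
    return [{"inputs": log.inputs, "target": log.output} for log in logs]


logs = [
    Log("log_1", "v1", {"question": "How do I reset my password?"}, "Go to Settings > Security."),
    Log("log_2", "v2", {"question": "How do I reset my password?"}, "Open Settings, then Security."),
    Log("log_3", "v2", {"question": "Where is my invoice?"}, "Invoices are under Billing."),
]

# Filter on the criterion of interest (here, the Prompt version), then convert.
selected = select_logs(logs, "v2")
datapoints = to_datapoints(selected)
```

Each selected Log becomes one input-output pair, which is what the resulting Dataset holds.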

Add to a new Dataset

Provide a name for the new Dataset and click Create, or click add to existing Dataset to append the selection to an existing Dataset. You can then optionally add a version name. The description is pre-filled with details from the source Logs; you can edit it to add more context about the new version.

You will then see the new Dataset appear at the same level in the filesystem as your Prompt.

Next steps

🎉 Now that you have Datasets defined in Humanloop, you can leverage our Evaluations feature to systematically measure and improve the performance of your AI applications. See our guides on setting up Evaluators and Running an Evaluation to get started.

For other ways to create Datasets, see the links below:

- Upload data from CSV - useful for quickly uploading existing tabular data you’ve collected outside of Humanloop.

- Upload via API - useful for uploading more complex Datasets that may have nested JSON structures, which are difficult to represent in tabular CSV format, and for integrating with your existing data pipelines.
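To see why nested structures are awkward in a tabular format, consider the minimal sketch below, which uses only the Python standard library. The datapoint shape and its field names (`inputs`, `messages`, `target`) are assumptions made for illustration, not the Humanloop Dataset schema.

```python
import csv
import io
import json

# Hypothetical datapoint with nested values; the field names are illustrative only.
datapoint = {
    "inputs": {"user": {"name": "Ada", "plan": "pro"}},
    "messages": [{"role": "user", "content": "Where is my invoice?"}],
    "target": "Invoices are under Billing.",
}


def to_csv_row(dp):
    """Serialise every non-string value to a JSON string so it fits in one cell."""
    return {
        key: value if isinstance(value, str) else json.dumps(value)
        for key, value in dp.items()
    }


# Write the flattened row to an in-memory CSV file.
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=list(datapoint))
writer.writeheader()
writer.writerow(to_csv_row(datapoint))
csv_text = buffer.getvalue()

# Reading the row back means decoding each JSON cell again by hand.
row = next(csv.DictReader(io.StringIO(csv_text)))
restored_inputs = json.loads(row["inputs"])
restored_messages = json.loads(row["messages"])
```

The round trip works, but every nested field has to be encoded into a string cell and decoded again, which is the extra friction that sending the JSON directly avoids.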