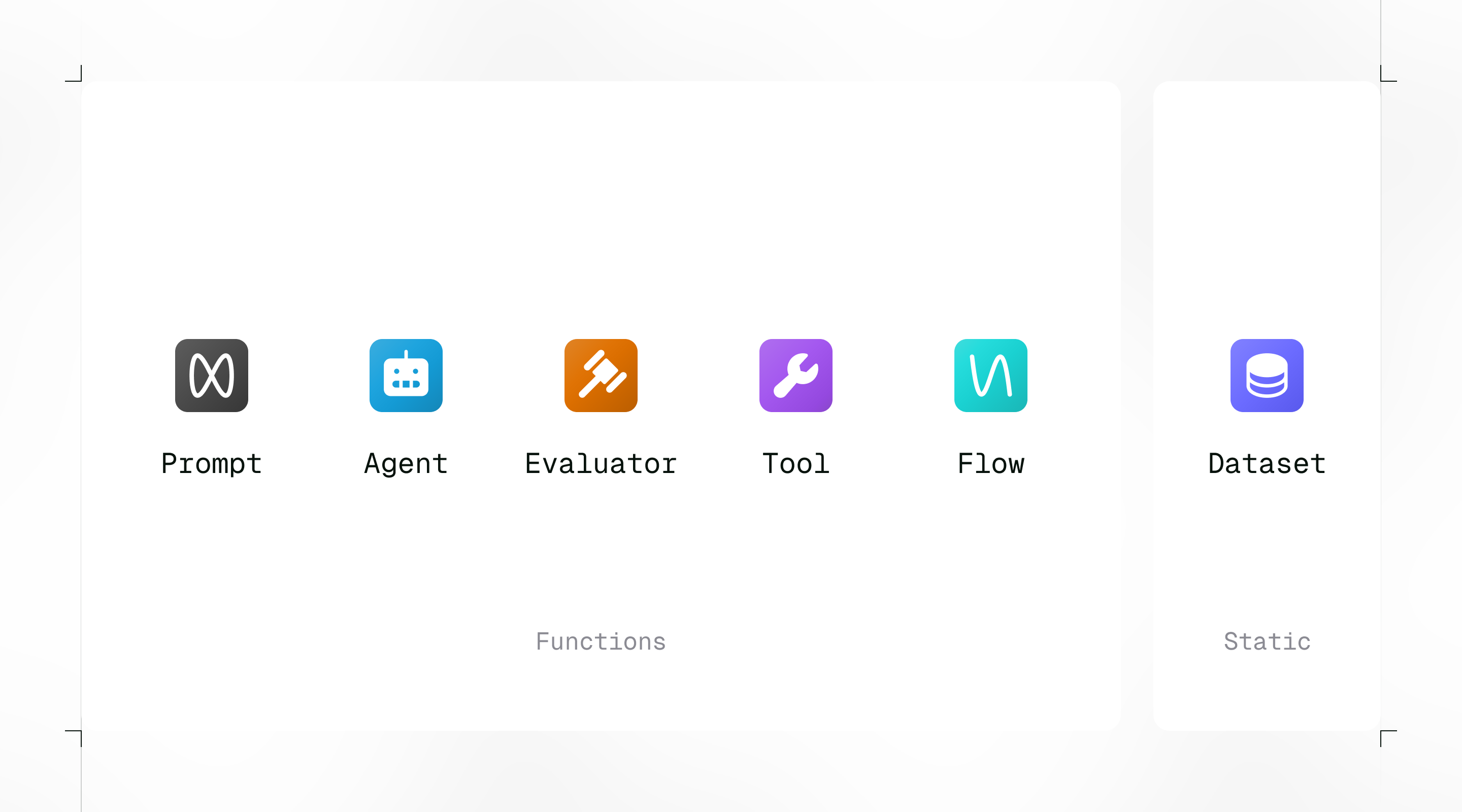

Humanloop Files

Files represent the core building blocks of your AI features on Humanloop. They exist within a flexible filesystem in an Organization.

Function Files

Prompts

Prompts define a task for a Large Language Model.

Agents

Agents are multi-step AI systems that leverage an LLM, external information sources and tool calling to accomplish complex tasks automatically.

Evaluators

Evaluators judge the output of Prompts, Tools, Flows, or other Evaluators.

Tools

Tools extend Prompts and Agents with access to external data sources and enable them to take action.

Flows

Flows are orchestrations of Prompts, Tools, and other code, enabling evaluation and improvement of complete AI pipelines.

Static Files

Datasets

Datasets are collections of Datapoints used for evaluation and fine-tuning.

File properties

Files are managed in the UI or code

Files can be managed in the Humanloop UI, or via the API. Product teams and their Subject Matter Experts (SMEs) may prefer using the UI for convenience, whereas AI Teams and Engineers may prefer to use the API for greater control and customization.

Files are version-controlled

Files have immutable Versions, each uniquely determined by the parameters that characterize the system's behavior. For example, a Prompt Version is determined by the prompt template, base model, and hyperparameters. Within the Humanloop Editor and via the API, you can save new Versions of a File, view the history of changes, and revert to a previous Version via deployments.
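One way to picture "uniquely determined by parameters" is a content hash: identical parameters always yield the same identifier, and any change yields a new one. The sketch below is illustrative only. `PromptParams`, `version_id`, and the model name are hypothetical, and nothing here is meant to describe how Humanloop actually computes Version identifiers.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class PromptParams:
    """Hypothetical set of parameters that define a Prompt Version."""
    template: str
    model: str
    temperature: float = 1.0
    max_tokens: int = 256


def version_id(params: PromptParams) -> str:
    """Derive a stable identifier from the parameters alone (assumed scheme)."""
    # sort_keys makes the serialization independent of field order.
    canonical = json.dumps(asdict(params), sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


a = PromptParams(template="Summarize: {{text}}", model="example-model", temperature=0.7)
b = PromptParams(template="Summarize: {{text}}", model="example-model", temperature=0.7)
c = PromptParams(template="Summarize: {{text}}", model="example-model", temperature=0.2)

same = version_id(a) == version_id(b)       # identical parameters, same Version
different = version_id(a) != version_id(c)  # changed hyperparameter, new Version
```

Because the dataclass is frozen, an existing set of parameters cannot be mutated in place; changing anything means constructing a new object, which mirrors the immutability of Versions.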

Files have a serialized form

All Files can be exported and imported in a serialized form. For example, Prompts and Agents are serialized to our .prompt and .agent file formats. This allows technical teams to maintain the source of truth within their existing version control systems, such as Git.
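The export and import round trip can be sketched as follows. This section does not show the .prompt or .agent formats themselves, so the example uses plain JSON as a stand-in. The `export_file` and `import_file` helpers, the file name, and the field names are all hypothetical.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical Prompt definition; the field names are assumptions.
prompt = {
    "model": "example-model",
    "temperature": 0.7,
    "template": "Summarize: {{text}}",
}


def export_file(definition: dict, path: Path) -> None:
    """Write a definition to disk in a stable, diff-friendly form."""
    text = json.dumps(definition, indent=2, sort_keys=True) + "\n"
    path.write_text(text, encoding="utf-8")


def import_file(path: Path) -> dict:
    """Read a definition back from its serialized form."""
    return json.loads(path.read_text(encoding="utf-8"))


with tempfile.TemporaryDirectory() as tmp:
    target = Path(tmp) / "summarizer.json"
    export_file(prompt, target)
    restored = import_file(target)
```

Sorted keys and fixed indentation keep the serialized text stable between exports, which is what makes such files pleasant to review in Git diffs.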

Files support deployments

You can tag File Versions with specific Environments and target these Environments via the UI and API to facilitate robust deployment workflows.
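Conceptually, a deployment is a pointer from an Environment name to a File Version, and reverting amounts to moving that pointer back. The following is a minimal in-memory sketch of that idea, assuming a hypothetical `FileDeployments` class and made-up version and Environment names; it is not the Humanloop API.

```python
class FileDeployments:
    """Hypothetical in-memory model of tagging Versions with Environments."""

    def __init__(self):
        self.versions = []       # append-only history of version ids
        self.environments = {}   # environment name -> deployed version id

    def save_version(self, version_id: str) -> None:
        # Versions are immutable: saving an existing id is a no-op.
        if version_id not in self.versions:
            self.versions.append(version_id)

    def deploy(self, environment: str, version_id: str) -> None:
        # Only a saved Version can be deployed.
        if version_id not in self.versions:
            raise ValueError(f"unknown version: {version_id}")
        self.environments[environment] = version_id

    def resolve(self, environment: str) -> str:
        # Callers target an Environment and receive whichever Version is tagged.
        return self.environments[environment]


f = FileDeployments()
f.save_version("v1")
f.save_version("v2")
f.deploy("production", "v1")
f.deploy("staging", "v2")
f.deploy("production", "v2")  # promote v2 to production
f.deploy("production", "v1")  # revert by redeploying the earlier Version
```

Application code that resolves "production" never needs to change when a new Version is promoted or rolled back; only the tag moves.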

Function Files are callable

Function Files can be called when using the Humanloop runtime, or logged to when using your own runtime.

Example: When a Prompt is called, Humanloop acts as a proxy to the model provider, logs the output, and returns it. If you manage the model calls yourself, you can log the results to the Prompt File.

Using the Humanloop runtime is easier and lets you run Files directly in the UI, while managing your own runtime gives you more control.
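The difference between the two modes can be sketched with a toy model. In this example `PromptFile`, its `call` and `log` methods, and `fake_provider` are hypothetical stand-ins written for illustration; they are not the Humanloop SDK, and the real request and Log shapes may differ.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class PromptFile:
    """Hypothetical stand-in for a Prompt File that accumulates Logs."""
    template: str
    logs: list = field(default_factory=list)

    def call(self, provider: Callable[[str], str], **inputs) -> str:
        """Platform-runtime style: call the model, record a Log, return the output."""
        prompt = self.template.format(**inputs)
        output = provider(prompt)
        self.logs.append({"inputs": inputs, "output": output, "source": "call"})
        return output

    def log(self, inputs: dict, output: str) -> None:
        """Own-runtime style: the caller already has the output and only records it."""
        self.logs.append({"inputs": inputs, "output": output, "source": "log"})


def fake_provider(prompt: str) -> str:
    """Placeholder for a model provider; it just upper-cases the prompt."""
    return prompt.upper()


prompt_file = PromptFile(template="Say hello to {name}")

# Mode 1: the File is called, so the model call and the Log happen together.
result = prompt_file.call(fake_provider, name="Ada")

# Mode 2: the application calls the model itself, then logs the result.
own_output = fake_provider("Say hello to Grace")
prompt_file.log({"name": "Grace"}, own_output)
```

Either way the File ends up with one Log per invocation; the trade-off is who owns the model call and therefore who controls retries, timeouts, and provider configuration.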

Function Files have Logs

Every time a Function File is called, a Log is created. Logs can also be posted via the API.