Tools

Tools on Humanloop extend Agents and Prompts with access to external data sources and enable them to take actions.

Types of Tools

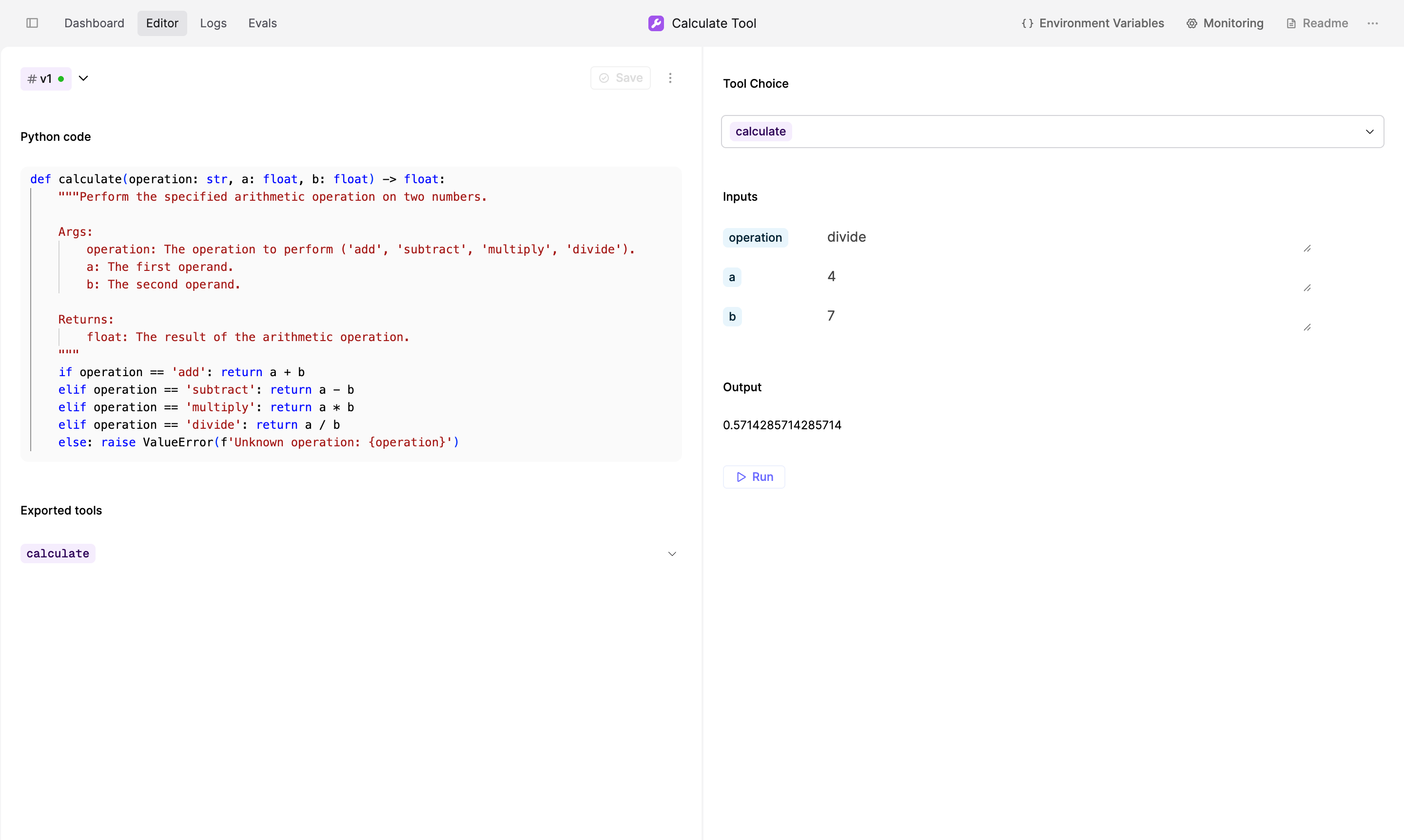

Runnable Tools

Runnable Tools can be executed by Humanloop, meaning they can form a part of an Agent’s automatic execution. Python code Tools are one example: they run in a secure Python runtime and let you write custom code to be executed.
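As a rough illustration, the code behind a Python code Tool could be as small as a single typed function. The function name, parameters, and docstring below are hypothetical, and this sketch does not show any conventions the Humanloop runtime may require.

```python
def calculator(operation: str, num1: float, num2: float) -> float:
    """Apply a basic arithmetic operation to two numbers (hypothetical example Tool)."""
    if operation == "add":
        return num1 + num2
    if operation == "subtract":
        return num1 - num2
    if operation == "multiply":
        return num1 * num2
    if operation == "divide":
        if num2 == 0:
            # Surface a clear error instead of letting ZeroDivisionError escape.
            raise ValueError("Cannot divide by zero")
        return num1 / num2
    raise ValueError(f"Unsupported operation: {operation}")
```

Type hints and a docstring are worth keeping on a function like this, since they describe the inputs a model would need to supply when calling it.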

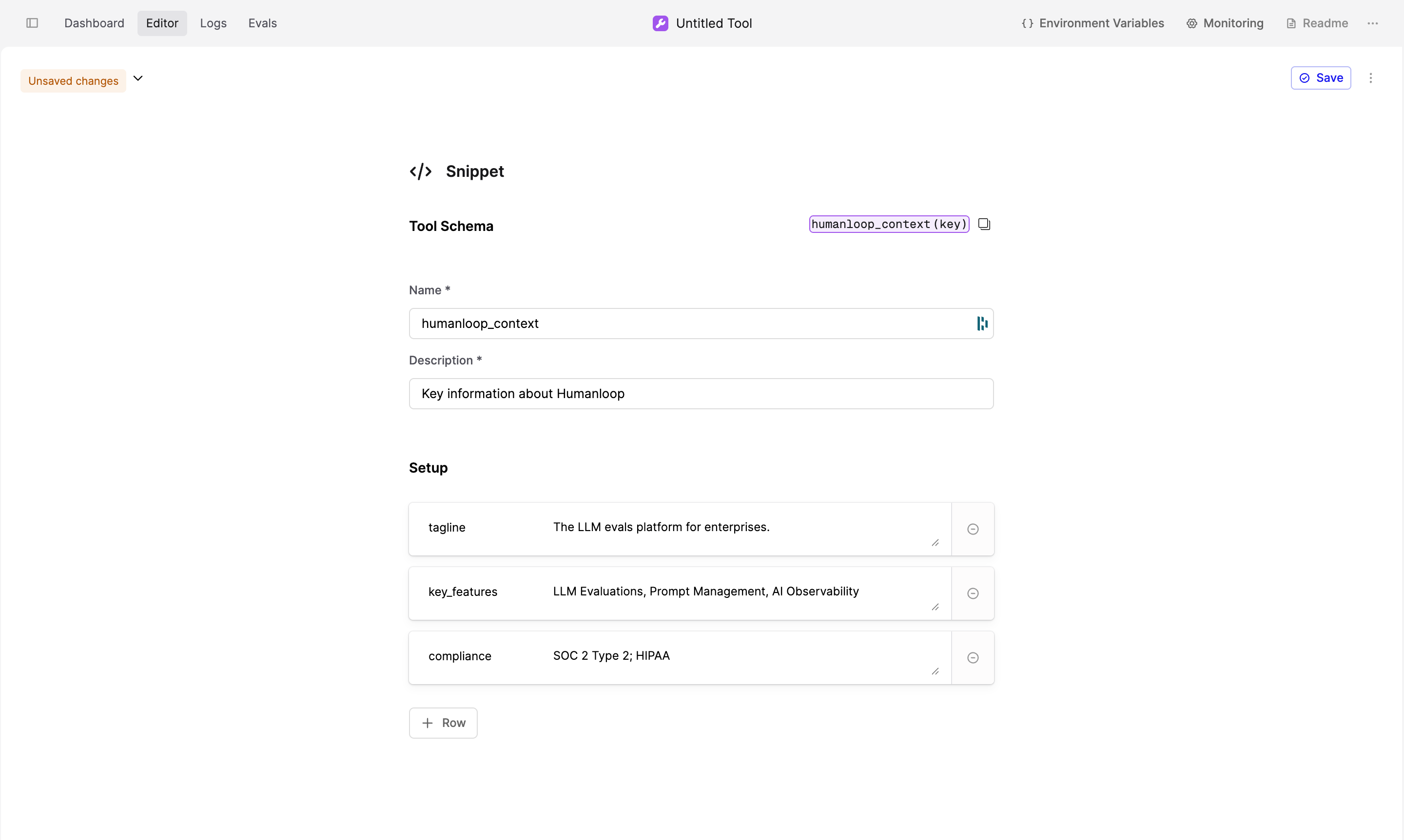

Template Tools

Template Tools can be used within a Prompt’s template to dynamically insert information for the model when called. These include:

- Snippet Tools: Reusable text components that can be shared across multiple Prompts

- Integration Tools: Pre-built connectors to popular services like Google Search and Pinecone vector database

An example Snippet Tool. This can be referred to within a Prompt template with {{humanloop_context(key)}}.

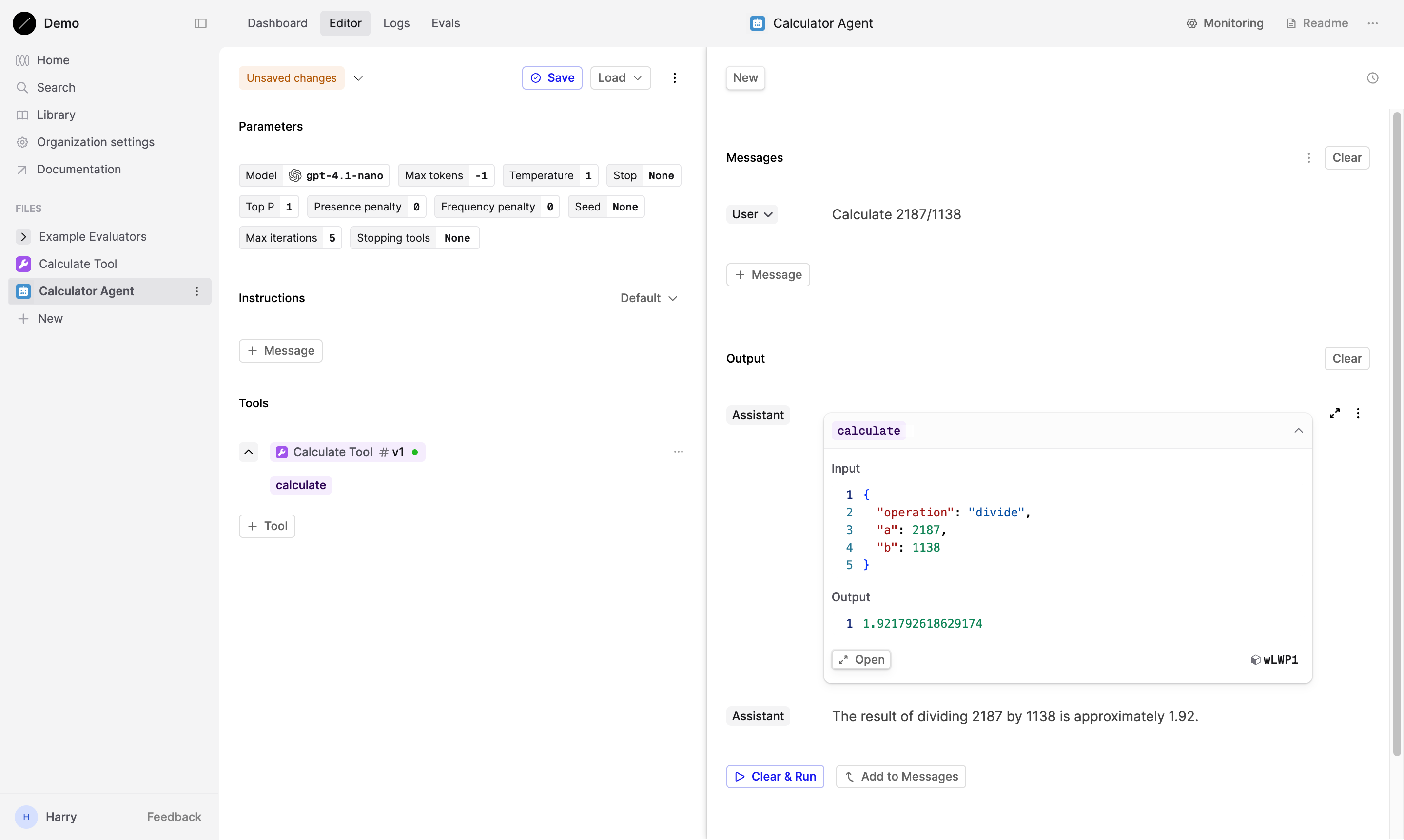

Function calling Tools

Many models, such as those by OpenAI and Anthropic, now support function calling. You can provide these models with definitions of the available tools. The model then decides whether to call a tool, and which parameters to use.

On Humanloop, JSON schema Tools record the schema definitions that are used for function calling, allowing you to tweak and version control them.
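To make this concrete, here is a sketch of the kind of definition a JSON schema Tool might hold, written as a Python dictionary. The `get_weather` tool and its fields are hypothetical; the `name` / `description` / `parameters` layout follows the convention commonly used for function calling, and the exact shape Humanloop stores is an assumption here.

```python
import json

# Hypothetical tool definition in the common function calling layout.
get_weather_schema = {
    "name": "get_weather",
    "description": "Get the current weather for a given location.",
    "parameters": {
        "type": "object",
        "properties": {
            "location": {
                "type": "string",
                "description": "City and country, e.g. 'London, UK'.",
            },
            "unit": {
                "type": "string",
                "enum": ["celsius", "fahrenheit"],
            },
        },
        # Only `location` must be supplied by the model; `unit` is optional.
        "required": ["location"],
    },
}

# Serialize to JSON, the form in which definitions are passed to a model.
schema_json = json.dumps(get_weather_schema, indent=2)
```

Keeping a definition like this under version control means a change to a description or a parameter can be tracked and compared like any other change to a Prompt.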

In addition to JSON schema Tools, all Runnable Tools and Prompts can be used for function calling. Humanloop will automatically generate the schema definitions for these when they’re linked to an Agent or Prompt.

Humanloop runtime versus your runtime

Humanloop supports running Python code Tools within the Humanloop runtime. If you have Tools that you want to run in your own environment, you can use the Humanloop API to manage versions and record Logs.

The simplest way to do this is by decorating a function with the Tool decorator in the SDK.

Running the decorated function will automatically log the inputs and output of the function to Humanloop. If you’re using the Python SDK, the @tool decorator will also infer a JSON schema from the function’s type hints.
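The idea can be sketched with a stand-in decorator written in plain Python. This is not the Humanloop SDK and makes no claim about how the SDK works internally: `logged_tool`, `infer_schema`, and the in-memory `LOGS` list are hypothetical names used only to show how recording inputs and output and inferring a schema from type hints could work.

```python
import functools
import inspect
from typing import Any, Callable, get_type_hints

# Illustrative subset of Python-to-JSON-schema type mappings.
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}

# In-memory stand-in for the Logs that a real integration would send to Humanloop.
LOGS: list = []


def infer_schema(func: Callable[..., Any]) -> dict:
    """Build a function calling schema from a function's signature and type hints."""
    hints = get_type_hints(func)
    params = inspect.signature(func).parameters
    properties = {
        # Unannotated or unmapped types fall back to "string" in this sketch.
        name: {"type": _JSON_TYPES.get(hints.get(name), "string")}
        for name in params
    }
    # Parameters without a default value are treated as required.
    required = [
        name for name, p in params.items() if p.default is inspect.Parameter.empty
    ]
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


def logged_tool(func: Callable[..., Any]) -> Callable[..., Any]:
    """Hypothetical decorator: attach an inferred schema and record every call."""
    signature = inspect.signature(func)

    @functools.wraps(func)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        # Resolve positional and keyword arguments into a name -> value mapping.
        bound = signature.bind(*args, **kwargs)
        bound.apply_defaults()
        output = func(*args, **kwargs)
        LOGS.append(
            {"tool": func.__name__, "inputs": dict(bound.arguments), "output": output}
        )
        return output

    wrapper.json_schema = infer_schema(func)
    return wrapper


@logged_tool
def add(a: int, b: int = 1) -> int:
    """Add two integers."""
    return a + b
```

Calling `add(2, b=3)` here appends one entry to `LOGS` with the resolved inputs and the return value, and `add.json_schema` holds the inferred definition. With the real SDK the Logs are sent to Humanloop rather than kept in a local list.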