Evaluating externally generated Logs

If running your infrastructure to generate logs, you can still leverage the Humanloop evaluations suite via our API. The workflow looks like this:

- Trigger the creation of an evaluation run

- Loop through the datapoints in your dataset and perform generations on your side

- Post the generated logs to the evaluation run

This works with any evaluator - if you have configured a Humanloop-runtime evaluator, these will be automatically run on each log you post to the evaluation run; or, you can use self-hosted evaluators and post the results to the evaluation run yourself (see Self-hosted evaluations).

Prerequisites

- You need to have access to evaluations

- You also need to have a project created - if not, please first follow our project creation guides.

- You need to have a dataset in your project. See our dataset creation guide if you don’t yet have one.

- You need a model configuration to evaluate, so create one in the Editor.

Setting up the script

Set up the model config you are evaluating

If you constructed this in Humanloop, retrieve it by calling:

Alternatively, if your model config lives outside the Humanloop system, post it to Humanloop with the register model config endpoint.

Either way, you need the ID of the config.

In the Humanloop app, create an evaluator

We’ll create a Valid JSON checker for this guide.

- Visit the Evaluations tab, and select Evaluators

- Click + New Evaluator and choose Code from the options.

- Select the Valid JSON preset on the left.

- Choose the mode Offline in the settings panel on the left.

- Click Create.

- Copy your new evaluator’s ID from the address bar. It starts with

evfn_.

Create an evaluation run with hl_generated set to False

This tells the Humanloop runtime that it should not trigger evaluations but wait for them to be posted via the API.

By default, the evaluation status after creation is pending. Before sending the generation logs, set the status to running.

Iterate through the datapoints in the dataset, produce a generation and post the evaluation

Run the full script above.

If everything goes well, you should now have posted a new evaluation run to Humanloop and logged all the generations derived from the underlying datapoints.

The Humanloop evaluation runtime will now iterate through those logs and run the Valid JSON evaluator on each. To check progress:

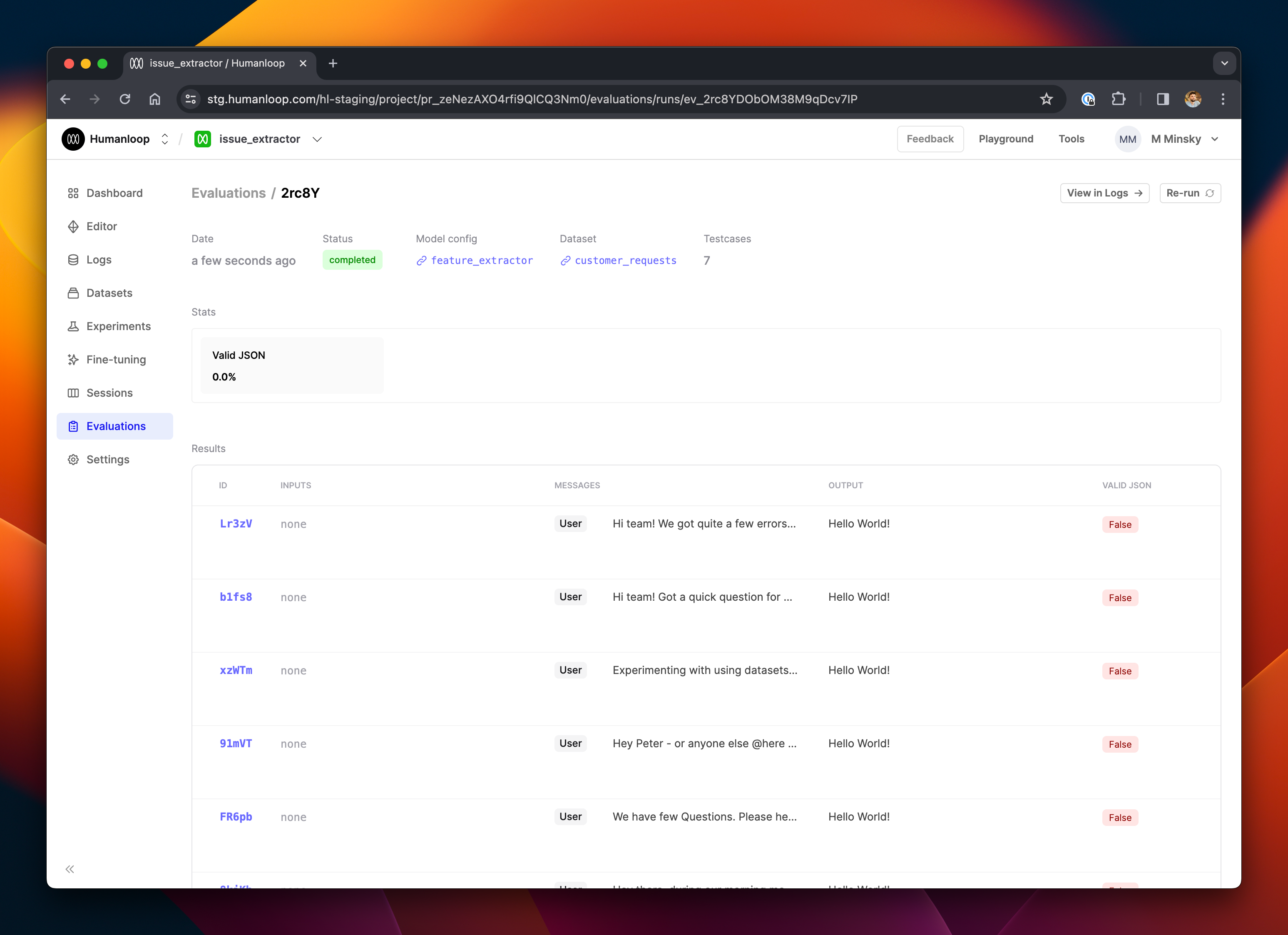

Visit your project in the Humanloop app and go to the Evaluations tab.

You should see the run you recently created; click through to it, and you’ll see rows in the table showing the generations.

In this case, all the evaluations returned False because the “Hello World!” string wasn’t valid JSON. Try logging something valid JSON to check that everything works as expected.

Full Script

For reference, here’s the full script to get started quickly.

It’s also a good practice to wrap the above code in a try-except block and to

mark the evaluation run as failed (using update_status) if an exception

causes something to fail.