Evaluating with human feedback

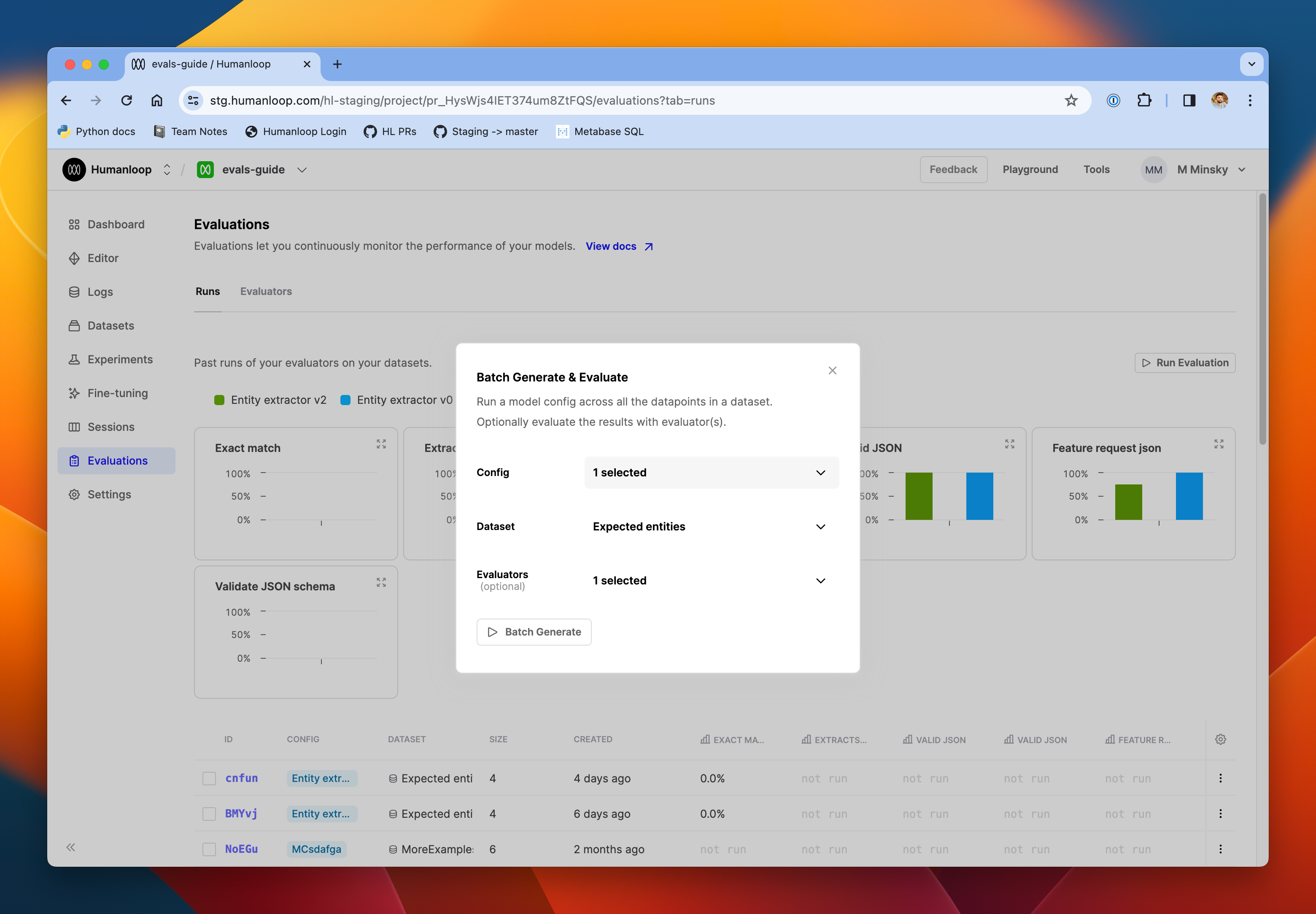

This guide shows how to run a batch generation over a dataset and collect manual human feedback on the generated outputs.

Prerequisites

- You need access to evaluations.

- You also need a Prompt. If you don't have one yet, follow our Prompt creation guide.

- Finally, you need at least a few Logs in your project. If you don't have any yet, use the Editor to generate some.

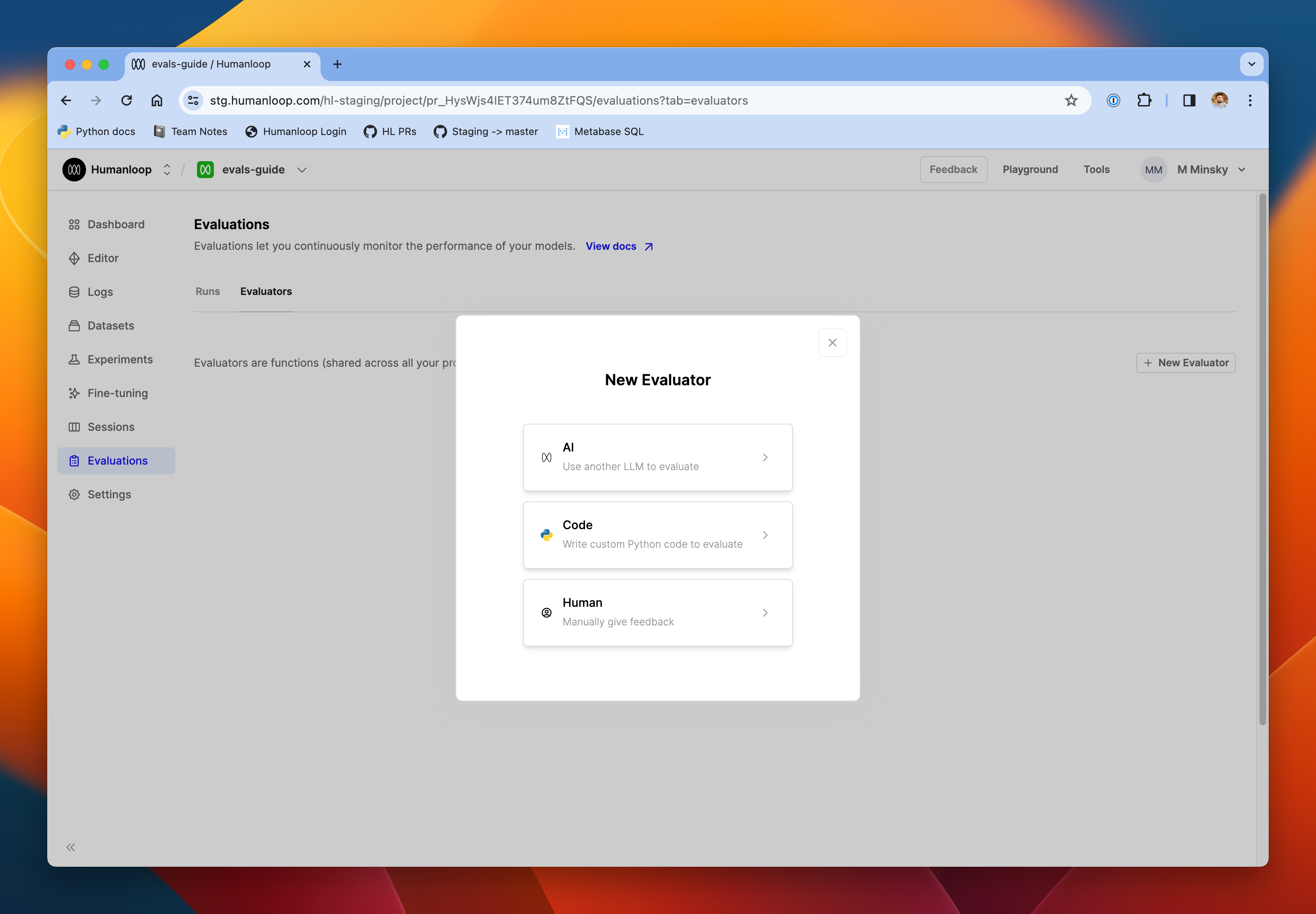

Set up an evaluator to collect human feedback

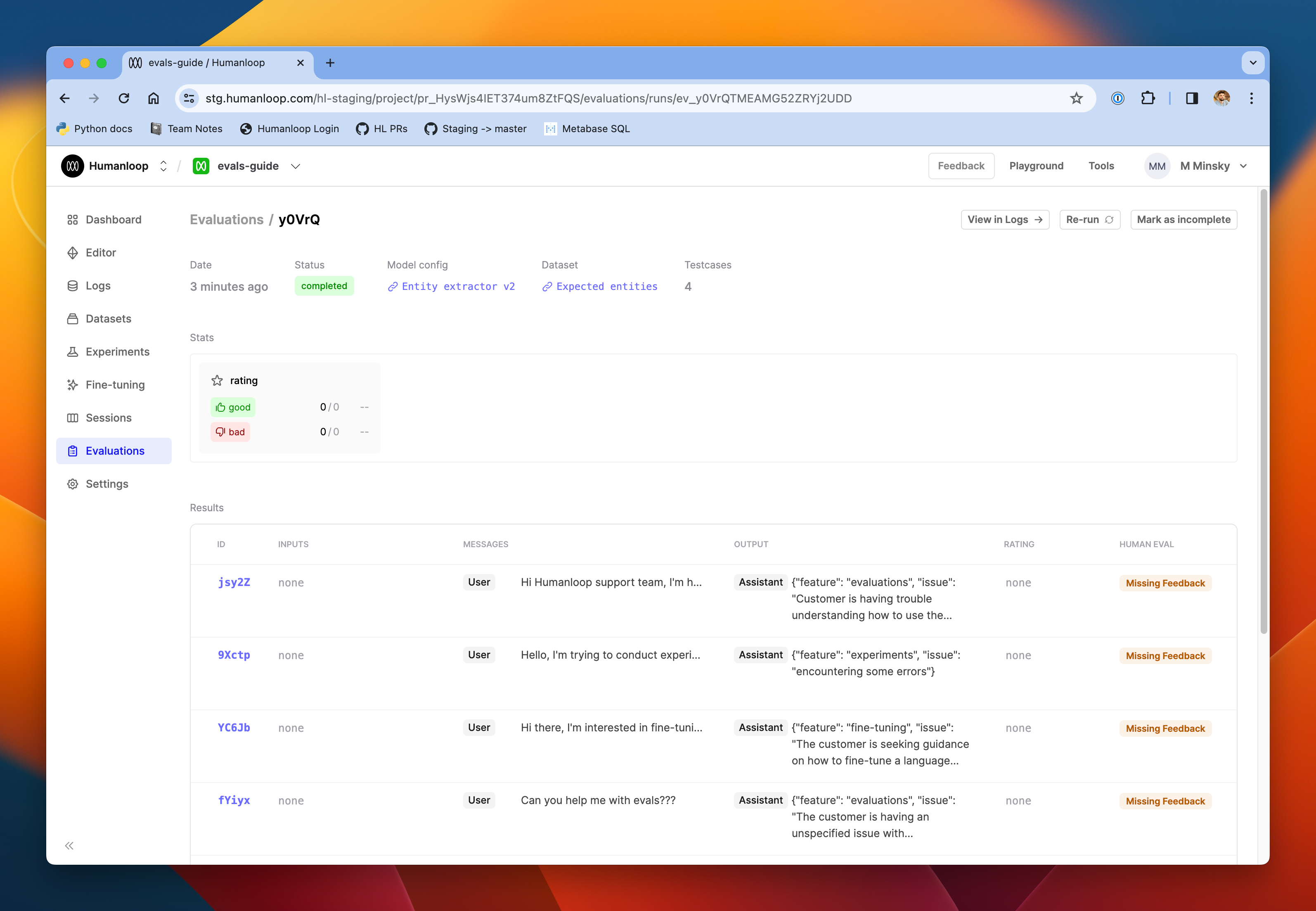

Choose the model config you want to evaluate and the dataset you want to evaluate it against, then select the new Human evaluator.
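Conceptually, a run like this produces one row per datapoint: the model config generates an output, and the rating stays empty until a person reviews it. The sketch below illustrates that idea in plain Python. It is not the Humanloop API: `Row`, `run_batch_generation`, and `fake_model` are hypothetical names, and the stub model stands in for the model config under evaluation.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Row:
    """One row of a hypothetical evaluation run: inputs, model output, and a rating slot."""
    inputs: dict
    output: str
    rating: Optional[str] = None  # left empty until a human reviewer fills it in


def run_batch_generation(dataset: list[dict], generate: Callable[[dict], str]) -> list[Row]:
    """Generate one output per datapoint; every rating starts empty for manual review."""
    return [Row(inputs=datapoint, output=generate(datapoint)) for datapoint in dataset]


def fake_model(inputs: dict) -> str:
    # Hypothetical stand-in for the model config being evaluated.
    return f"Summary of: {inputs['text']}"


if __name__ == "__main__":
    dataset = [{"text": "first document"}, {"text": "second document"}]
    for row in run_batch_generation(dataset, fake_model):
        print(row.output, row.rating)
```

Keeping the rating as an explicit empty field makes it easy to tell which rows still need a reviewer's attention.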

View the details

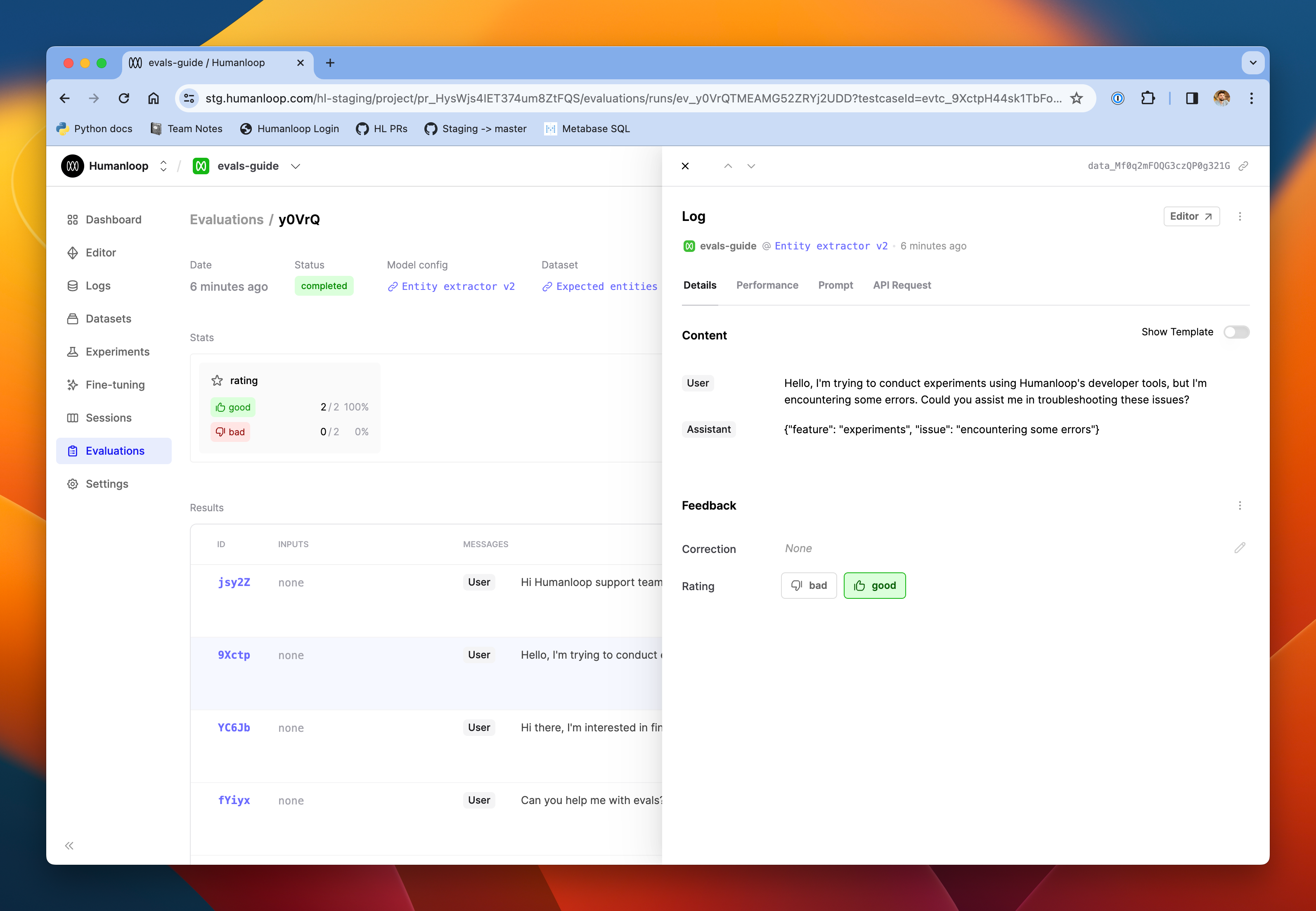

As the rows populate with the model's generated outputs, review each output and apply feedback in the rating column. Click a row to open the full details of its Log in a drawer.
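Once some rows are rated, a quick tally shows how the model config is doing and how much review work remains. The sketch below assumes ratings take the values `"good"` and `"bad"`, with `None` marking an unrated row; both the values and the `summarize_ratings` helper are hypothetical and are not part of the product or its API.

```python
from collections import Counter
from typing import Optional


def summarize_ratings(ratings: list[Optional[str]]) -> dict:
    """Count collected ratings and how many rows still await review.

    `None` marks a row nobody has rated yet. The "good"/"bad" values are
    an assumption for illustration.
    """
    reviewed = [r for r in ratings if r is not None]
    counts = Counter(reviewed)
    return {
        "counts": dict(counts),
        "pending": len(ratings) - len(reviewed),
        # Share of reviewed rows rated "good"; None if nothing is rated yet.
        "good_rate": counts["good"] / len(reviewed) if reviewed else None,
    }


if __name__ == "__main__":
    # Ratings as they might look partway through a review session.
    print(summarize_ratings(["good", "bad", None, "good"]))
```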

Configuring the feedback schema

If you need a more complex feedback schema, open your project's Settings page and follow the link to Feedbacks. There you can add more categories to the default feedback types. For more control over feedback types, you can create new ones via the API.
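To make the idea of extending a feedback type concrete, the sketch below models a categorical feedback type as a name plus a set of allowed values, adds a custom category alongside the defaults, and rejects values outside the schema. This is a hypothetical local model only: `FeedbackType`, `add_categories`, `validate`, and the `"good"`/`"bad"` defaults are assumptions for illustration, not the product's API. Use the Feedbacks settings page or the API to change the real schema.

```python
from dataclasses import dataclass, field


@dataclass
class FeedbackType:
    """A hypothetical categorical feedback type: a name plus its allowed values."""
    name: str
    values: set[str] = field(default_factory=set)

    def add_categories(self, *new_values: str) -> None:
        # Extend the existing categories rather than replacing them.
        self.values.update(new_values)

    def validate(self, value: str) -> str:
        # Reject any value that the schema does not define.
        if value not in self.values:
            raise ValueError(
                f"{value!r} is not a valid {self.name} value; "
                f"expected one of {sorted(self.values)}"
            )
        return value


if __name__ == "__main__":
    rating = FeedbackType("rating", {"good", "bad"})  # assumed default categories
    rating.add_categories("needs_review")
    print(rating.validate("needs_review"))
```

Validating against an explicit set of categories keeps reviewers' feedback consistent, which matters once you start aggregating it across runs.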