Set up Monitoring

In this guide, we will demonstrate how to create and use online evaluators to observe the performance of your models.

Paid Feature

This feature is not available on the Free tier. Please contact us if you would like to learn more about our Enterprise plan.

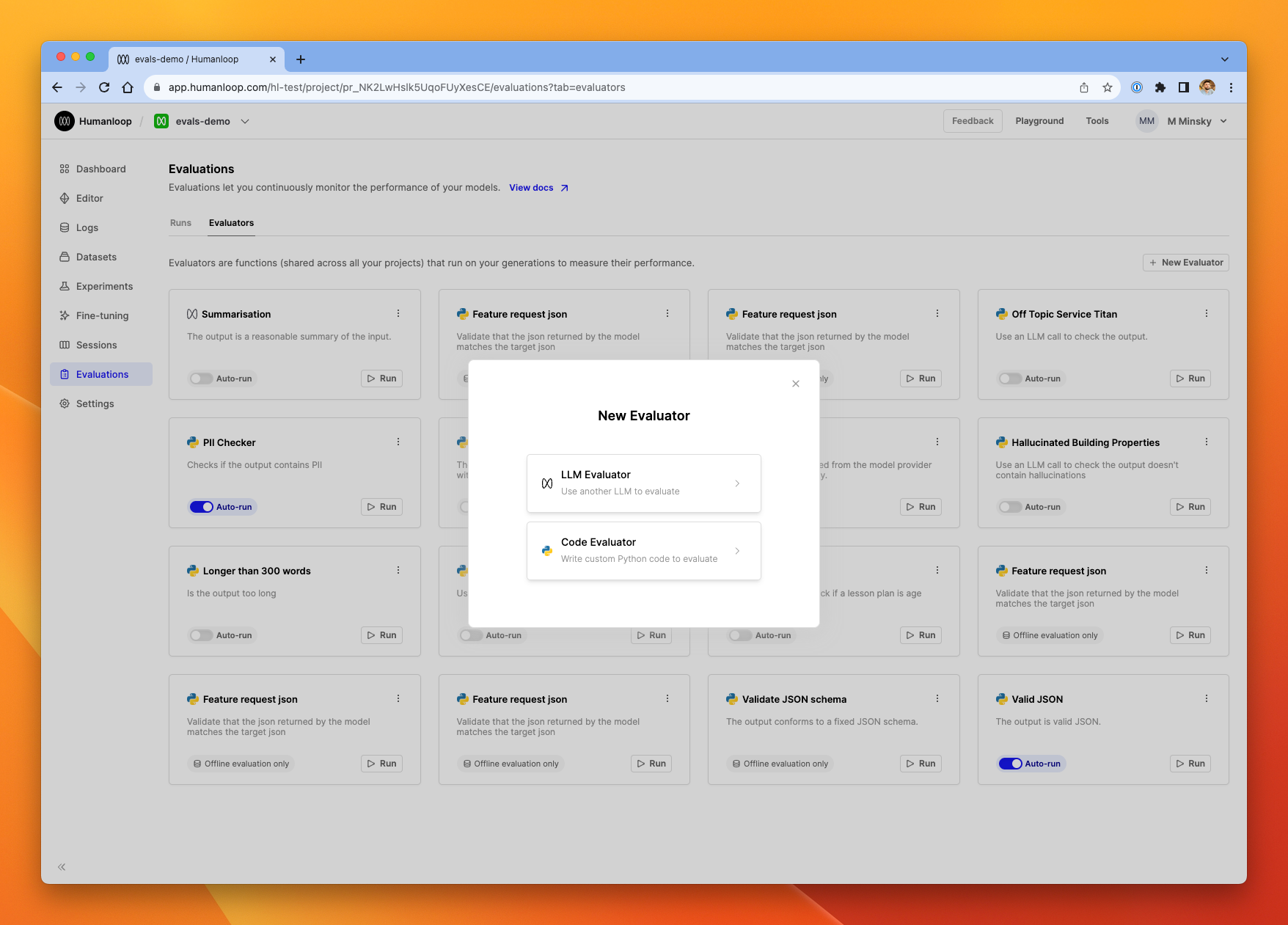

Create an online evaluator

Prerequisites

- You need to have access to evaluations.

- You also need a Prompt. If you don’t have one yet, please follow our Prompt creation guide.

- Finally, you need at least a few logs in your project. Use the Editor to generate some logs if you don’t have any yet.

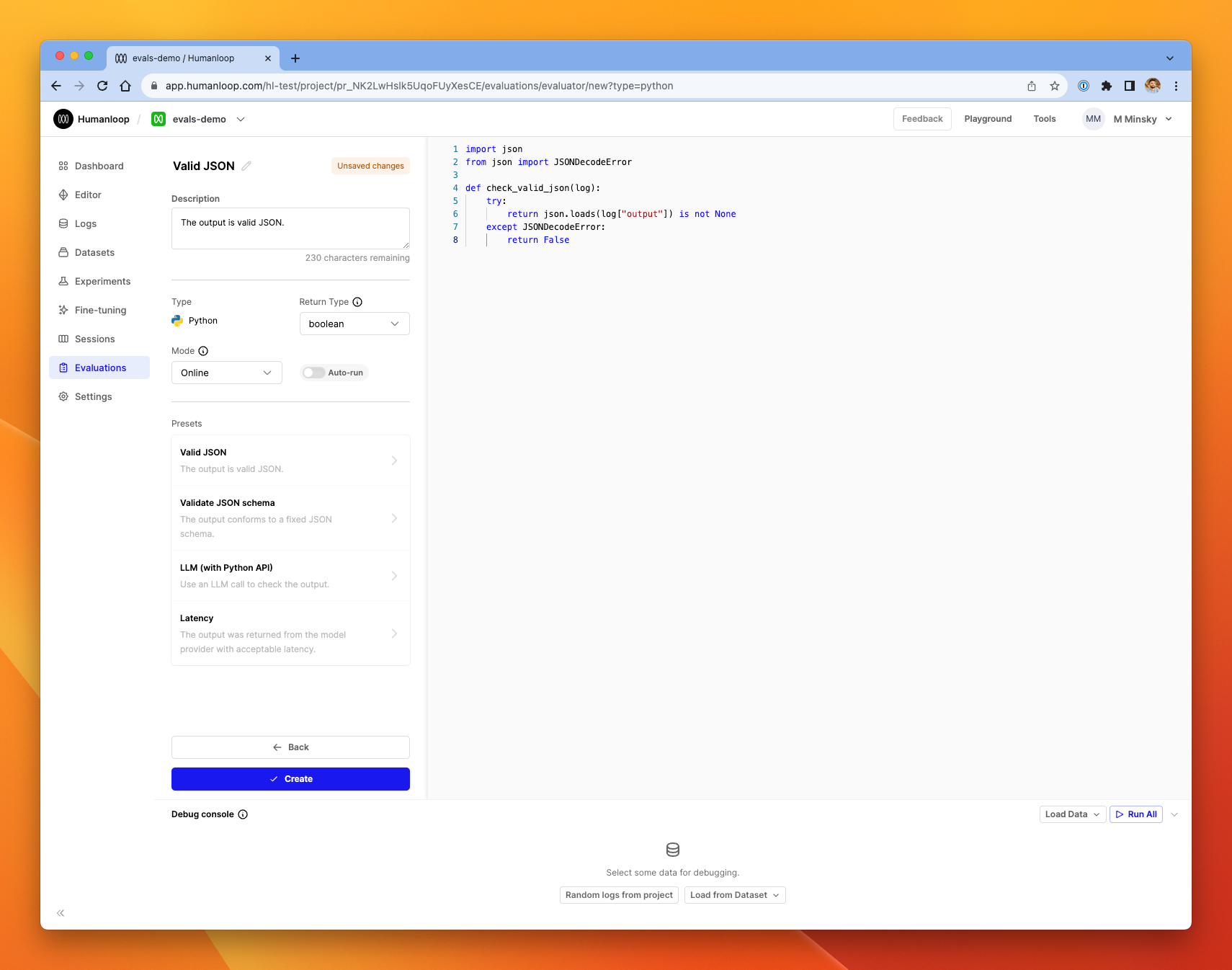

To set up an online Python evaluator:

From the library of presets on the left-hand side, we’ll choose Valid JSON for this guide. You’ll see a pre-populated evaluator with Python code that checks whether the model’s output is valid JSON.
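For orientation, a Valid JSON check boils down to trying to parse the model’s output and returning a boolean. The sketch below is not the preset’s exact code: the function name `valid_json` and the assumption that the evaluator receives the log as a dict with the generated text under an `"output"` key are illustrative only, so compare it against the pre-populated code in the dialog.

```python
import json


def valid_json(log: dict) -> bool:
    """Return True if the log's output parses as JSON, False otherwise.

    Assumption (hypothetical): the log is a dict and the model's
    generated text is stored under the "output" key.
    """
    output = log.get("output")
    if not isinstance(output, str):
        # A missing or non-text output cannot be valid JSON text.
        return False
    try:
        json.loads(output)
    except json.JSONDecodeError:
        # Raised for anything that is not well-formed JSON, including "".
        return False
    return True
```

For example, `valid_json({"output": '{"answer": 42}'})` returns `True`, while `valid_json({"output": "answer: 42"})` returns `False`.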

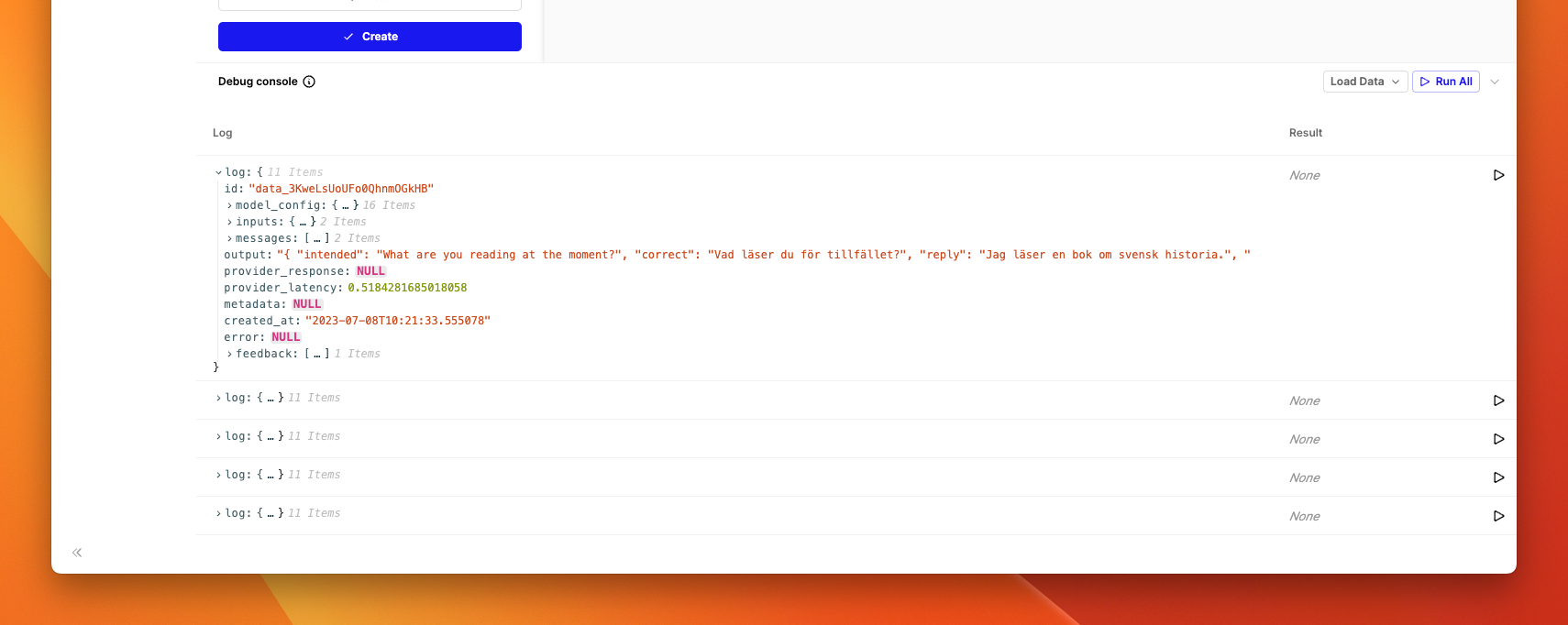

In the debug console at the bottom of the dialog, click Random logs from project. The console will be populated with five datapoints from your project.

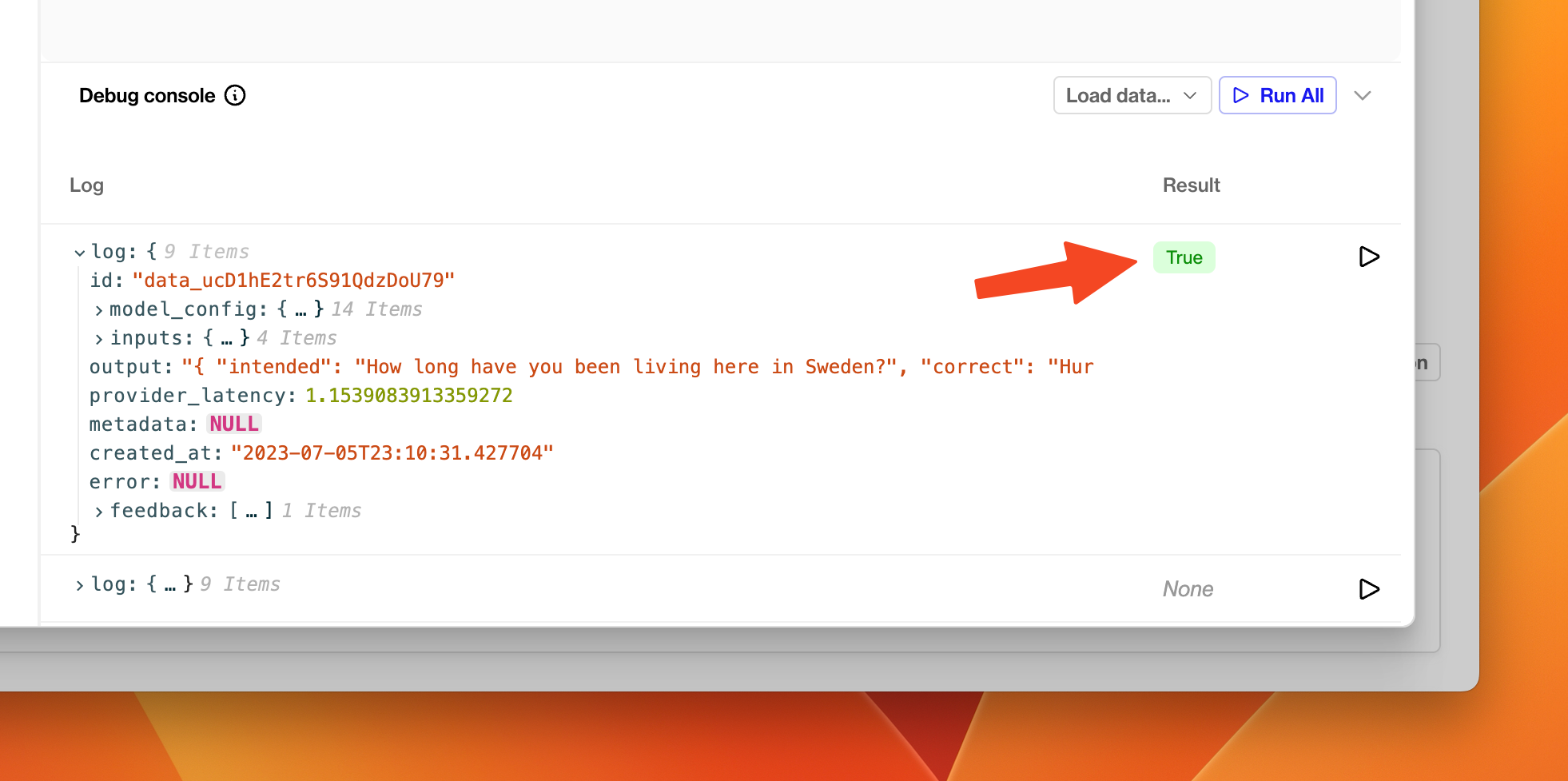

Click the Run button at the far right of one of the log rows. After a moment, you’ll see the Result column populated with True or False.
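Conceptually, running the evaluator in the debug console means calling it once per log and recording the boolean it returns in the Result column. The loop below is a local approximation for experimenting outside the app, not Humanloop’s implementation; the sample logs and their `id` and `output` keys are hypothetical stand-ins for the logs pulled from your project.

```python
import json


def valid_json(log: dict) -> bool:
    # Same idea as the Valid JSON preset: does the "output" text parse as JSON?
    try:
        json.loads(log["output"])
    except (KeyError, TypeError, json.JSONDecodeError):
        # Missing key, non-string output, or malformed JSON all count as a failure.
        return False
    return True


# Hypothetical logs standing in for "Random logs from project".
sample_logs = [
    {"id": "log_1", "output": '{"city": "Paris"}'},
    {"id": "log_2", "output": "The city is Paris."},
    {"id": "log_3", "output": '["Paris", "London"]'},
]

# One result per log, mirroring the Result column in the console.
results = {log["id"]: valid_json(log) for log in sample_logs}
for log_id, result in results.items():
    print(f"{log_id}: {result}")
```

Here `log_2` is plain prose rather than JSON, so it is the only row that comes back `False`.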

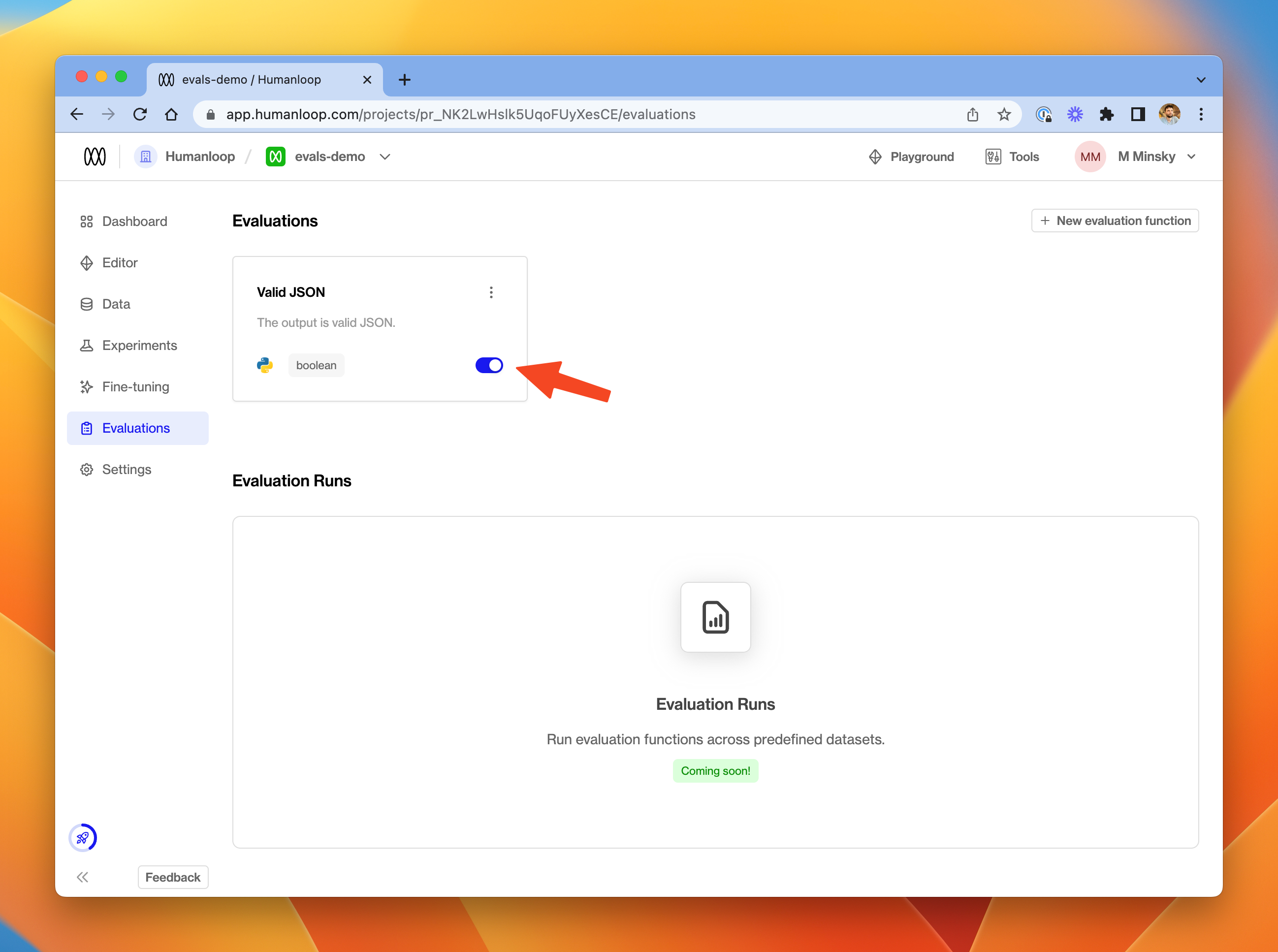

Activate an evaluator for a project

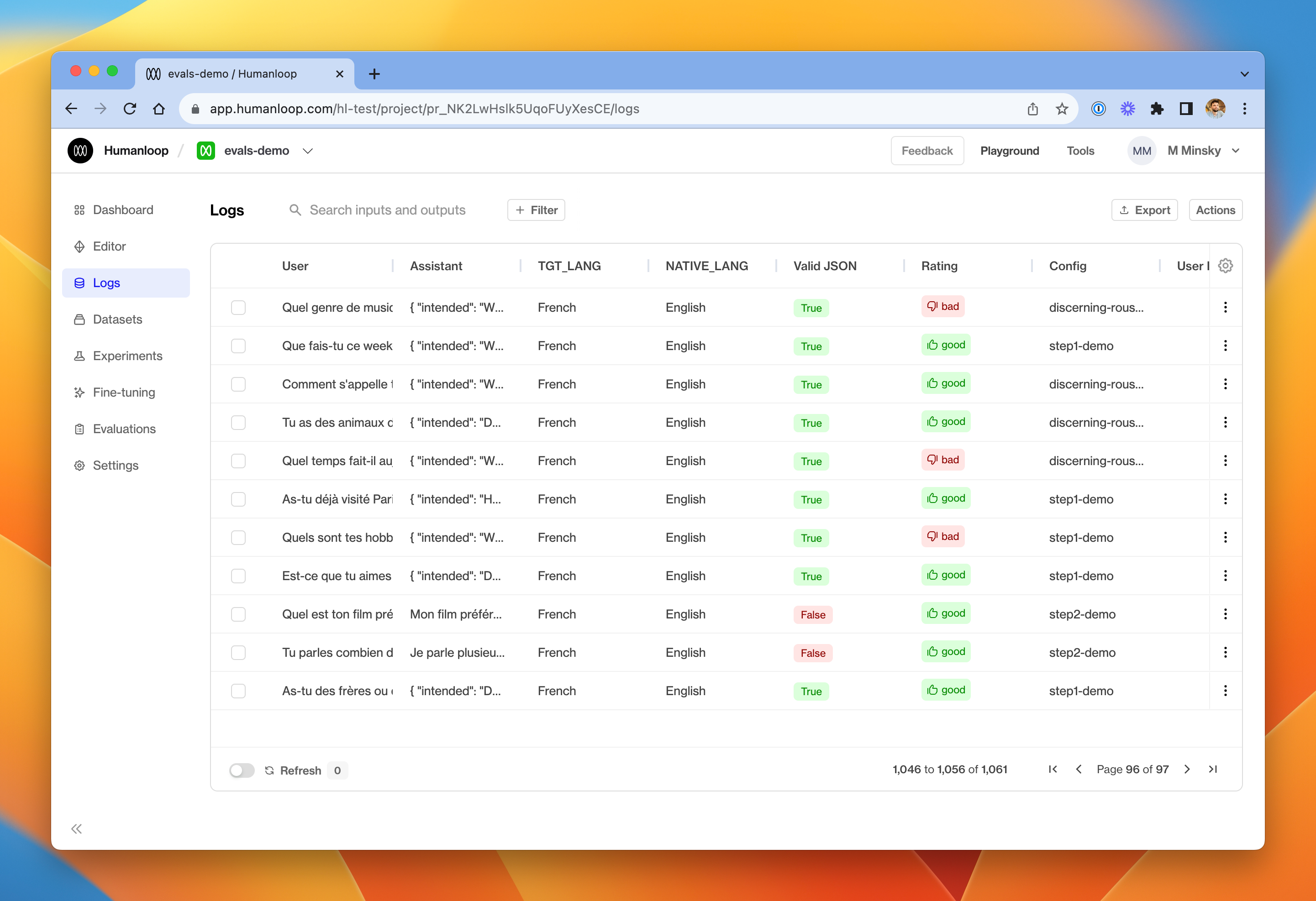

Track the performance of models

Prerequisites

- A Humanloop project with a reasonable amount of data.

- An Evaluator activated in that project.

To track the performance of different model configs in your project:

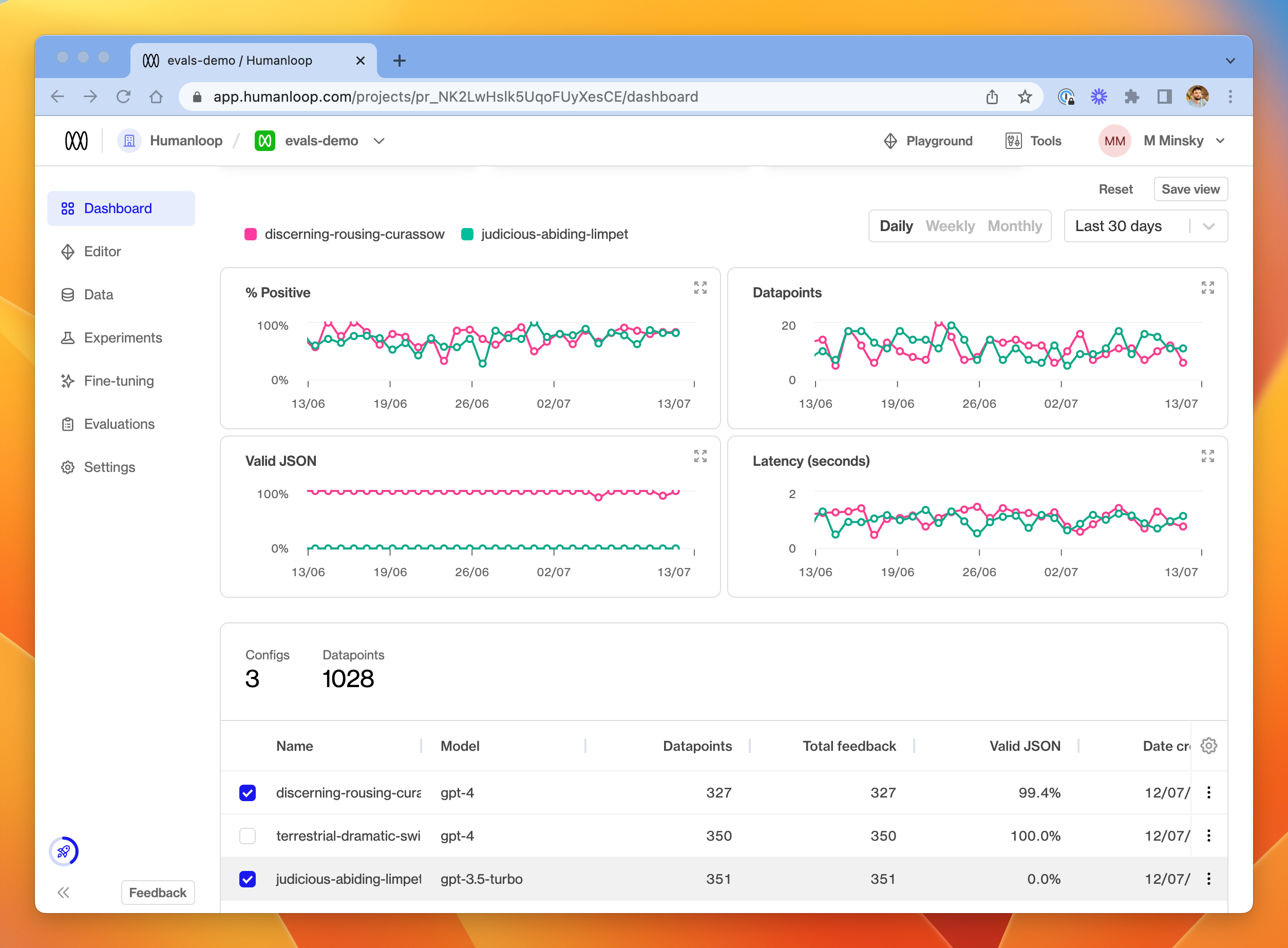

Go to the Dashboard tab.

In the table of model configs at the bottom, choose a subset of the project’s model configs.
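To make the comparison concrete: a boolean evaluator such as Valid JSON lends itself to a summary like "share of logs that passed" for each model config. The sketch below only illustrates that kind of aggregation; it is an assumption, not a description of how Humanloop computes its Dashboard figures, and the config IDs and results are hypothetical.

```python
from collections import defaultdict


def pass_rates(evaluations):
    """Fraction of True evaluator results per model config.

    `evaluations` is an iterable of (model_config_id, result) pairs,
    where result is the boolean an evaluator returned for one log.
    """
    totals = defaultdict(int)
    passes = defaultdict(int)
    for config_id, result in evaluations:
        totals[config_id] += 1
        if result:
            passes[config_id] += 1
    return {cid: passes[cid] / totals[cid] for cid in totals}


# Hypothetical evaluator results for two model configs in one project.
evaluations = [
    ("config_a", True), ("config_a", True), ("config_a", False), ("config_a", True),
    ("config_b", True), ("config_b", False),
]
print(pass_rates(evaluations))  # {'config_a': 0.75, 'config_b': 0.5}
```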

Available Modules

The following Python modules are available to be imported in your code evaluators:

- re

- math

- random

- datetime

- json (useful for validating JSON grammar, as in the example above)

- jsonschema (useful for more fine-grained validation of JSON output; see the in-app example)

- sqlglot (useful for validating SQL query grammar)

- requests (useful for making further LLM calls as part of your evaluation; see the in-app example for a suggestion of how to get started)
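Several of these modules can be combined in a single evaluator. The sketch below uses only `json`, `re` and `datetime` to go beyond the Valid JSON preset by also checking the shape of the output. The required `name` and `date` fields, the `valid_event` function name and the `log["output"]` access are hypothetical choices for illustration; for larger or nested schemas, `jsonschema` is likely the better fit.

```python
import json
import re
from datetime import datetime

# Hypothetical requirement for this example: the output must be a JSON
# object with a non-empty "name" string and a "date" in YYYY-MM-DD form.
DATE_PATTERN = re.compile(r"\d{4}-\d{2}-\d{2}")


def valid_event(log: dict) -> bool:
    try:
        data = json.loads(log["output"])
    except (KeyError, TypeError, json.JSONDecodeError):
        return False
    if not isinstance(data, dict):
        return False
    name = data.get("name")
    date = data.get("date")
    if not isinstance(name, str) or not name.strip():
        return False
    # The regex checks only the shape of the date string...
    if not isinstance(date, str) or not DATE_PATTERN.fullmatch(date):
        return False
    # ...while datetime rejects impossible dates such as 2024-02-30.
    try:
        datetime.strptime(date, "%Y-%m-%d")
    except ValueError:
        return False
    return True
```

With this check, `{"name": "Launch", "date": "2024-03-01"}` passes, while the same object with `"date": "2024-02-30"` fails even though it is valid JSON.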