Evaluate external logs

This guide demonstrates how to run an Evaluation on Humanloop using your own logs. This is useful if you have existing logs in an external system and want to evaluate them on Humanloop with minimal setup.

In this guide, we will use the example of a JSON file containing chat messages between users and customer support agents. This guide walks you through uploading these logs to Humanloop and creating an Evaluation with them.

Prerequisites

The code in this guide uses the Python SDK. To follow along, you will need to have the SDK installed and configured. While the code snippets are in Python, the same steps can be performed using the TypeScript SDK or directly via the API. In either case, you will need a Humanloop API key.

Install and initialize the SDK

First, install and initialize the SDK. If you have already done this, skip to the next section.

Open up your terminal and follow these steps:

- Install the Humanloop SDK: pip install humanloop

- Initialize the SDK with your Humanloop API key (you can get it from the Organization Settings page).

The example JSON data in this guide can be found in the Humanloop Cookbook.

To continue with the code in this guide, download conversations-a.json and conversations-b.json from the assets folder.

Evaluate your external logs

We’ll start by loading data from conversations-a.json, which represents logs recorded by an external system.

In this example, data is a list of conversations: chat messages exchanged between users and a support agent.
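Before uploading, it can help to check that the file has the shape you expect. The sketch below assumes (hypothetically; inspect the Cookbook files to confirm) that conversations-a.json is a JSON array in which each conversation is a list of message objects with role and content keys. The helper names load_conversations and validate_conversations are illustrative, not part of the Humanloop SDK.

```python
from __future__ import annotations

import json
from pathlib import Path
from typing import Any

# Assumed file layout (hypothetical; check the Cookbook files to confirm):
# a JSON array of conversations, each a list of {"role": ..., "content": ...} dicts.
Message = dict[str, Any]
Conversation = list[Message]


def validate_conversations(data: Any) -> list[Conversation]:
    """Raise ValueError unless data is a list of non-empty message lists."""
    if not isinstance(data, list):
        raise ValueError("expected a JSON array of conversations")
    for i, conversation in enumerate(data):
        if not isinstance(conversation, list) or not conversation:
            raise ValueError(f"conversation {i} is not a non-empty list of messages")
        for j, message in enumerate(conversation):
            # Each message needs at least a role and some content.
            if not isinstance(message, dict) or "role" not in message or "content" not in message:
                raise ValueError(f"message {j} in conversation {i} lacks 'role' or 'content'")
    return data


def load_conversations(path: str | Path) -> list[Conversation]:
    """Load conversations from a JSON file and check their basic shape."""
    with open(path, encoding="utf-8") as f:
        return validate_conversations(json.load(f))
```

Usage: data = load_conversations("conversations-a.json"). Failing early on a malformed file is cheaper than discovering incomplete Logs on Humanloop after the upload.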

Upload logs to Humanloop

Follow these steps if you do not already have an Evaluation on Humanloop. If you already have an Evaluation and want to add a new set of logs to it, the Upload new logs section below demonstrates a simpler process.

Upload the logs with the log(...) method. This automatically creates a Flow on Humanloop.

We also pass in attributes identifying the configuration of the system that generated these logs. attributes accepts arbitrary values and is used to version the Flow. Here, it associates this set of logs with a specific version of the support agent.
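Because every log in this set should belong to the same Flow version, each upload carries the same attributes. The sketch below only builds the per-conversation arguments and does not call the SDK. The key names path, flow and messages are assumptions about what log(...) accepts, and the attribute values in AGENT_ATTRIBUTES are hypothetical; check both against the SDK reference before use.

```python
from __future__ import annotations

import copy
from typing import Any

# Hypothetical attributes describing the system that produced the logs.
# attributes accepts arbitrary values; these keys are illustrative only.
AGENT_ATTRIBUTES: dict[str, Any] = {
    "agent": "support-agent",
    "version": "v1",
}


def build_log_requests(
    conversations: list[list[dict[str, Any]]],
    attributes: dict[str, Any],
    path: str,
) -> list[dict[str, Any]]:
    """Return one keyword-argument dict per conversation for a log(...) call.

    Every request carries an identical (deep-copied) set of attributes, so
    all of these logs should map to the same Flow version. The key names
    are assumptions about the SDK's log(...) parameters.
    """
    return [
        {
            "path": path,
            "flow": {"attributes": copy.deepcopy(attributes)},
            "messages": conversation,
        }
        for conversation in conversations
    ]
```

Each resulting dict could then be passed to the SDK's log(...) method as keyword arguments, e.g. log(**request). Copying the attributes per request keeps later mutations of one request from silently changing the others.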

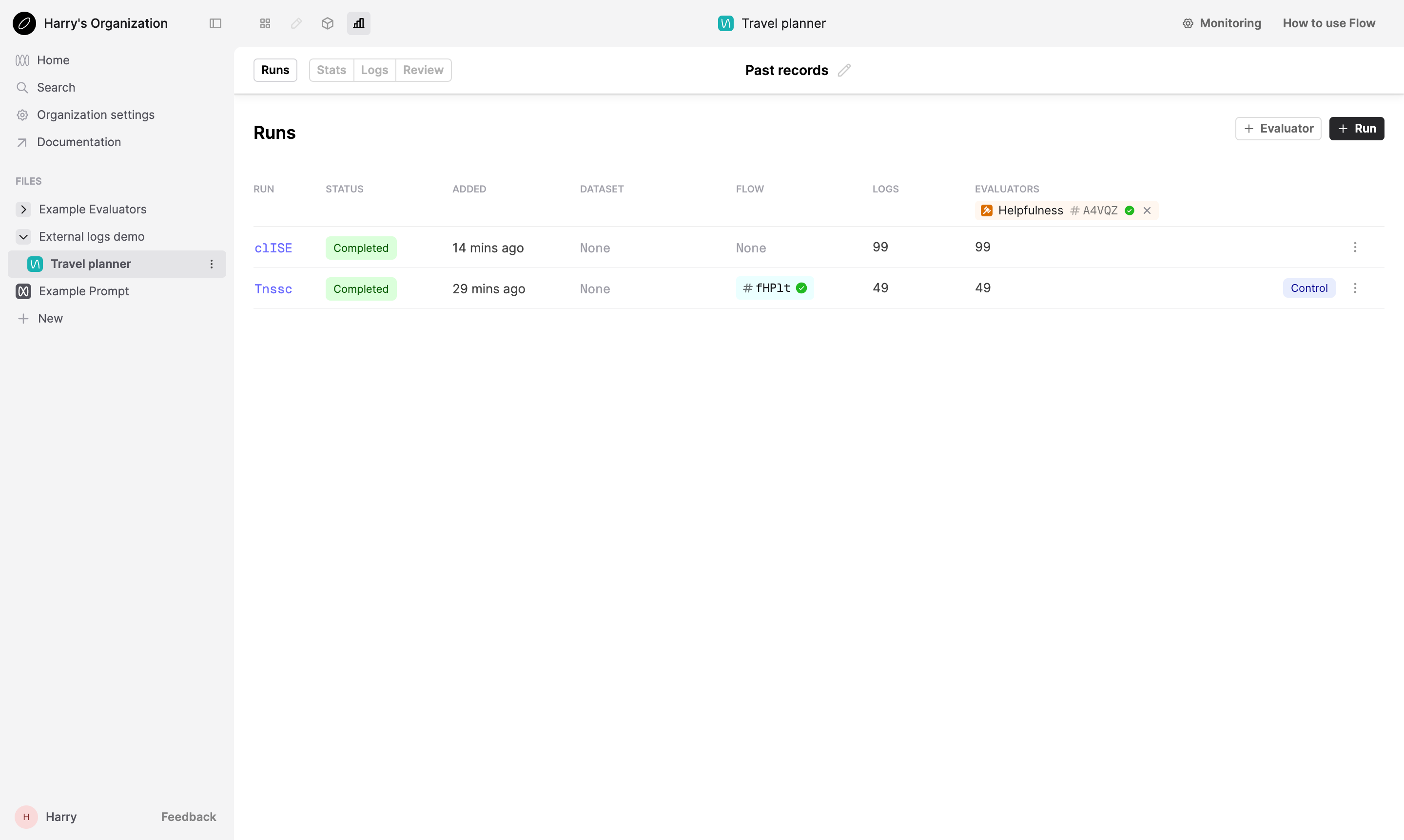

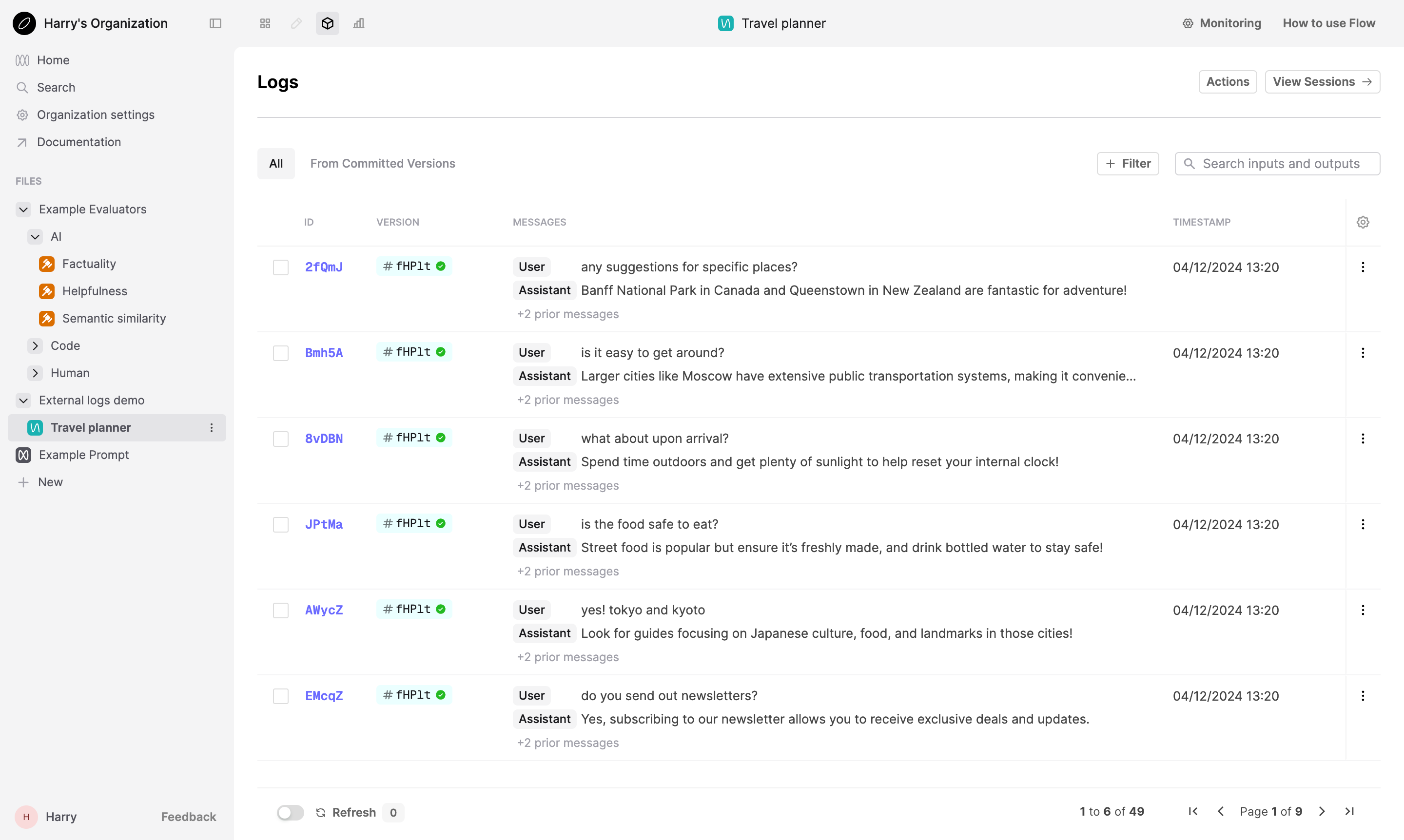

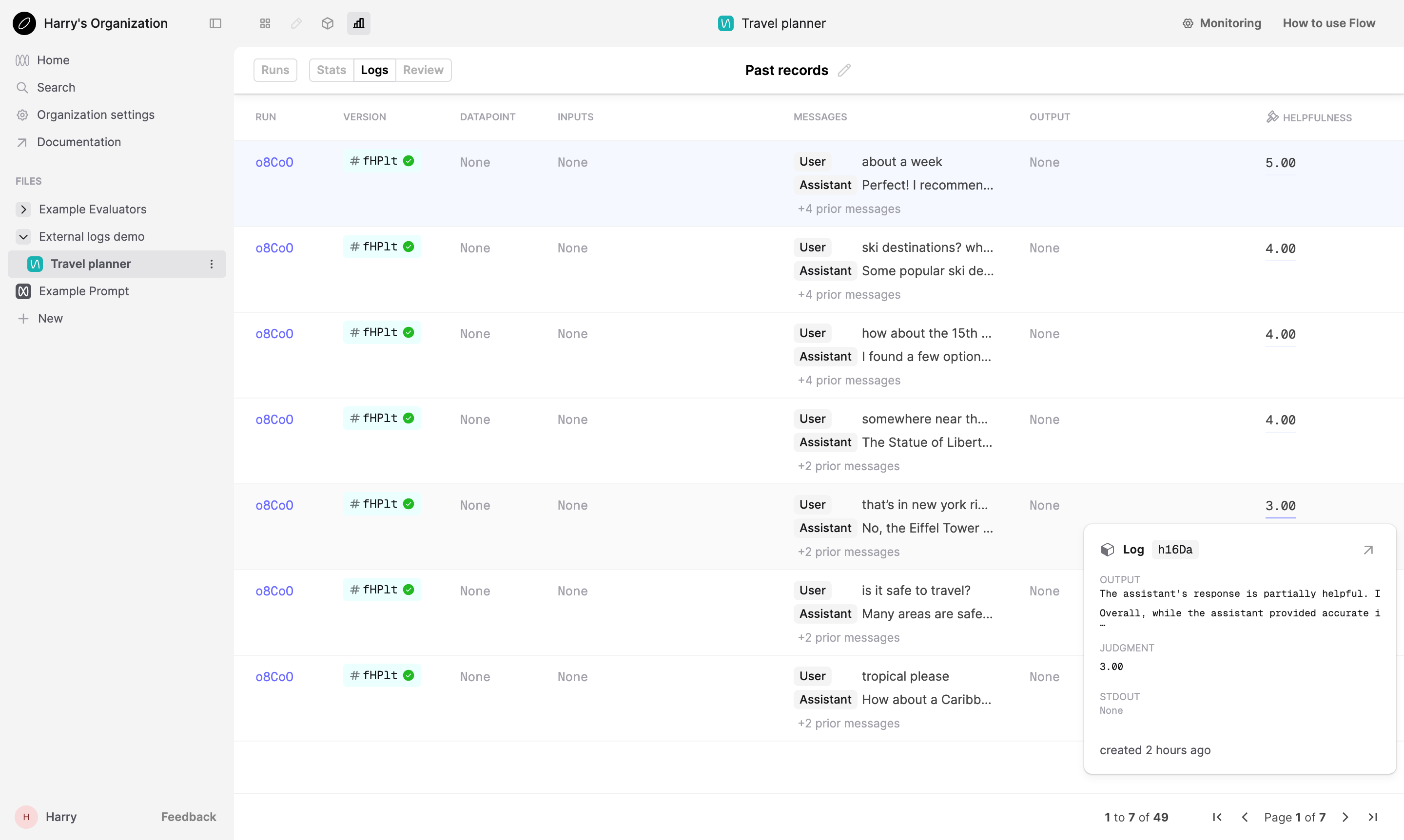

This creates a new Flow on Humanloop named Travel planner. To confirm the upload succeeded, navigate to the Logs tab of the Flow and view the uploaded logs. Each Log should correspond to one conversation and contain its list of messages.

We will also use the Flow version created here when creating our Run.

Create an Evaluation Run

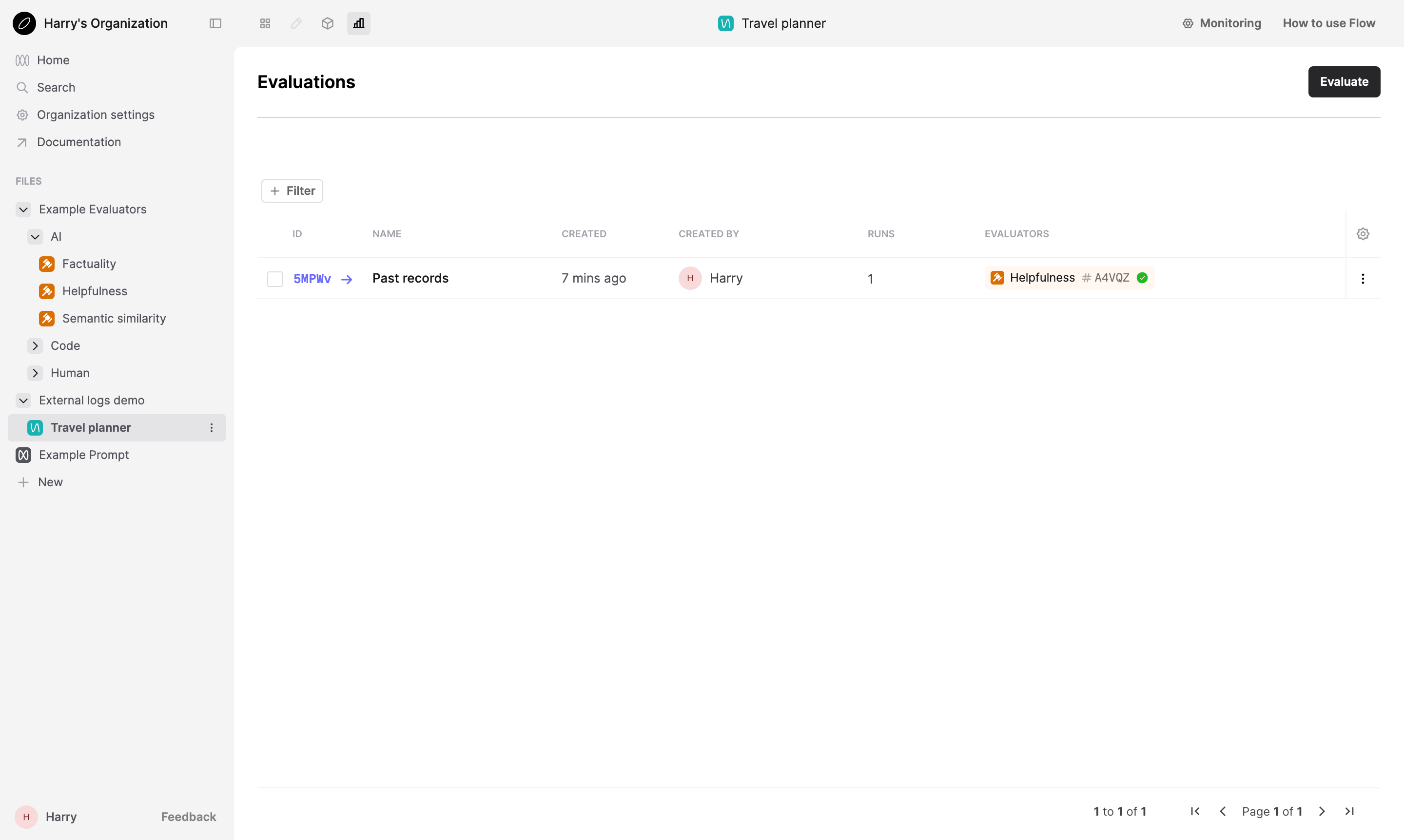

Next, create an Evaluation on Humanloop. Within the Evaluation, create a Run which will contain the Logs.

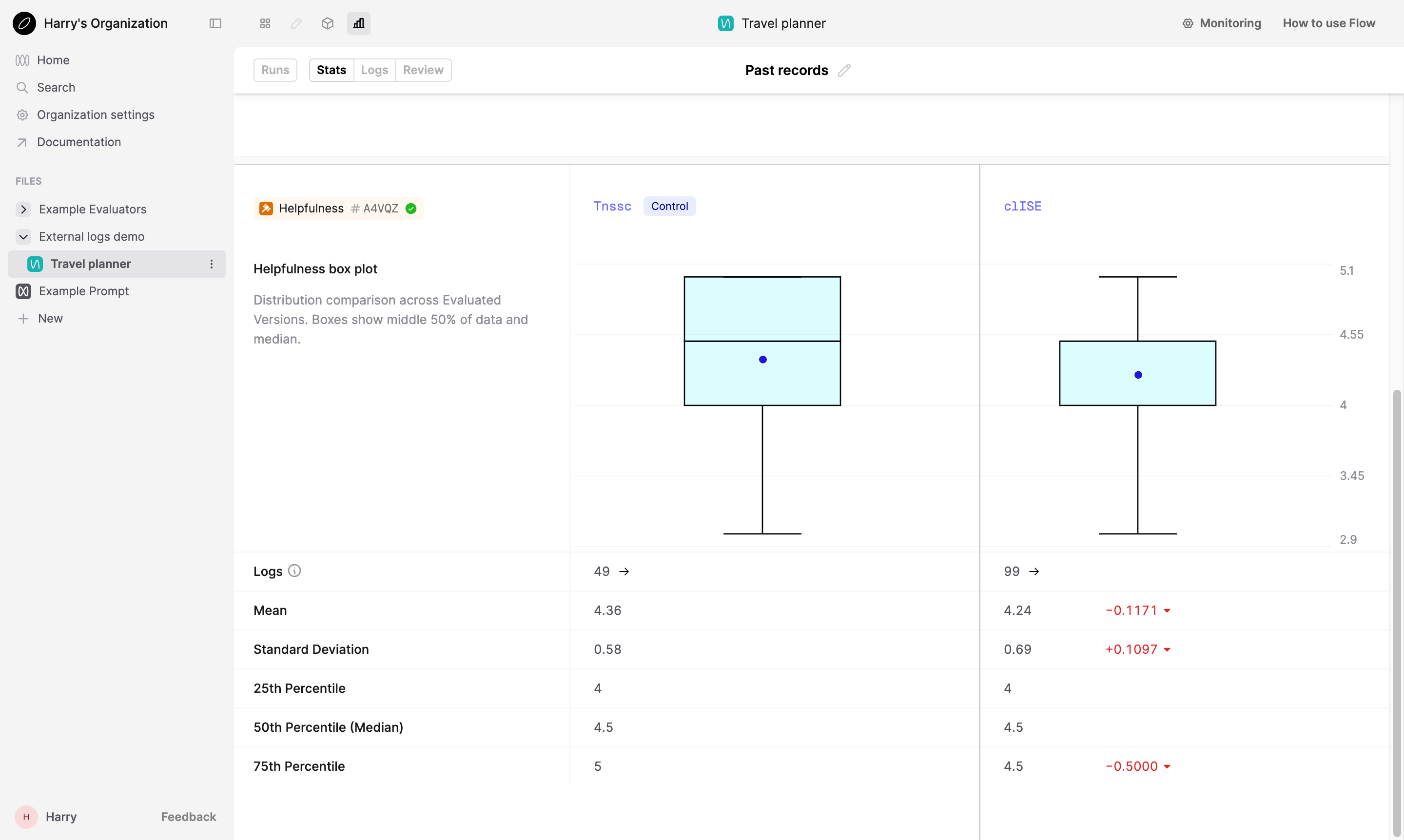

Here, we’ll use the example “Helpfulness” LLM-as-a-judge Evaluator. This will automatically rate the helpfulness of the support agent across our logs.

Review the Evaluation

You have now created an Evaluation on Humanloop and added Logs to it.

Go to the Humanloop UI to view the Evaluation. Within the Evaluation, go to the Logs tab, where you can view your uploaded logs alongside the Evaluator judgments.

The following steps will guide you through adding a different set of logs to a new Run for comparison.

Upload new logs

If you already have an Evaluation that you want to add a new set of logs to, you can start from here.

To start from this point, retrieve the ID of the Evaluation you want to add logs to: open that Evaluation in the Humanloop UI and copy its ID from the URL. This is the segment of the URL after evaluations/, e.g. evr_....
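If you would rather not copy the ID by hand, the rule above (take the path segment after evaluations/) is easy to script. This is a minimal sketch using only the standard library; the example URL in the usage note is hypothetical and only illustrates where the ID sits.

```python
from urllib.parse import urlparse


def evaluation_id_from_url(url: str) -> str:
    """Return the path segment that follows "evaluations/" in a URL."""
    # Split the URL path into non-empty segments, ignoring query and fragment.
    segments = [s for s in urlparse(url).path.split("/") if s]
    try:
        index = segments.index("evaluations")
    except ValueError:
        raise ValueError(f"no 'evaluations' segment in {url!r}") from None
    if index + 1 >= len(segments):
        raise ValueError(f"no ID after 'evaluations/' in {url!r}")
    return segments[index + 1]
```

For a hypothetical URL such as https://app.humanloop.com/example/evaluations/evr_abc123/logs, this returns evr_abc123.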

Now that we have an Evaluation on Humanloop, we can add a separate set of logs to it and compare the performance across this set of logs to the previous set.

We could achieve this by repeating the steps above, but now that an Evaluation exists, there is a simpler, more direct way to add logs to a Run.

For this example, we’ll continue with the Evaluation created in the previous section and add a new Run with the data from conversations-b.json. These logs come from a prototype version of the support agent.

Create a new Run

Create a new Run within the Evaluation to hold this set of logs.

Log to the Run

Pass the run_id argument in your log(...) call to associate the Log with the Run.
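The pattern is one log(...) call per conversation, each tagged with the same run_id. In the sketch below, log is passed in as a plain callable standing in for the SDK's log(...) method, which keeps the example independent of any client object. Passing the messages under a messages keyword is an assumption about the SDK's parameters; run_id comes from the step above. The helper name log_to_run is hypothetical.

```python
from __future__ import annotations

from typing import Any, Callable

# Stand-in type for the SDK's log(...) method (an assumption, not the real signature).
LogFn = Callable[..., Any]


def log_to_run(
    log: LogFn,
    conversations: list[list[dict[str, Any]]],
    run_id: str,
    **common: Any,
) -> list[Any]:
    """Call log(...) once per conversation, tagging every call with run_id.

    Extra keyword arguments in `common` (for example, the Flow path or
    attributes) are forwarded unchanged to every call.
    """
    return [
        log(messages=conversation, run_id=run_id, **common)
        for conversation in conversations
    ]
```

Injecting the log function rather than hard-coding a client also makes it easy to dry-run the upload with a function that just records its arguments.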

Next steps

The above examples demonstrate how you can quickly populate an Evaluation Run with your logs.

- You can extend this Evaluation with custom Evaluators, such as using Code Evaluators to calculate metrics, or using Human Evaluators to set up your Logs to be reviewed by your subject-matter experts.

- Now that you’ve set up an Evaluation, explore the other File types on Humanloop to see how they can better reflect your production systems, and how you can use Humanloop to version-control them. Here, we’ve used a Flow to represent a black-box system.