Set up logging

This quickstart takes a chat agent and adds Humanloop logging to it so you can observe and reason about its behavior.

Prerequisites

Account setup

Create a Humanloop Account

If you haven’t already, create an account or log in to Humanloop.

Add an OpenAI API Key

If you’re the first person in your organization, you’ll need to add an API key to a model provider.

- Go to OpenAI and grab an API key.

- In Humanloop Organization Settings, set up OpenAI as a model provider.

Using the Prompt Editor will use your OpenAI credits in the same way that the OpenAI playground does. Keep your API keys for Humanloop and the model providers private.

Install dependencies

Install the Humanloop SDK and an OpenAI client library for your language, since the steps below use both.

Create the chat agent

To demonstrate how to add logging, we will start with a chat agent that answers math and science questions.

Create a script and add the following:
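
The script itself is not reproduced on this page, so the following is only a minimal sketch of what such an agent could look like, not the quickstart's exact code. It assumes a `calculator` tool, a `call_model` function that receives the message history and returns the reply text, and a `conversation` loop that ends when the user types exit. All names and signatures here are illustrative assumptions. To keep the sketch runnable without credentials, the model call is passed in as a parameter and `echo_model` stands in for it; in a real agent that function would wrap a request to OpenAI.

```python
from typing import Callable

Message = dict[str, str]


def calculator(operation: str, num1: float, num2: float) -> float:
    """Arithmetic tool the agent can use (hypothetical signature)."""
    if operation == "add":
        return num1 + num2
    if operation == "subtract":
        return num1 - num2
    if operation == "multiply":
        return num1 * num2
    if operation == "divide":
        if num2 == 0:
            raise ValueError("Cannot divide by zero")
        return num1 / num2
    raise ValueError(f"Unsupported operation: {operation}")


def conversation(
    call_model: Callable[[list[Message]], str],
    read_input: Callable[[str], str] = input,
) -> list[Message]:
    """Chat loop: reads user input until 'exit' and returns the history."""
    messages: list[Message] = [
        {"role": "system", "content": "You answer math and science questions."}
    ]
    while True:
        user_input = read_input("You: ")
        if user_input.strip().lower() == "exit":
            break
        messages.append({"role": "user", "content": user_input})
        reply = call_model(messages)
        messages.append({"role": "assistant", "content": reply})
        print(f"Agent: {reply}")
    return messages


def echo_model(messages: list[Message]) -> str:
    """Offline placeholder for the model call (assumption, not OpenAI)."""
    return f"(model reply to: {messages[-1]['content']})"
```

Calling `conversation(echo_model)` starts an interactive session in the terminal. Passing the model call as an argument is a choice made for this sketch so that the loop can be exercised without an API key.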

Log to Humanloop

Use the SDK decorators to enable logging. At runtime, every call to a decorated function will create a Log on Humanloop.
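
To illustrate the idea of one Log per decorated call, here is a sketch that uses a local stand-in decorator rather than the Humanloop SDK. The `logged` decorator, the `LOGS` list, the `add` function, and the path string are all hypothetical. The real SDK decorators send Logs to Humanloop instead of appending to a list, and their exact names and arguments should be taken from the SDK reference.

```python
import functools
from typing import Any, Callable

# Local stand-in for the Logs that would appear on Humanloop.
LOGS: list[dict[str, Any]] = []


def logged(path: str) -> Callable:
    """Hypothetical decorator: records one log entry per call."""

    def decorator(func: Callable) -> Callable:
        @functools.wraps(func)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            output = func(*args, **kwargs)
            LOGS.append(
                {
                    "path": path,
                    "inputs": {"args": args, "kwargs": kwargs},
                    "output": output,
                }
            )
            return output

        return wrapper

    return decorator


@logged(path="Logging Quickstart/Calculator")  # illustrative path
def add(num1: float, num2: float) -> float:
    return num1 + num2


add(1, 2)
add(num1=3, num2=4)
print(len(LOGS))  # 2: one entry per call
```

The function body stays unchanged; only the decorator line is added, which is what makes this style of instrumentation low-friction.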

Run the code

Have a conversation with the agent. When you’re done, type exit to close the program.

Check your workspace

Navigate to your workspace to see the logged conversation.

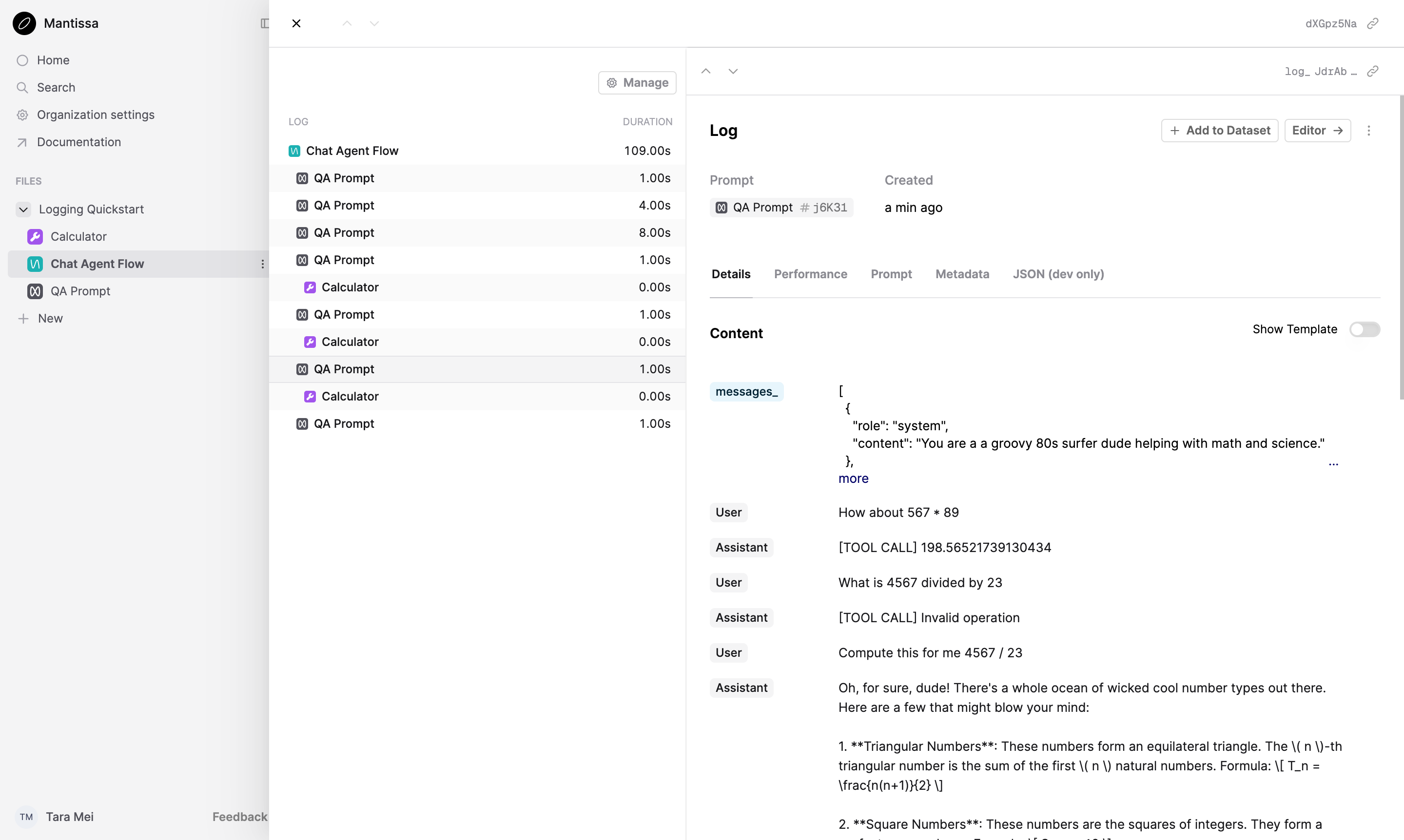

Inside the Logging Quickstart directory on the left, click the QA Agent Flow. Select the Logs tab from the top of the page and click the Log inside the table.

You will see the conversation’s trace, containing Logs corresponding to the Tool and the Prompt.

Change the agent and rerun

Modify the call_model function to use a different model and temperature.
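
As a sketch of what this change could look like, the snippet below gathers the model name and temperature into the arguments for a chat request and compares the settings before and after the edit. `build_request` is a hypothetical helper, and the model names and temperatures are example values, not the ones used in the original script.

```python
from typing import Any


def build_request(
    messages: list[dict[str, str]],
    model: str = "gpt-4o",
    temperature: float = 0.0,
) -> dict[str, Any]:
    """Hypothetical helper: collects the arguments call_model would send."""
    return {"model": model, "temperature": temperature, "messages": messages}


messages = [{"role": "user", "content": "Why is the sky blue?"}]

before = build_request(messages)
# The change: a different model and a higher temperature (example values).
after = build_request(messages, model="gpt-4o-mini", temperature=0.7)

# Only the hyperparameters differ; the conversation itself is untouched.
changed = {key for key in before if before[key] != after[key]}
print(sorted(changed))  # ['model', 'temperature']
```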

Run the agent again, then head back to your workspace.

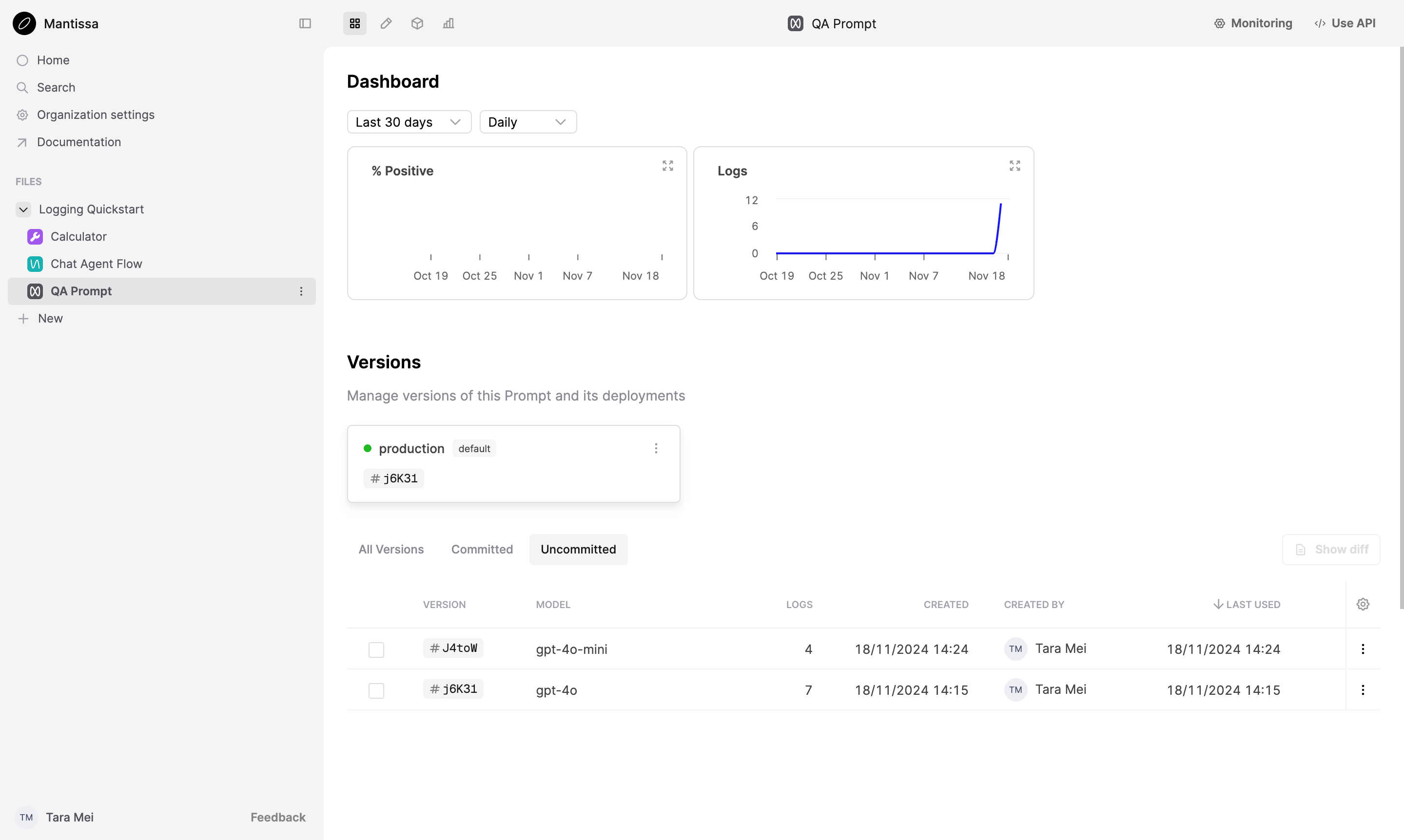

Click the Prompt named QA Prompt and select the Dashboard tab at the top of the page. You should see a new version of the Prompt at the top of the list.

By changing the hyperparameters of the OpenAI call, you have tagged a new version of the Prompt.

Next steps

Logging is the first step to observing your AI product. Follow up with these guides on monitoring and evals:

- Add monitoring Evaluators to evaluate Logs as they’re made against a File.

- Explore evals to improve the performance of your AI feature in our guide on running an Evaluation.

- See logging in action on a complex example in our tutorial on evaluating an agent.